Overview of MuCGEC: A Multi-Reference Multi-Source Evaluation Dataset for Chinese Grammatical Error Correction

The paper "MuCGEC: A Multi-Reference Multi-Source Evaluation Dataset for Chinese Grammatical Error Correction" presents an evaluation dataset designed to address two limitations of existing Chinese Grammatical Error Correction (CGEC) evaluation data: reliance on a single reference per sentence and on a single text source. The dataset, MuCGEC, contains 7,063 sentences written by learners of Chinese as a Second Language, each annotated with multiple high-quality references. The researchers aim to provide a more comprehensive and reliable resource that supports CGEC research and makes model evaluation more trustworthy.

Key Contributions

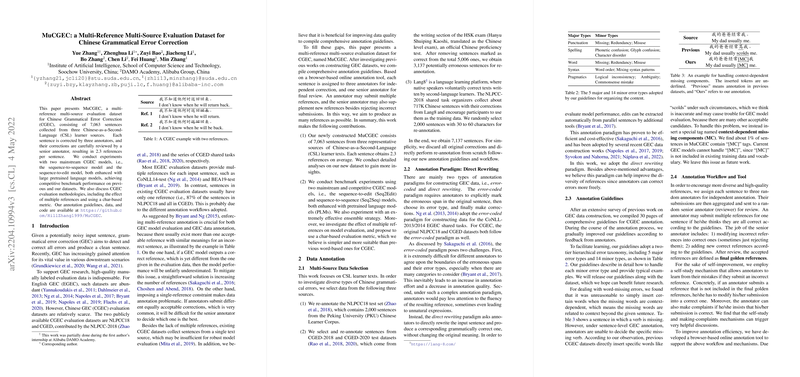

- Multi-Source Data Collection: MuCGEC comprises data from three distinct sources: the NLPCC18 test set, CGED test datasets, and Lang8. This multi-source approach is intended to cover a diverse array of error types and provide more generalizable evaluation metrics.

- Multi-Reference Annotations: Unlike previous datasets with single-reference annotations, MuCGEC provides an average of 2.3 references per sentence. Each sentence is corrected by three annotators, and their corrections are reviewed by a senior annotator. This makes model evaluation fairer and more accurate, because a system is not penalized for producing a valid correction that differs from the one a single annotator happened to choose.

- Annotation Techniques: The paper advocates for the direct rewriting paradigm over error-coded annotation, arguing it is cost-effective and improves the naturalness of corrections. The researchers provide detailed annotation guidelines, addressing issues like context-dependent missing components with special tags like "MC".

- Evaluation Methodology: The paper emphasizes char-based span-level evaluation metrics instead of traditional word-based evaluations. This approach reduces dependency on potentially erroneous word segmentation and aligns well with the inherent properties of the Chinese language.

- Benchmarking with State-of-the-Art Models: The research evaluates two mainstream CGEC model families, Seq2Edit and Seq2Seq, each enhanced with pretrained language models. An ensemble strategy that combines multiple instances of these models yields substantial performance improvements on MuCGEC, which also shows that the dataset can distinguish stronger systems from weaker ones.
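The char-based, multi-reference evaluation described above can be illustrated with a minimal sketch. It is not the paper's official scorer: the alignment here uses Python's `difflib` as a stand-in, the per-sentence "best reference" selection and the F0.5 weighting are assumptions borrowed from common GEC practice, and the names `char_edits`, `f_beta`, and `score_sentence` are hypothetical.

```python
from difflib import SequenceMatcher


def char_edits(source, target):
    """Return the set of char-level span edits (start, end, replacement)
    that turn `source` into `target`. No word segmentation is involved."""
    matcher = SequenceMatcher(None, source, target, autojunk=False)
    return {
        (i1, i2, target[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    }


def f_beta(tp, fp, fn, beta=0.5):
    """Precision, recall and F_beta from edit counts.
    Empty denominators are treated as a perfect score (an assumption)."""
    p = tp / (tp + fp) if tp + fp else 1.0
    r = tp / (tp + fn) if tp + fn else 1.0
    if p + r == 0:
        return p, r, 0.0
    f = (1 + beta ** 2) * p * r / (beta ** 2 * p + r)
    return p, r, f


def score_sentence(source, hypothesis, references, beta=0.5):
    """Score a hypothesis against every reference and keep the best F_beta."""
    hyp = char_edits(source, hypothesis)
    best = None
    for ref in references:
        gold = char_edits(source, ref)
        tp = len(hyp & gold)
        fp = len(hyp - gold)
        fn = len(gold - hyp)
        scores = f_beta(tp, fp, fn, beta)
        if best is None or scores[2] > best[2]:
            best = scores
    return best


source = "我今天很高心"
hypothesis = "我今天很高兴"
references = ["我今天很高兴", "我今天非常高兴"]

multi = score_sentence(source, hypothesis, references)
single = score_sentence(source, hypothesis, references[1:])
print(multi, single)
```

In this toy case the hypothesis matches the first reference exactly, so the multi-reference score is perfect, while scoring against the second reference alone lowers recall. This is the kind of underestimation that multiple references are meant to reduce.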

Experimental Results

In the reported experiments, both the Seq2Edit and Seq2Seq models achieve competitive results on MuCGEC, and ensembling them yields a noticeable further improvement. The paper also reports higher precision when system outputs are scored against multiple references rather than a single one, which supports the multi-reference design.
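One simple way to combine the outputs of several correction models is edit-level voting, sketched below. This summary does not spell out the paper's exact ensembling procedure, so the voting rule, the `min_votes` threshold, and the function names `char_edits` and `vote_ensemble` are assumptions made for illustration only.

```python
from collections import Counter
from difflib import SequenceMatcher


def char_edits(source, target):
    """Char-level span edits (start, end, replacement) from source to target."""
    matcher = SequenceMatcher(None, source, target, autojunk=False)
    return {
        (i1, i2, target[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    }


def vote_ensemble(source, hypotheses, min_votes):
    """Keep only edits proposed by at least `min_votes` hypotheses,
    then apply the surviving edits to the source from left to right."""
    counts = Counter()
    for hyp in hypotheses:
        # Each hypothesis contributes at most one vote per distinct edit.
        counts.update(char_edits(source, hyp))

    kept = sorted(
        (edit for edit, votes in counts.items() if votes >= min_votes),
        key=lambda edit: (edit[0], edit[1]),
    )

    pieces = []
    cursor = 0
    for start, end, replacement in kept:
        if start < cursor:
            continue  # skip an edit that overlaps one already applied
        pieces.append(source[cursor:start])
        pieces.append(replacement)
        cursor = end
    pieces.append(source[cursor:])
    return "".join(pieces)


source = "我今天很高心"
hypotheses = ["我今天很高兴", "我今天很高兴", "我今天非常高心"]

print(vote_ensemble(source, hypotheses, min_votes=2))
print(vote_ensemble(source, hypotheses, min_votes=1))
```

A higher `min_votes` keeps only edits that several models agree on, which tends to favor precision over recall; a threshold of 1 accepts every proposed edit.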

Theoretical and Practical Implications

The MuCGEC dataset sets a new standard for CGEC resources by mitigating issues of underestimation inherent in single-reference datasets. Practically, it provides a robust platform for developing more effective grammatical correction systems, critical for language learning applications. Theoretically, it lays the groundwork for future research to explore diverse Chinese grammatical constructions and error types.

Future Directions

Future research can further exploit MuCGEC for developing novel correction algorithms, while also expanding annotation strategies for additional native and non-native language texts. The dataset encourages exploration of more sophisticated ensemble techniques and advanced neural architectures.

Conclusion

MuCGEC stands out as a significant advancement in CGEC research resources, with its multi-source, multi-reference construction fostering a more nuanced understanding of grammatical errors and correction techniques in Chinese. This dataset not only supports improved model evaluation but also paves the way for innovative NLP solutions in educational and professional contexts.