Overview of LayoutLMv3: Pre-training for Document AI with Unified Text and Image Masking

The presented paper discusses LayoutLMv3, a model designed to enhance the performance of Document AI by unifying the pre-training tasks for textual and visual modalities through a novel approach of text and image masking. LayoutLMv3 stands out in the field of multimodal representation learning by addressing inconsistencies in pre-training objectives between text and image modalities, which have hindered effective cross-modal alignment in prior models. The unified architecture and training objectives aim to equip LayoutLMv3 to excel in both text-centric and image-centric document analysis tasks.

Key Contributions

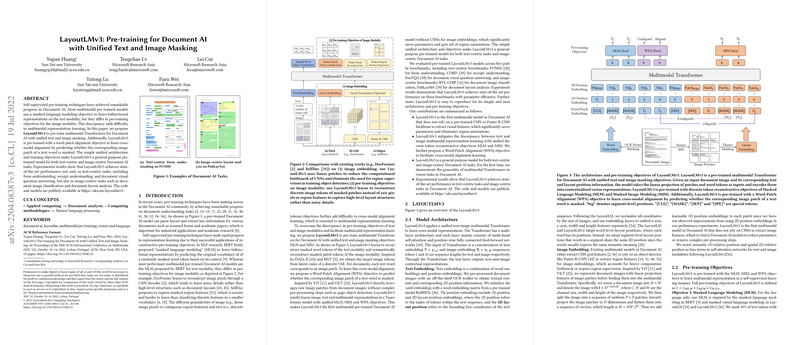

- Unified Text and Image Masking: LayoutLMv3 employs a coherent masking strategy across text and image inputs. This involves the reconstruction of both masked word tokens and image patch tokens, aiming for a balanced learning of textual and visual features. The methodology draws inspiration from models like DALL-E and BEiT.

- Word-Patch Alignment Objective: This approach introduces cross-modal alignment capabilities by designing a task to predict the masking status of an image patch associated with a specific word. It anchors text and image modalities, promoting superior multimodal representation.

- Elimination of CNN and Region Features: Unlike previous Document AI models, LayoutLMv3 avoids dependency on convolutional neural networks or object detection models like Faster R-CNN for feature extraction, instead using linear embeddings for image patches. This design choice drastically reduces parameter count and computational overhead.

- State-of-the-Art Performance: The model demonstrates top performance in multiple benchmark datasets, including text-centric tasks (form and receipt understanding on FUNSD and CORD and document visual question answering on DocVQA) and image-centric tasks (document image classification on RVL-CDIP and layout analysis on PubLayNet).

Implications and Future Directions

The implications of this research are twofold: for practical applications, LayoutLMv3 provides a highly efficient and scalable solution to document understanding tasks, reducing both resource requirements and system complexity. Theoretically, this research contributes to the understanding of cross-modal representation learning by establishing a unified pre-training strategy that balances the disparate objectives of text and image modalities.

Future research might examine scaling LayoutLMv3 to manage even larger datasets or more diverse document types. Moreover, further exploration could focus on zero-shot or few-shot learning scenarios, which have practical relevance in rapidly evolving and varied document understanding needs. Additionally, while the current paper is predominantly in English, extending the model's capabilities to other languages could significantly broaden its applicability and impact.

In summary, the paper delivers substantive advancements in the field of multimodal AI, employing innovative methods to achieuspdat performance improvements while mitigating traditional limitations associated with document preprocessing and feature extraction.