Multi-Stage Prompting for Knowledgeable Dialogue Generation

The paper "Multi-Stage Prompting for Knowledgeable Dialogue Generation" introduces a method for improving knowledge-grounded dialogue systems by prompting a pretrained language model (LM) in multiple stages. The approach targets two limitations of existing knowledge-grounded dialogue systems: their dependence on large external knowledge bases and their need for finetuned LMs.

Key Contributions

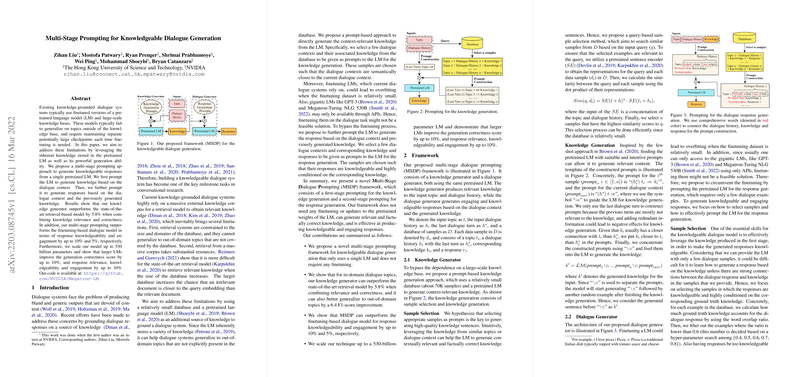

- Multi-Stage Dialogue Prompting Framework: The framework consists of two distinct stages. Initially, it generates knowledge based on dialogue context using a pretrained LM. Subsequently, it generates responses by integrating dialogue context with the previously generated knowledge, again employing a pretrained LM.

- Reduced Dependency on Large Knowledge Bases: By drawing on the knowledge stored in a pretrained LM and on its generation ability, the approach avoids finetuning and heavy reliance on large external corpora, both of which can limit generalization to out-of-domain topics.

- Significant Performance Improvement: The knowledge generator outperforms state-of-the-art retrieval-based models by 5.8% on combined knowledge relevance and correctness. The prompting approach also improves response knowledgeability and engagement over finetuning-based dialogue models by up to 10% and 5%, respectively.

- Scalability with Larger Models: Scaling the model up to 530 billion parameters improved generation correctness by up to 10%, and improved response relevance, knowledgeability, and engagement by up to 10% as well.
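The two-stage flow described in the first contribution can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: the `LM` callable interface, the `Context:` / `Knowledge:` / `Response:` prompt layout, the `first_line` post-processing, and the `stub_lm` stand-in are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt string to generated text.
LM = Callable[[str], str]


def knowledge_prompt(examples: List[Tuple[str, str]], context: str) -> str:
    """Stage 1 prompt: few-shot (context, knowledge) pairs, then the open query."""
    shots = [f"Context: {c}\nKnowledge: {k}" for c, k in examples]
    shots.append(f"Context: {context}\nKnowledge:")
    return "\n\n".join(shots)


def response_prompt(
    examples: List[Tuple[str, str, str]], context: str, knowledge: str
) -> str:
    """Stage 2 prompt: few-shot (context, knowledge, response) triples, then the query."""
    shots = [f"Context: {c}\nKnowledge: {k}\nResponse: {r}" for c, k, r in examples]
    shots.append(f"Context: {context}\nKnowledge: {knowledge}\nResponse:")
    return "\n\n".join(shots)


def first_line(text: str) -> str:
    """Keep only the first non-empty line of a generation (assumed post-processing)."""
    for line in text.splitlines():
        if line.strip():
            return line.strip()
    return ""


def two_stage_reply(
    lm: LM,
    k_examples: List[Tuple[str, str]],
    r_examples: List[Tuple[str, str, str]],
    context: str,
) -> Tuple[str, str]:
    """Generate knowledge first, then a response conditioned on that knowledge."""
    knowledge = first_line(lm(knowledge_prompt(k_examples, context)))
    response = first_line(lm(response_prompt(r_examples, context, knowledge)))
    return knowledge, response


def stub_lm(prompt: str) -> str:
    """Stand-in for a real pretrained LM; it only exercises the control flow."""
    if prompt.endswith("Response:"):
        return " a canned response\n"
    return " a canned fact\n"
```

Because the LM is passed in as a plain callable, the same pipeline could in principle be run with models of different sizes without changing the prompting code, which is the property the scaling experiments rely on.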

Methodology

The framework, named Multi-Stage Dialogue Prompting (MSDP), splits generation into a knowledge generation stage and a dialogue response generation stage:

- Knowledge Generation: Instead of a large-scale knowledge base, the paper uses a compact database and a pretrained LM to generate context-relevant knowledge. To improve the relevance and accuracy of the generated knowledge, the authors apply query-based sample selection, which picks examples that are semantically similar to the current query to serve as the prompt.

- Dialogue Response Generation: This stage also requires no finetuning. Its prompts are built through sample selection that favors examples whose responses are knowledgeable and closely grounded in the corresponding knowledge.
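The two selection ideas above can be illustrated with a short sketch. It assumes a toy bag-of-words vector in place of whatever learned sentence encoder a real system would use, and a simple token-overlap score as a proxy for how strongly a response is grounded in its knowledge. The function names (`embed`, `cosine`, `select_examples`, `grounding_score`) and the scoring rules are hypothetical and are not taken from the paper.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokens(text: str) -> List[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; an assumed stand-in for a sentence encoder."""
    return Counter(tokens(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_examples(
    query: str, database: List[Tuple[str, str]], k: int = 2
) -> List[Tuple[str, str]]:
    """Return the k (context, knowledge) pairs whose context best matches the query."""
    q = embed(query)
    ranked = sorted(database, key=lambda pair: cosine(q, embed(pair[0])), reverse=True)
    return ranked[:k]


def grounding_score(response: str, knowledge: str) -> float:
    """Fraction of knowledge tokens that reappear in the response (assumed proxy)."""
    k_tokens = set(tokens(knowledge))
    r_tokens = set(tokens(response))
    return len(k_tokens & r_tokens) / len(k_tokens) if k_tokens else 0.0
```

In this sketch, `select_examples` would supply the few-shot examples for the knowledge generation prompt, while `grounding_score` could be used to rank candidate examples for the response generation prompt so that responses closely tied to their knowledge come first.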

Evaluation and Results

The evaluation used the Wizard of Wikipedia (WoW) and Wizard of Internet (WoI) datasets. MSDP achieved substantial gains in both automatic and human evaluations, most notably in out-of-domain generalization settings such as the WoI dataset. The paper also shows that larger LMs significantly improve the quality of generated dialogue.

Implications and Future Directions

This research holds substantial implications for the development of more adaptive and contextually aware dialogue systems. Eliminating finetuning reduces computational overhead and complexity associated with updating large models, while also enhancing the model's ability to handle diverse and unexpected conversational topics.

Future research could further optimize the sample selection strategy to improve performance when the dialogue context is ambiguous, and could explore additional domains for which extensive curated knowledge bases may not exist. Investigating the interpretability and internal mechanisms of such large-scale LMs could also yield insights for optimizing dialogue generation.