RegionCLIP: Region-based Language-Image Pretraining

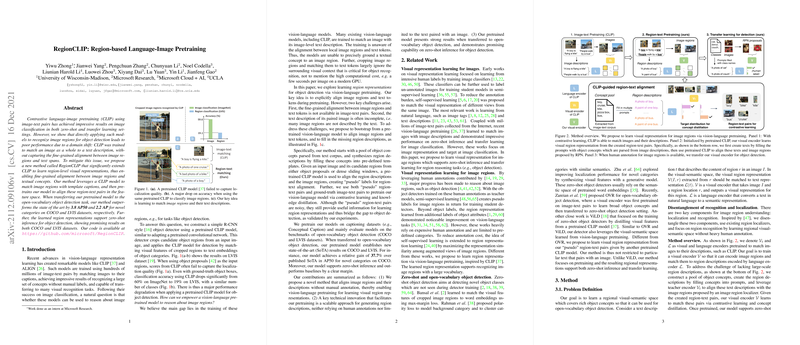

The paper "RegionCLIP: Region-based Language-Image Pretraining" presents an approach for improving how vision-language models handle tasks that involve image regions. It targets a limitation of models like CLIP when applied to object detection: because they are trained to match whole images with text, they often fail to align specific image regions with the corresponding textual concepts. The paper introduces RegionCLIP, a region-level pretraining strategy designed to improve this alignment.

Key Contributions

- Region-Level Representation Learning: The paper extends CLIP to learn region-level visual representations, enabling fine-grained alignment between image regions and textual concepts.

- Improved Object Detection Performance: The paper reports improvements over the prior state of the art on open-vocabulary object detection benchmarks. For novel categories, RegionCLIP outperforms earlier methods by 3.8 AP50 on COCO and 2.2 AP on LVIS.

- Scalable Approach to Region-Text Alignment: The proposed method involves synthesizing region descriptions from large pools of object concepts and aligning these with image regions through a pretrained CLIP model. This effectively generates pseudo labels for region-text pairs, which are employed for contrastive learning and knowledge distillation.

- Zero-shot Inference Capabilities: The learned region representations also support zero-shot inference for object detection, with results reported on the COCO and LVIS datasets.
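
To make the zero-shot idea concrete, here is a minimal sketch of classifying region embeddings against text embeddings of category names. It is an illustration only, not the paper's implementation: the function names, the toy 3-dimensional embeddings, and the temperature value are all assumptions, and a real system would take its embeddings from the pretrained visual and text encoders.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def softmax(scores, temperature=0.01):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def classify_regions(region_embs, class_names, text_embs, temperature=0.01):
    """Assign each region the class whose text embedding is most similar."""
    results = []
    for r in region_embs:
        probs = softmax([cosine(r, t) for t in text_embs], temperature)
        best = max(range(len(probs)), key=probs.__getitem__)
        results.append((class_names[best], probs[best]))
    return results

# Toy embeddings standing in for real encoder outputs (hypothetical values).
class_names = ["dog", "umbrella"]
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
region_embs = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]]
predictions = classify_regions(region_embs, class_names, text_embs)
```

Because the category list is just a set of text embeddings, new categories can be added at inference time by embedding their names, without retraining the classifier.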

Methodology

The RegionCLIP approach is distinct in its application of contrastive language-image pretraining at a regional level. The process involves the following steps:

- Creating Region Descriptions: Object concepts are parsed from image descriptions and inserted into templates to create synthesized region descriptions, which serve as the text candidates for image regions.

- Pseudo Label Generation: A pretrained CLIP model matches the synthesized descriptions to candidate image regions, yielding pseudo region-text pairs that serve as training data without additional manual annotation.

- Pretraining and Knowledge Distillation: The model is pretrained using both these pseudo region-text pairs and existing image-text pairs, employing contrastive and distillation losses to refine the regional representations.
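
The steps above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the authors' code: the template string, the similarity threshold, the temperature `tau`, and every function name are hypothetical, and the image-level contrastive term on image-text pairs is omitted for brevity.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

def log_softmax(logits):
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]

def make_prompts(concepts, template="a photo of a {}"):
    """Fill a prompt template with each concept parsed from the captions."""
    return [template.format(c) for c in concepts]

def pseudo_label(teacher_regions, text_embs, threshold=0.8):
    """Pair each region with its best-matching concept index, or None if weak."""
    labels = []
    for r in teacher_regions:
        sims = [dot(normalize(r), normalize(t)) for t in text_embs]
        best = max(range(len(sims)), key=sims.__getitem__)
        labels.append(best if sims[best] >= threshold else None)
    return labels

def region_losses(student_regions, teacher_regions, text_embs, labels, tau=0.1):
    """Average contrastive and distillation losses over pseudo-labeled regions."""
    contrastive, distill, count = 0.0, 0.0, 0
    for s, t, y in zip(student_regions, teacher_regions, labels):
        if y is None:
            continue  # skip regions without a confident pseudo label
        s_logp = log_softmax([dot(normalize(s), normalize(e)) / tau for e in text_embs])
        t_logp = log_softmax([dot(normalize(t), normalize(e)) / tau for e in text_embs])
        contrastive += -s_logp[y]  # cross-entropy against the pseudo label
        # KL(teacher || student) over the region-to-concept distribution
        distill += sum(math.exp(tl) * (tl - sl) for tl, sl in zip(t_logp, s_logp))
        count += 1
    if count == 0:
        return 0.0, 0.0
    return contrastive / count, distill / count

# Toy 2-d embeddings standing in for encoder outputs (hypothetical values).
prompts = make_prompts(["dog", "kite"])
text_embs = [[1.0, 0.0], [0.0, 1.0]]
teacher_regions = [[0.9, 0.1], [0.1, 0.9], [0.7, 0.7]]
student_regions = [[0.6, 0.4], [0.3, 0.7], [0.5, 0.5]]
labels = pseudo_label(teacher_regions, text_embs)
contrastive_loss, distill_loss = region_losses(
    student_regions, teacher_regions, text_embs, labels
)
```

In this sketch the third region is equally similar to both concepts, so it falls below the threshold and contributes to neither loss. The distillation term goes to zero when the student reproduces the teacher's region-to-concept distribution.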

Implications and Future Directions

The implications of this research are both practical and theoretical. On the practical side, it improves object detection performance without extensive manual annotation, which broadens the applicability of vision-language models to diverse datasets.

Theoretically, this work pushes the conventional boundaries of vision-language models by demonstrating the feasibility and benefits of region-level representations. Future work might explore token-level language embeddings, the integration of object attributes, and relations between image regions to complement holistic object recognition. Extending the approach to larger and more varied datasets could further establish its role in future visual recognition systems.

In conclusion, the RegionCLIP method introduced in this paper represents a significant advancement in the field, offering a refined strategy for aligning visual and textual modalities at a granular level. The achievements in object detection benchmarks indicate the potential for broader applications and set a foundation for future innovations in AI-driven visual understanding.