Learning to Prompt for Continual Learning: A Technical Overview

The paper "Learning to Prompt for Continual Learning" proposes an approach to continual learning (CL) that targets its central problem, catastrophic forgetting. In continual learning, a model is trained on tasks presented sequentially, so the data distribution is non-stationary, and learning new tasks tends to overwrite knowledge acquired on earlier ones.

Key Contributions

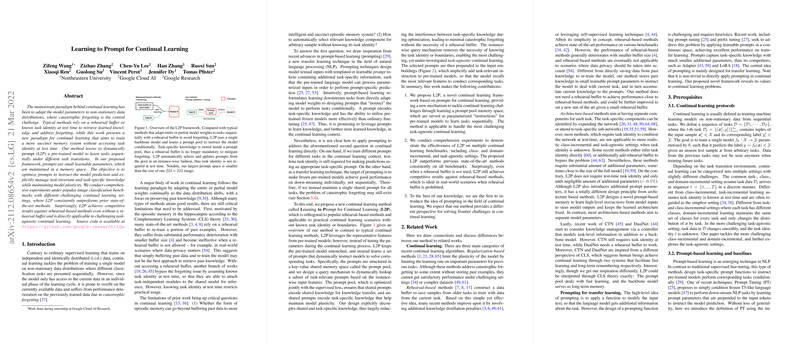

- Dynamic Prompting without Rehearsal Buffers: Traditional CL methods often mitigate forgetting with a rehearsal buffer that stores past samples for retraining. The proposed method instead builds on a pre-trained model and keeps a set of small learnable parameters, termed 'prompts', in a memory space. These prompts are dynamically selected and optimized, so tasks can be learned without a rehearsal buffer and without knowing the task identity at inference time.

- Instance-wise Query Mechanism: The framework selects relevant prompts for each input based on that input's features. Because selection happens per instance rather than per task, prompts can hold task-specific knowledge or be shared across tasks to carry task-invariant knowledge. The mechanism also supports task-agnostic settings, where task boundaries or identities are unknown at test time.

- Comprehensive Benchmarks and Results: The authors evaluate the approach in a series of experiments on popular benchmarks. The Learning to Prompt (L2P) framework surpasses existing state-of-the-art methods on standard image classification tasks. Notably, even without a rehearsal buffer, L2P achieves performance competitive with methods that depend on one.

- Introduction of Prompt-based Learning in CL: Borrowing the concept of prompt-based learning from natural language processing, the paper uses prompts to store and manage task-specific information within the model architecture. This shifts knowledge retrieval from buffering past data to learning high-level instructions embodied in prompts.
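The selection step described in the list above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation. It assumes that each prompt is paired with a key vector, that the query is a feature vector computed from the input, that matching uses cosine similarity, and that the top-N prompts are prepended to the input token embeddings. None of these details are spelled out in this overview, and the names `PromptPool`, `select`, and `prepend` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class PromptPool:
    """Hypothetical prompt pool: entry i pairs keys[i] with prompts[i].

    Each prompt is a list of token embeddings. In a trained system both keys
    and prompts would be learnable parameters; here they are fixed lists.
    """

    def __init__(self, keys: List[List[float]], prompts: List[List[List[float]]]):
        if len(keys) != len(prompts):
            raise ValueError("each prompt needs exactly one key")
        self.keys = keys
        self.prompts = prompts

    def select(self, query: Vector, top_n: int) -> List[int]:
        """Return indices of the top_n keys most similar to the query."""
        scores = [cosine_similarity(query, key) for key in self.keys]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        return ranked[:top_n]

    def prepend(self, query: Vector, input_tokens: List[List[float]], top_n: int) -> List[List[float]]:
        """Prepend the tokens of the selected prompts to the input tokens."""
        chosen = self.select(query, top_n)
        prompt_tokens = [token for i in chosen for token in self.prompts[i]]
        return prompt_tokens + list(input_tokens)


# Toy pool with three single-token prompts in a 2-dimensional embedding space.
pool = PromptPool(
    keys=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    prompts=[[[0.1, 0.1]], [[0.2, 0.2]], [[0.3, 0.3]]],
)
query = [0.9, 0.1]  # stand-in for a feature vector of one input instance
selected = pool.select(query, top_n=2)
extended = pool.prepend(query, [[0.5, 0.5], [0.6, 0.6]], top_n=2)
print(selected)       # [0, 2]
print(len(extended))  # 4
```

Note that `select` takes only a feature vector and never a task label, which is what allows the same procedure to run when task identity is unknown at test time.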

Implications and Future Directions

Theoretical Insights: The prompt pool appears to decouple knowledge components, which improves the model's ability to recall task-specific information dynamically. This is a significant step toward reducing the interference between learned tasks that affects most CL techniques.

Practical Applications: L2P is particularly valuable where memory constraints or privacy concerns rule out rehearsal buffers. Its task-agnostic capability suits real-world environments in which data arrives as a continuous stream without explicit task boundaries, such as robotics and adaptive control systems.

Speculative Advancements: Prompt techniques may inspire continual learning research beyond image classification, extending to multimodal and other complex real-world data. More efficient prompt update mechanisms, and the scalability of the approach to larger and more diverse datasets, are also worth exploring.

Conclusion

The framework sets a promising precedent for prompt-based methods in continual learning, offering a credible way to mitigate catastrophic forgetting without rehearsal mechanisms. Further work is needed to adapt and extend the method to broader applications, but the foundations introduced by L2P are a significant contribution to the field.