An In-Depth Examination of Explainable AI: Challenges and Future Directions

The paper "Explainable AI (XAI): A Systematic Meta-Survey of Current Challenges and Future Opportunities" offers a comprehensive meta-survey of the challenges and research directions in Explainable Artificial Intelligence (XAI). As AI systems grow more complex and are deployed in critical domains, transparency and comprehensibility become increasingly important. The authors, Waddah Saeed and Christian Omlin, consolidate these scattered challenges and research opportunities into a coherent roadmap for future XAI research.

Key Themes and Challenges in XAI

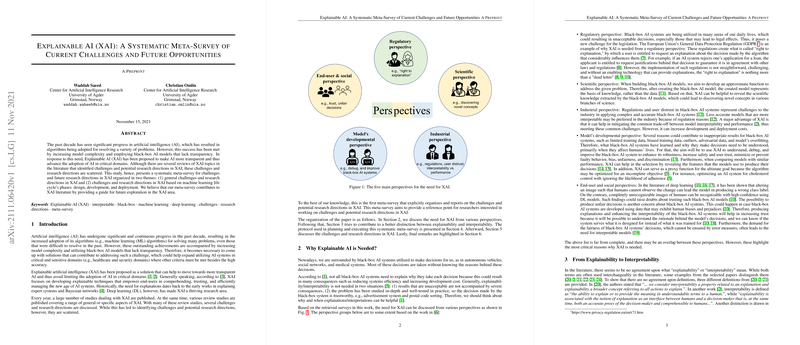

The paper organizes the challenges into two groups: general challenges, and challenges specific to individual phases of the machine learning lifecycle, namely the design, development, and deployment phases.

General Challenges

- Formalism in XAI: The authors stress the need for systematic definitions and rigorous evaluation. Without unified definitions and evaluation standards, XAI techniques are hard to compare, which slows progress. Formal quantification and abstraction are therefore seen as critical to advancing the field.

- Interdisciplinary Collaboration: The authors advocate for collaborative efforts with fields such as psychology, human-computer interaction, and neuroscience, to enhance understanding and development of XAI methods.

- Tailoring Explanations to User Expertise: Users need different kinds of explanations depending on their expertise and experience, so explanations must be tailored to the cognitive background of their audience.

- Trustworthy AI: XAI not only aids transparency but also supports accountability and fairness. Making AI systems trustworthy, however, also requires addressing biases and complying with regulatory requirements.

- Trade-off between Interpretability and Performance: A perennial issue in AI is the trade-off between predictive performance and interpretability. Complex models may achieve higher accuracy but tend to be less interpretable, and narrowing this gap is a central goal of XAI.
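The call for formal quantification and the interpretability/performance trade-off can be made concrete with fidelity, one commonly used evaluation measure (the summary does not say the authors prescribe it): the fraction of inputs on which a simple, interpretable surrogate reproduces a complex model's prediction. The sketch below is illustrative only; `black_box`, `surrogate`, and `fidelity` are hypothetical names, and the "complex" model is a hand-written nonlinear rule standing in for a trained one.

```python
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], int]

def black_box(x: Sequence[float]) -> int:
    """Hypothetical stand-in for a complex model: a nonlinear decision rule."""
    score = 0.8 * x[0] ** 2 + 0.5 * x[0] * x[1] - 0.3 * x[1]
    return int(score > 0.5)

def surrogate(x: Sequence[float]) -> int:
    """Hypothetical interpretable surrogate: a single human-readable threshold."""
    return int(x[0] > 0.7)

def fidelity(model: Model, explainer: Model, inputs) -> float:
    """Fraction of inputs on which the explainer reproduces the model's output."""
    if not inputs:
        raise ValueError("need at least one input")
    agreements = sum(model(x) == explainer(x) for x in inputs)
    return agreements / len(inputs)

# Evaluate agreement on a regular grid over the unit square.
grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
print(f"surrogate fidelity: {fidelity(black_box, surrogate, grid):.2f}")
```

A one-threshold rule is easy to read but may disagree with the model in some regions; adding more rules would typically raise fidelity at the cost of readability, which is the trade-off in miniature.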

Specific Challenges in ML Lifecycle Phases

- Design Phase: Challenges include ensuring data quality and enabling ethical data sharing, particularly through privacy-preserving methods.

- Development Phase: Focuses on incorporating domain knowledge into AI models, developing debugging techniques, and improving comparability of models through interpretability.

- Deployment Phase: Addresses post-deployment considerations such as maintaining system explainability while ensuring security and privacy. Furthermore, the integration of ontologies with XAI methods is suggested as a way to enhance comprehension.
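For the development-phase goal of better debugging techniques, one widely used model-agnostic tool is permutation feature importance. This is not necessarily a method the authors single out. It measures how much accuracy drops when a single feature column is shuffled, and an unexpectedly important feature (for example, an ID column) can reveal that a model relies on a spurious shortcut. The sketch below assumes a plain classifier callable and list-of-lists data; `toy_model` and the data are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]

def accuracy(model: Model, X, y) -> float:
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(model: Model, X, y, n_repeats=5, seed=0) -> List[float]:
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)  # seeded for reproducible shuffles
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(base - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical toy model that only ever looks at feature 0.
def toy_model(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)

X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(x) for x in X]
importances = permutation_importance(toy_model, X, y)
print("importance per feature:", [round(v, 3) for v in importances])
```

Because `toy_model` ignores feature 1, shuffling that column cannot change any prediction, so its importance is zero. A nonzero value for a feature that domain knowledge says should be irrelevant would be a signal worth debugging.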

Future Opportunities and Implications

The paper outlines several future research directions, including causal and counterfactual explanations, machine-to-machine explanations, and the integration of XAI into reinforcement learning. Moreover, deploying AI systems in real-world settings such as autonomous systems and healthcare calls for more robust explanation methods that adapt dynamically to user needs and domain-specific challenges.
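To illustrate what a counterfactual explanation answers ("what is the smallest change to this input that would change the decision?"), the sketch below handles the simplest case: a linear classifier, where the minimal L2 change has a closed form because moving along the weight vector changes the score fastest. This is an assumption-laden toy, not a method from the paper; counterfactual methods for complex models typically rely on optimization and add constraints such as plausibility or actionability. The weights, features, and function names are hypothetical.

```python
from typing import List, Sequence

def decision_score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear decision function w . x + b; class 1 if the score is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def linear_counterfactual(w: Sequence[float], b: float, x: Sequence[float],
                          margin: float = 1e-3) -> List[float]:
    """Smallest L2 change to x that moves its score just across the boundary."""
    norm_sq = sum(wi * wi for wi in w)
    if norm_sq == 0:
        raise ValueError("weight vector must be non-zero")
    score = decision_score(w, b, x)
    # Target score just across the boundary, on the opposite side from x.
    target = -margin if score > 0 else margin
    # score(x + t*w) = score + t * ||w||^2, so solve for t to hit the target.
    t = (target - score) / norm_sq
    return [xi + t * wi for xi, wi in zip(x, w)]

# Hypothetical example: scaled (income, debt) features with made-up weights.
w, b = [0.6, -0.9], -0.2
applicant = [1.0, 0.8]
cf = linear_counterfactual(w, b, applicant)
print("original score:", round(decision_score(w, b, applicant), 3))
print("counterfactual input:", [round(v, 3) for v in cf])
print("new score:", round(decision_score(w, b, cf), 3))
```

The returned point is the input nudged along `w` just far enough to flip the prediction; in the example that means slightly higher income and lower debt.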

The authors also expect the role of XAI to grow as automated ML (AutoML) solutions proliferate, and they anticipate the emergence of XAI as a service, which would make automated, user-friendly explanation tools increasingly valuable.

Conclusion

The systematic meta-survey by Saeed and Omlin gives a comprehensive account of AI explainability and its applications across domains. By distilling a broad range of challenges and future research directions, the paper is a valuable reference for XAI researchers. As AI technologies evolve, so must our approaches to ensuring their transparency, fairness, and acceptance in society. The paper captures the current state of the art and offers a roadmap for future work, encouraging the academic and industrial communities to address these challenges together.