Summary of "LAION-400M: Open Dataset of CLIP-Filtered 400 Million Image-Text Pairs"

The paper "LAION-400M: Open Dataset of CLIP-Filtered 400 Million Image-Text Pairs" addresses the lack of publicly available datasets large enough to train multi-modal language-vision models from scratch. Christoph Schuhmann and colleagues from the Large-scale Artificial Intelligence Open Network (LAION) present both the dataset and the method used to build it.

Background and Motivation

Recent advancements in multi-modal models such as CLIP and DALL-E have underscored the importance of large-scale datasets for achieving zero-shot and few-shot learning capabilities. The authors note that while the efficacy of these models in transfer learning is evident, the reliance on proprietary, large-scale datasets poses a significant barrier to widespread research and development. LAION-400M is introduced to bridge this gap by providing an openly accessible dataset that consists of 400 million image-text pairs filtered and embedded using CLIP.

Methodology

Dataset Construction

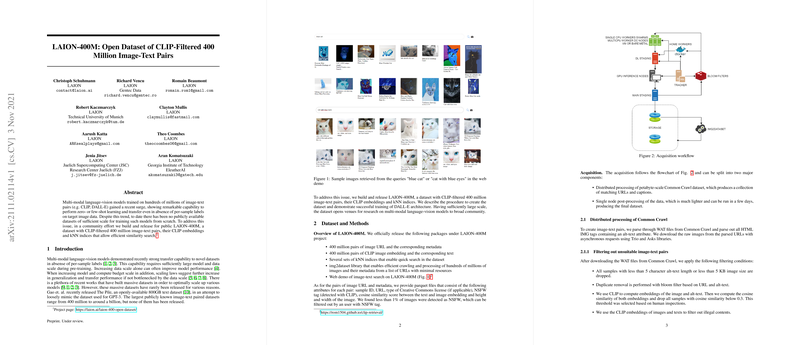

LAION-400M was built in two stages: distributed processing of the petabyte-scale Common Crawl dataset to identify candidate image-text pairs, followed by post-processing to refine and store the final dataset.

- Image-Text Pair Extraction: Image URLs and their associated alt-text were parsed from HTML IMG tags in Common Crawl's WAT files. The raw images were then downloaded with asynchronous requests.

- Filtering Criteria: Several filters removed unsuitable pairs:

  - Alt-texts shorter than 5 characters and images smaller than 5 KB were discarded.

  - Duplicates were removed using a bloom filter on URLs and alt-texts.

  - Pairs whose CLIP image and text embeddings had a cosine similarity below 0.3 were discarded, so that only semantically matching pairs remained.

  - CLIP embeddings were additionally used to exclude illegal content.

- Post-Processing: The filtered pairs were post-processed with the img2dataset library, which downloads the images, resizes them, and stores the image-text pairs in webdataset format. This keeps large-scale data handling feasible with modest computational resources.
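
The filtering criteria above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' pipeline: the `BloomFilter` class, the `keep_pair` function, and the dictionary keys (`url`, `text`, `image_bytes`, `image_emb`, `text_emb`) are hypothetical names, the embeddings are assumed to have been computed by CLIP beforehand, and combining URL and alt-text into a single deduplication key is an assumption. The illegal-content filter is omitted.

```python
import hashlib
import math


class BloomFilter:
    """Tiny Bloom filter: may give false positives, never false negatives."""

    def __init__(self, num_bits=1 << 20, num_hashes=5):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, key):
        # Derive several bit positions by salting a SHA-256 hash of the key.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, key):
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key)
        )


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


MIN_ALT_TEXT_CHARS = 5
MIN_IMAGE_BYTES = 5 * 1024  # assumption: 1 KB is taken as 1024 bytes
MIN_SIMILARITY = 0.3


def keep_pair(pair, seen):
    """Return True if the pair passes the length, size, duplicate, and similarity checks."""
    if len(pair["text"]) < MIN_ALT_TEXT_CHARS:
        return False
    if pair["image_bytes"] < MIN_IMAGE_BYTES:
        return False
    key = pair["url"] + "\n" + pair["text"]  # assumption: one combined key
    if key in seen:
        return False
    seen.add(key)
    # Drop pairs whose image and text embeddings are not similar enough.
    return cosine_similarity(pair["image_emb"], pair["text_emb"]) >= MIN_SIMILARITY
```

A Bloom filter suits this step because it answers "have I seen this key?" in fixed memory, at the cost of occasionally discarding a pair that was not actually a duplicate.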

Dataset and Features

LAION-400M includes:

- 400 million image URL and metadata pairs.

- Corresponding CLIP image embeddings and text embeddings.

- kNN indices for efficient similarity searches.

- A web demo illustrating the dataset's utility in semantic search functions.
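
To illustrate what a similarity search over the released embeddings involves, the following sketch ranks stored vectors by cosine similarity to a query vector. It is a brute-force stand-in under stated assumptions, not the format or algorithm of the released kNN indices; `normalize` and `knn` are hypothetical helper names, and the two-dimensional vectors are toy data in place of real CLIP embeddings.

```python
import heapq
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def knn(query, embeddings, k=3):
    """Return the k (similarity, index) pairs most similar to the query, best first."""
    q = normalize(query)
    scored = (
        (sum(a * b for a, b in zip(q, normalize(emb))), idx)
        for idx, emb in enumerate(embeddings)
    )
    return heapq.nlargest(k, scored)


# Toy data standing in for CLIP image embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
top = knn([1.0, 0.0], embeddings, k=2)
```

Because CLIP places images and text in a shared embedding space, the query vector could in principle come from either a caption or an image. At the scale of 400 million vectors, an exhaustive scan like this one becomes slow, which is the motivation for precomputed indices.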

Because LAION-400M spans a range of image sizes, researchers can build custom subsets tailored to the requirements of a specific model, for example by keeping only images above a chosen resolution.
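
As a rough illustration of building such a subset, the sketch below keeps only metadata rows whose images meet a minimum resolution. The `select_subset` function and the `WIDTH`, `HEIGHT`, `URL`, and `TEXT` field names are assumptions made for this example and should be checked against the actual metadata files.

```python
def select_subset(rows, min_width=256, min_height=256):
    """Keep rows whose image is at least min_width x min_height pixels."""
    return [
        row
        for row in rows
        # Rows with missing dimensions are treated as too small.
        if (row.get("WIDTH") or 0) >= min_width
        and (row.get("HEIGHT") or 0) >= min_height
    ]


# Hypothetical metadata rows for illustration only.
rows = [
    {"URL": "http://example.com/a.jpg", "TEXT": "a red bicycle", "WIDTH": 640, "HEIGHT": 480},
    {"URL": "http://example.com/b.jpg", "TEXT": "a small icon", "WIDTH": 64, "HEIGHT": 64},
    {"URL": "http://example.com/c.jpg", "TEXT": "unknown size", "WIDTH": None, "HEIGHT": None},
]
subset = select_subset(rows, min_width=256, min_height=256)
```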

Evaluation and Results

The authors validated LAION-400M empirically by training a DALL-E model on a subset of approximately 7.2 million samples. Despite the small subset and the limited number of epochs, training progressed well and the generated samples were of acceptable quality. These findings suggest that the dataset can support large-scale, high-quality training of language-vision models.

Implications and Future Work

The release of LAION-400M opens up access to data at a scale previously available only in proprietary datasets. This benefits the research community by enabling extensive empirical studies and experimentation with multi-modal models. It may also improve understanding of data scaling laws and their effect on model performance.

Future work might involve:

- Enhancing the dataset through incremental updates and the inclusion of more diverse image-text pairs.

- Investigating the interplay between dataset characteristics (e.g., image resolution, text complexity) and model performance.

- Expanding the application scope of LAION-400M for different multi-modal architectures beyond CLIP and DALL-E.

Conclusion

The authors of the LAION-400M dataset have made a consequential contribution to the field of multi-modal models by addressing the scarcity of publicly available, large-scale datasets. This open dataset not only facilitates extensive research but also underscores the value of community-led initiatives in advancing the frontiers of AI.