Analysis of "Salient Phrase Aware Dense Retrieval: Can a Dense Retriever Imitate a Sparse One?"

Dense and sparse retrievers are both central to information retrieval in NLP, and each has distinct strengths. This paper introduces the Salient Phrase Aware Retriever (SPAR), a dense retriever that acquires the lexical matching ability of sparse models. In doing so, it challenges the prevalent belief that dense retrievers inherently struggle to capture rare entities and salient phrases.

Overview of the Paper

Background and Motivation:

Dense retrieval models have become a fixture of NLP because they learn semantic representations and mitigate vocabulary mismatch, where a query and a relevant passage use different words for the same thing. However, dense retrievers such as dual-encoder models struggle to match specific or rare phrases in queries. Sparse retrievers such as BM25 show the opposite pattern: their bag-of-words representations excel at exact lexical matching but capture little semantic similarity. The authors propose SPAR to combine the semantic matching of dense retrieval with the lexical matching of sparse retrieval.
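To make "lexical matching" concrete, here is a minimal Okapi BM25 scorer in pure Python. It is an illustrative sketch, not the teacher implementation used in the paper: the naive lowercase whitespace tokenizer, the Lucene-style IDF variant, and the defaults `k1=1.2` and `b=0.75` are assumptions chosen for readability. Note how a rare term receives a larger IDF weight than a common one, which is exactly the kind of salient-phrase signal that dense retrievers tend to miss.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each document in `docs` against `query` with Okapi BM25.

    Tokenization is a naive lowercase whitespace split (an assumption
    made for illustration; real systems use proper analyzers).
    """
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for t in tokenized for term in set(t))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # bag-of-words: unmatched terms contribute nothing
            # Rarer terms get a larger inverse document frequency weight.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores
```

For example, `bm25_scores("zygomorphic bees", ["the river flows north", "zygomorphic flowers attract bees", "bees make honey"])` ranks the second document first, gives the third a smaller positive score, and gives the first a score of zero because it shares no terms with the query.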

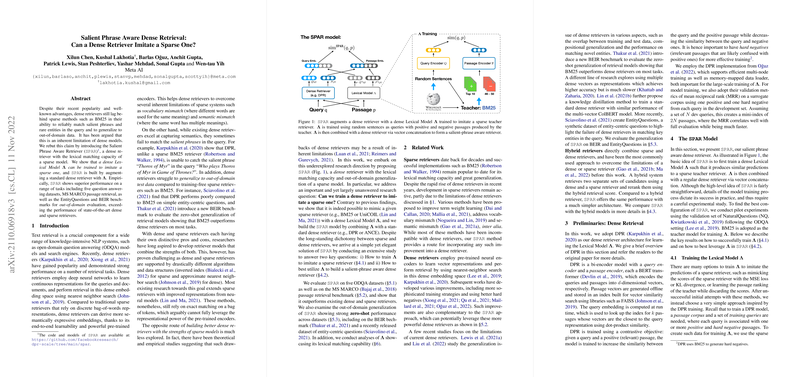

Architecture of SPAR:

SPAR introduces a dense Lexical Model trained to imitate a sparse teacher model. The authors use DPR (Dense Passage Retriever) as the base dense retriever. The Lexical Model is itself a dense encoder, trained on examples derived from the retrieval results of a sparse teacher such as BM25 or UniCOIL, so that it learns to reproduce the teacher's lexical matching behavior. The final SPAR model concatenates the DPR and Lexical Model embeddings into a single vector, so that one inner product captures both semantic and lexical similarity.
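The teacher-student idea can be sketched as follows. This is a hypothetical illustration, not the authors' training code: it assumes the teacher's top-ranked passage serves as the positive, that passages from a lower rank window serve as hard negatives, and that the Lexical Model is trained with a DPR-style contrastive objective. The helper names `build_lexical_training_example` and `contrastive_loss`, and the `neg_start`/`num_negatives` defaults, are invented for this sketch.

```python
import math


def build_lexical_training_example(query, teacher_ranking, num_negatives=2, neg_start=10):
    """Turn a sparse teacher's ranked passage list into one training example.

    Hypothetical scheme: the teacher's top-1 passage is the positive, and
    passages starting at rank `neg_start` are used as hard negatives.
    """
    positive = teacher_ranking[0]
    negatives = teacher_ranking[neg_start:neg_start + num_negatives]
    return {"query": query, "positive": positive, "negatives": negatives}


def contrastive_loss(pos_score, neg_scores):
    """Negative log-likelihood of the positive passage under a softmax over
    the positive and negative scores (a DPR-style objective)."""
    all_scores = [pos_score] + list(neg_scores)
    m = max(all_scores)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in all_scores))
    return log_z - pos_score
```

In training, the scores fed to `contrastive_loss` would be inner products between the student's query and passage embeddings; minimizing the loss pushes the student to rank passages the way the sparse teacher does.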

Empirical Evaluation:

SPAR was evaluated on several tasks, including open-domain question answering benchmarks such as NaturalQuestions and the MS MARCO passage retrieval task, where it outperformed existing dense, sparse, and hybrid retrievers. Notably, on SQuAD, a benchmark on which dense retrievers had previously trailed sparse ones, SPAR closed the gap. SPAR also generalized well out of domain, outperforming both dense and sparse retrievers in zero-shot evaluation on the BEIR benchmark and EntityQuestions.
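Benchmarks like these are commonly scored with simple rank-based metrics: top-k retrieval accuracy is the usual convention for the open-domain QA datasets, and MRR@10 for MS MARCO passage ranking. The sketch below assumes relevance has already been judged per ranked passage (for example, whether a passage contains an answer string); the function names are illustrative and not taken from any evaluation toolkit.

```python
def top_k_accuracy(ranked_hits, k):
    """Fraction of queries with at least one relevant passage in the top k.

    `ranked_hits[i]` is a list of booleans, one per retrieved passage in rank
    order, saying whether that passage is relevant for query i.
    """
    return sum(any(hits[:k]) for hits in ranked_hits) / len(ranked_hits)


def mrr_at_k(ranked_hits, k=10):
    """Mean reciprocal rank of the first relevant passage, cut off at rank k.

    Queries with no relevant passage in the top k contribute 0.
    """
    total = 0.0
    for hits in ranked_hits:
        for rank, is_relevant in enumerate(hits[:k], start=1):
            if is_relevant:
                total += 1.0 / rank
                break
    return total / len(ranked_hits)
```

For three queries whose first relevant passage appears at rank 2, rank 1, and not at all, top-1 accuracy is 1/3, top-2 accuracy is 2/3, and MRR@10 is (1/2 + 1 + 0) / 3 = 0.5.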

Key Findings

- Successful Lexical Mimicking: SPAR's Lexical Model reproduces the lexical matching behavior of its sparse teacher, which makes SPAR well suited to retrieval tasks that demand lexical precision.

- Performance Superiority: SPAR's dense architecture, augmented with sparse-like matching, outperforms standalone dense or sparse models and matches or exceeds more complex hybrid systems across benchmarks.

- Efficient and Simple Architecture: Unlike hybrid systems, which must maintain separate sparse and dense retrieval pipelines, SPAR keeps the simplicity and efficiency of dense retrieval: storage and retrieval run on a single FAISS index.
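Concatenation is what allows a single index: the inner product of two concatenated vectors equals the sum of the inner products of their parts, so scaling the query's lexical half by a weight turns one dot product into a weighted sum of dense and lexical similarity. The pure-Python sketch below assumes the weight is applied on the query side only; the helper names and the `weight` parameter are illustrative, and the brute-force `search` merely stands in for a FAISS inner-product index.

```python
def concat_query(dense_q, lexical_q, weight=1.0):
    """Query-side vector: [dense; weight * lexical]."""
    return list(dense_q) + [weight * x for x in lexical_q]


def concat_passage(dense_p, lexical_p):
    """Passage-side vector: [dense; lexical]. The weight lives on the query
    side, so passages can be indexed once and the weight tuned afterwards."""
    return list(dense_p) + list(lexical_p)


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def search(query_vec, index, top_k=2):
    """Brute-force maximum-inner-product search over one flat index
    (a stand-in for a single FAISS inner-product index)."""
    scored = sorted(((dot(query_vec, p), pid) for pid, p in index.items()), reverse=True)
    return [pid for _, pid in scored[:top_k]]
```

With `index = {"a": concat_passage([1.0, 0.0], [0.0, 0.0]), "b": concat_passage([0.0, 1.0], [1.0, 0.0])}`, a query whose dense half favors `a` but whose lexical half favors `b` retrieves `b` first when the lexical weight is large and `a` first when the weight is zero. Because only the query is reweighted, this trade-off can be adjusted without re-encoding the passages.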

Implications for Future Work

SPAR shows that dense retrievers can be trained to match salient phrases and rare entities, challenging the notion that these are inherent limitations of dense models. A natural next step is to train dense models with these abilities without relying on a sparse teacher, which could yield methods that are no longer bounded by the limitations of sparse retrieval.

Future work could also investigate the reasons behind some of SPAR's unexpected properties, such as its gains over hybrid models and the finding that post-hoc vector concatenation worked better than joint training. Such insights might lead to retrieval systems that combine sparse and dense strengths without sacrificing computational efficiency or simplicity.

Overall, SPAR is a step forward for dense retrieval and points toward more robust and versatile information retrieval systems. Through comprehensive experiments and analysis, the paper makes a substantial contribution to research on retrieval architectures and their practical use in NLP.