An Examination of Recursive Summarization with Human Feedback

The paper "Recursively Summarizing Books with Human Feedback" presents a method for summarizing book-length texts that combines machine learning with human feedback. The research focuses on abstractive summarization of fiction novels, where the challenge is to produce summaries that are coherent and representative of the source material. The paper treats this task as a testbed for scalable oversight, pairing recursive task decomposition with human-in-the-loop training of fine-tuned GPT-3 models.

Methodology

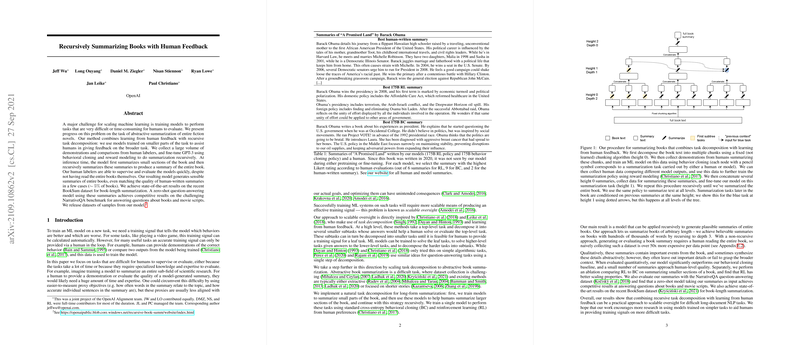

The authors propose a recursive summarization framework. A book is split into smaller sections, each section is summarized independently, and the resulting intermediate summaries are then recursively combined and summarized again until a single summary of the whole book remains. This decomposition lets the model focus on local context before synthesizing those details into a broader narrative. It also keeps the process manageable for human supervisors, because each subtask covers only a short passage that a person can read and judge without reading the entire book.
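The recursion described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes fixed-size word chunks, the helper names `chunk` and `recursive_summarize` are illustrative, and `first_words` is a hypothetical stand-in for the fine-tuned summarization model (it simply keeps the first few words of its input).

```python
from typing import Callable, List


def chunk(text: str, max_words: int) -> List[str]:
    """Split text into consecutive pieces of at most max_words words each."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def recursive_summarize(
    text: str, summarize: Callable[[str], str], max_words: int = 200
) -> str:
    """Summarize each chunk, join the summaries, and repeat until one chunk remains."""
    pieces = chunk(text, max_words)
    if len(pieces) <= 1:
        # Base case: the remaining text fits in a single summarization call.
        return summarize(text)
    # Each section is summarized independently of the others.
    combined = " ".join(summarize(piece) for piece in pieces)
    # The recursion only terminates if every level shortens the text.
    if len(combined.split()) >= len(text.split()):
        raise ValueError("summarize must shorten its input for the recursion to end")
    # The joined summaries become the input to the next, higher level.
    return recursive_summarize(combined, summarize, max_words)


def first_words(passage: str, n: int = 10) -> str:
    """Hypothetical stand-in for a learned summarizer: keep the first n words."""
    return " ".join(passage.split()[:n])
```

With a 1,000-word input and `max_words=200`, the first level produces five 10-word summaries; together they fit in one chunk, so the second level returns the final summary. The compression check is there because the recursion only ends if each level actually shortens the text.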

The method relies heavily on human feedback. Human labelers provide two kinds of data: demonstrations (summaries they write themselves), which are used for behavioral cloning, and comparisons between candidate summaries, which train a reward model that the summarization policy is then optimized against with reinforcement learning (RL). This human-in-the-loop approach supports scalable oversight: the model is trained not only on raw data but also on human guidance that aligns its outputs with human judgment.
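How comparisons become a training signal can be illustrated with the pairwise loss commonly used to train reward models from human preferences: the reward model should score the summary a labeler preferred higher than the one they rejected. This sketch assumes that standard Bradley-Terry-style objective, -log(sigmoid(r_preferred - r_rejected)); the paper's exact training details may differ, and `pairwise_reward_loss` and `softplus` are hypothetical helpers that operate on scores a reward model would produce.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """log(1 + exp(x)), written so that exp never overflows."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_reward_loss(
    r_preferred: Sequence[float], r_rejected: Sequence[float]
) -> float:
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over a batch of comparisons.

    Each pair holds the reward-model scores for the summary a labeler preferred
    and the one they rejected (hypothetical inputs for illustration).
    """
    if len(r_preferred) != len(r_rejected) or len(r_preferred) == 0:
        raise ValueError("expected two non-empty score lists of equal length")
    total = 0.0
    for good, bad in zip(r_preferred, r_rejected):
        # -log(sigmoid(m)) == softplus(-m): small when the preferred summary wins by a wide margin.
        total += softplus(-(good - bad))
    return total / len(r_preferred)
```

The loss equals log 2 when both summaries receive the same score and falls toward zero as the preferred summary's margin grows, which is what pushes the reward model toward agreement with the human comparisons.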

Results

The experimental results support the effectiveness of this recursive approach. The model achieved state-of-the-art results on the BookSum dataset for book-length summarization, and a zero-shot question-answering model that uses its summaries achieved competitive results on the NarrativeQA benchmark. In human evaluations, roughly 5% of the model's book summaries were rated as matching the quality of human-written summaries. These findings highlight the potential of combining task decomposition, human feedback, and reinforcement learning to improve machine comprehension on tasks that demand high-level understanding.

Discussion and Implications

This research marks a significant advance in large-scale abstractive summarization, achieved by handling long content through strategic decomposition and feedback-driven refinement. The recursive design shows promise beyond books, in other domains that require processing long documents in depth. The use of human feedback during training also illustrates one path toward scalable and efficient machine learning. Models built with this framework could be applied broadly, from automated assistance in literary analysis to conversational agents with deep contextual awareness.

Because the method depends on human feedback, its results may be hard to reproduce when the quality of that feedback varies. The scalability advantage is likewise limited by the need for high-quality human input, which suggests a future direction: reducing human involvement by improving the model's ability to evaluate its own outputs and learn from them.

Future Directions

Further development of this recursive approach could include training on more diverse datasets that extend beyond fiction, broadening the contexts in which the model is effective. Research could also optimize the task decomposition itself, for example by having models determine effective decomposition strategies on their own. Another open problem is balancing the model's scalability with fidelity to intricate narrative elements, so that summaries do not merely condense the plot but also capture a narrative's implicit subtleties.

In conclusion, this paper contributes significantly to the AI field, particularly within natural language processing, by establishing a recursive summarization framework that integrates human-like discernment in processing complex textual information. It opens new avenues for research in generating succinct and meaningful summaries from extensive texts while simultaneously enriching our understanding of practical AI-human collaboration mechanisms.