An Analysis of Interpretable Directed Diversity for Enhanced Crowd Ideation

Introduction

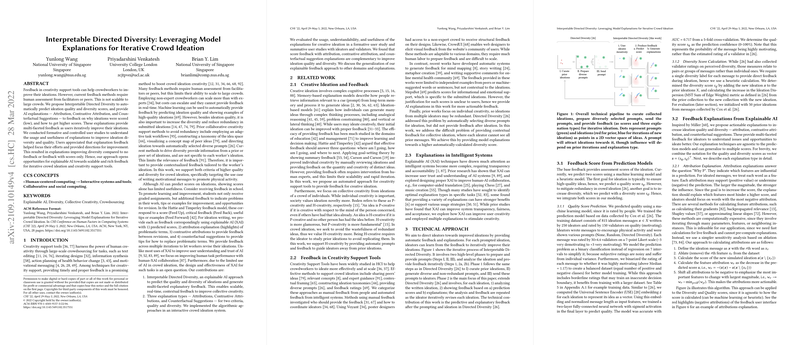

The paper "Interpretable Directed Diversity: Leveraging Model Explanations for Iterative Crowd Ideation" examines a scalability limitation of feedback in current creativity support tools: feedback usually depends on human assessment, which is hard to deliver in real time to large crowds. As an automated alternative, it introduces Interpretable Directed Diversity (IDD), which predicts the quality and diversity of each ideation and uses explainable AI (XAI) to explain those predictions. The explanations take three forms (Attributions, Contrastive Attributions, and Counterfactual Suggestions) and are meant to guide ideators in crowdsourcing environments as they iteratively improve their ideas.

Key Features of Interpretable Directed Diversity

The method proceeds in phases: prompts are prepared and shown to ideators, and each submitted ideation is then analyzed and returned with feedback, over several iterations. The feedback rests on two measured criteria:

- Quality Score Prediction: A binary classifier built on Universal Sentence Encoder (USE) embeddings predicts whether an idea is highly motivating. The model is trained on existing labeled data, and its confidence for the "highly motivating" class serves as the idea's quality score.

- Diversity Score Calculation: To discourage redundant ideas, diversity is computed with heuristic measures of ideation dispersion, reflecting how spread out the ideas are in the embedding space.
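
The two scores can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the Universal Sentence Encoder is replaced by a hypothetical hashed bag-of-words `embed` function so the code runs without downloading a model, the quality classifier is a plain logistic regression trained on made-up toy labels, and `diversity_score` uses the mean cosine distance to earlier ideas as one plausible dispersion heuristic (the paper's exact formula may differ). All function names are illustrative.

```python
import hashlib
import math

DIM = 64  # hypothetical size; the real Universal Sentence Encoder outputs 512-d vectors


def embed(text: str) -> list[float]:
    """Toy stand-in for USE: a hashed bag of words, L2-normalised to unit length."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        # md5 gives a stable bucket across runs (the built-in hash() is salted per process).
        idx = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_quality_model(texts, labels, epochs=200, lr=0.5):
    """Logistic regression on embeddings, fit with plain stochastic gradient descent."""
    w, b = [0.0] * DIM, 0.0
    for _ in range(epochs):
        for text, y in zip(texts, labels):
            x = embed(text)
            grad = sigmoid(dot(w, x) + b) - y  # derivative of log-loss w.r.t. the logit
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


def quality_score(text, model) -> float:
    """Confidence that the idea belongs to the 'highly motivating' class."""
    w, b = model
    return sigmoid(dot(w, embed(text)) + b)


def diversity_score(text, existing) -> float:
    """Dispersion heuristic: mean cosine distance from the new idea to earlier ideas."""
    if not existing:
        return 1.0  # convention: the first idea counts as maximally novel
    x = embed(text)
    # Embeddings are unit-length, so the dot product equals the cosine similarity.
    return sum(1.0 - dot(x, embed(o)) for o in existing) / len(existing)


if __name__ == "__main__":
    # Made-up toy labels (1 = motivating), only to exercise the code.
    model = train_quality_model(
        ["walking with friends feels great", "you must exercise now"], [1, 0]
    )
    print(quality_score("a walk with friends", model))
    print(diversity_score("dance at home", ["walk in the park", "take the stairs"]))
```

In a real system, `embed` would call USE and the classifier would be trained on rated messages; the scoring logic around it would stay the same.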

Explanation Strategies

Three primary explanation types are provided to ideators:

- Attribution Explanations: These focus on identifying specific word features influencing prediction scores, highlighting those detracting from ideation quality or diversity.

- Contrastive Attribution Explanations: These compare successive iterations of an ideation message to give feedback on the edits made, highlighting changes that improved the scores.

- Counterfactual Suggestions: These propose replacement words drawn from the ConceptNet knowledge graph, chosen to increase the predicted scores and give ideators concrete directions for revision.
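
The three explanation types can be illustrated against any function that maps text to a score. The sketch below is hypothetical throughout: `toy_score` uses made-up per-word weights in place of a trained model, attributions are computed by leave-one-word-out occlusion (a simple stand-in; the paper's attribution method may differ), contrastive attribution is taken as the per-word change in attribution between two versions, and `TOY_RELATED` is a small hand-written dictionary standing in for ConceptNet lookups.

```python
import math
from typing import Callable

# Hypothetical per-word weights standing in for a trained quality model.
TOY_WEIGHTS = {"must": -1.5, "exercise": -0.5, "enjoy": 1.2, "walk": 0.8, "friends": 1.0}

# Hypothetical substitute lists standing in for ConceptNet related terms.
TOY_RELATED = {"must": ["can", "could"], "exercise": ["walk", "dance"]}

Scorer = Callable[[str], float]


def toy_score(text: str) -> float:
    """Logistic score from summed word weights (unknown words count as 0)."""
    z = sum(TOY_WEIGHTS.get(w, 0.0) for w in text.lower().split())
    return 1.0 / (1.0 + math.exp(-z))


def attributions(text: str, score: Scorer) -> list[tuple[str, float]]:
    """Occlusion attribution: how much the score drops when each word is removed.

    Negative values mark words that detract from the score.
    """
    words = text.split()
    base = score(text)
    return [
        (w, base - score(" ".join(words[:i] + words[i + 1:])))
        for i, w in enumerate(words)
    ]


def _attr_by_word(text: str, score: Scorer) -> dict[str, float]:
    totals: dict[str, float] = {}
    for w, a in attributions(text, score):
        totals[w] = totals.get(w, 0.0) + a  # repeated words are summed
    return totals


def contrastive_attributions(old: str, new: str, score: Scorer) -> dict[str, float]:
    """Per-word change in attribution from the old version to the new one.

    Positive values mean the edit involving that word helped the score.
    """
    before, after = _attr_by_word(old, score), _attr_by_word(new, score)
    words = sorted(before.keys() | after.keys())
    return {w: after.get(w, 0.0) - before.get(w, 0.0) for w in words}


def counterfactual_suggestions(
    text: str, score: Scorer, related: dict[str, list[str]]
) -> list[tuple[str, str, float]]:
    """Single-word substitutions that raise the score, largest gain first."""
    words = text.split()
    base = score(text)
    suggestions = []
    for i, w in enumerate(words):
        for alt in related.get(w.lower(), []):
            gain = score(" ".join(words[:i] + [alt] + words[i + 1:])) - base
            if gain > 0:
                suggestions.append((w, alt, gain))
    return sorted(suggestions, key=lambda s: s[2], reverse=True)


if __name__ == "__main__":
    idea = "you must exercise"
    print(attributions(idea, toy_score))
    print(contrastive_attributions(idea, "you can walk with friends", toy_score))
    print(counterfactual_suggestions(idea, toy_score, TOY_RELATED))
```

With these toy weights, `must` receives the most negative attribution, and the counterfactual search proposes swaps for exactly the words that pull the score down.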

Evaluation and Findings

The paper reports a formative study and a summative study. Both examine how ideators interact with feedback interfaces that combine the XAI explanations with plain score feedback, in a task where crowd ideators write motivational messages that promote physical activity.

- The Formative Study found that designers valued the explanations as guidance on which direction to improve an idea, but some struggled to apply certain suggestions without the message losing its contextual relevance.

- The Summative Ideation Study found that AI explanations significantly improved both the diversity and the quality of ideations compared with a baseline that gave no AI-driven feedback.

- Validation by human judges confirmed the increase in diversity, but quality showed no significant improvement beyond what simple score predictions already achieved.

Practical and Theoretical Implications

Unlike feedback that depends on human assessors, this approach provides contextual, automated feedback that scales with task size and complexity. In practice, this can support large-scale collaborative ideation and reduce the workload on facilitators.

Theoretically, the work frames explainability in AI not only as a tool for debugging or understanding a system but also as a creativity aid, showing how multiple explanation methods can jointly support human cognition in novel task domains.

Speculation on Future Developments

The approach could be extended beyond text to domains such as graphical or conceptual design, using more advanced embeddings and domain-specific models. The relevance of the XAI explanations could also be improved, especially the semantic alignment of generated suggestions, which may call for hybrid AI-human feedback systems.

In conclusion, by combining Directed Diversity with explainable AI, this research offers a framework for enhancing crowd creativity and opens new avenues for studying the interplay between AI and human ideation.