Learning to Prompt for Vision-Language Models

This paper, by Kaiyang Zhou, Jingkang Yang, Chen Change Loy, and Ziwei Liu of S-Lab, Nanyang Technological University, Singapore, addresses a practical obstacle to adapting large pre-trained vision-language models such as CLIP to downstream tasks: the need for prompt engineering. The proposed solution, Context Optimization (CoOp), learns task-specific prompts efficiently from data. This bypasses manual prompt engineering, which is time-consuming and offers no guarantee of optimal performance.

Focus of the Research

Traditional visual recognition systems train vision models to predict a fixed set of discrete labels. This limits their ability to handle open-set visual concepts, because recognizing a new category requires additional labeled data. Vision-language models instead align images and textual descriptions in a common feature space, which enables zero-shot transfer to a variety of downstream tasks. In practice, however, deploying such models requires prompt engineering, a process that demands significant domain expertise and extensive tuning of the prompt's wording to reach good performance.

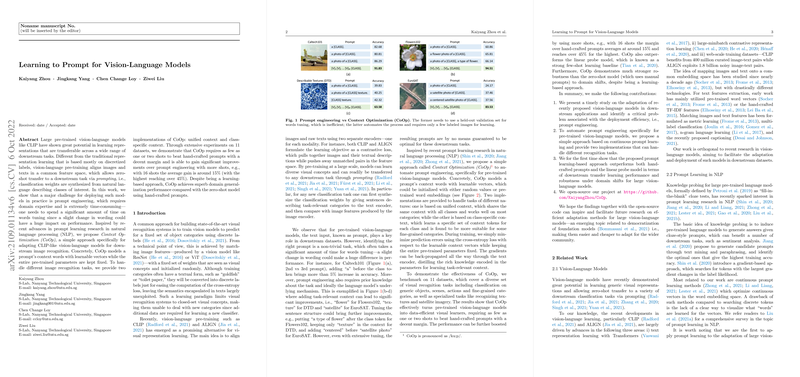

The Proposed Approach: Context Optimization (CoOp)

CoOp aims to automate prompt engineering for vision-language models such as CLIP. It models a prompt's context words with learnable vectors while keeping all pre-trained parameters fixed, thereby adapting the model to downstream image recognition tasks. The paper details two implementations:

- Unified Context: This approach shares the same context vectors across all classes.

- Class-Specific Context (CSC): This method assigns a separate set of context vectors to each class, which is beneficial for fine-grained classification tasks.
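The difference between the two layouts can be made concrete with a small sketch in plain Python. It is an illustration under stated assumptions, not the authors' code: each class name is treated as a single token embedding (real class names can span several tokens), the class token is placed at the end of the prompt, the sizes are toy values, and the helper names (`build_prompts`, `num_learnable_params`) are hypothetical. With K classes, M context tokens, and embedding width D, unified context learns M × D numbers while CSC learns K × M × D.

```python
import random

# Hypothetical toy sizes; CLIP's real token embeddings are far wider.
EMBED_DIM = 4    # D, width of each token embedding
NUM_CONTEXT = 4  # M, number of learnable context tokens per prompt


def random_vector(dim, rng, std=0.02):
    """Zero-mean Gaussian vector, used here to initialize a context token."""
    return [rng.gauss(0.0, std) for _ in range(dim)]


def build_prompts(class_embeddings, num_context, class_specific, rng):
    """Return one token sequence per class, laid out as [V]_1 ... [V]_M [CLASS].

    class_embeddings: frozen word embeddings of the class names (one vector each).
    Unified context: one list of M context vectors is shared by every class.
    Class-specific context (CSC): every class gets its own M context vectors.
    """
    if class_specific:
        contexts = [[random_vector(EMBED_DIM, rng) for _ in range(num_context)]
                    for _ in class_embeddings]
    else:
        shared = [random_vector(EMBED_DIM, rng) for _ in range(num_context)]
        contexts = [shared] * len(class_embeddings)  # same list object for all classes
    # Context tokens first, class-name token last.
    prompts = [ctx + [cls] for ctx, cls in zip(contexts, class_embeddings)]
    return prompts, contexts


def num_learnable_params(contexts):
    """Count learnable context parameters, counting a shared context only once."""
    unique = {id(ctx): ctx for ctx in contexts}
    return sum(len(ctx) * len(ctx[0]) for ctx in unique.values())


if __name__ == "__main__":
    rng = random.Random(0)
    class_embs = [random_vector(EMBED_DIM, rng, std=1.0) for _ in range(10)]
    _, unified = build_prompts(class_embs, NUM_CONTEXT, class_specific=False, rng=rng)
    _, csc = build_prompts(class_embs, NUM_CONTEXT, class_specific=True, rng=rng)
    print(num_learnable_params(unified), num_learnable_params(csc))  # 16 160
```

Sharing one list object is what makes the unified variant "unified": an update to a context vector affects every class prompt at once, whereas CSC multiplies the parameter count by the number of classes.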

CoOp learns the context vectors by minimizing a standard cross-entropy classification loss. Gradients are back-propagated through the frozen text encoder, so only the context vectors are updated. Training uses only a few labeled examples (shots) per class, and the learned prompts outperform manually crafted ones.
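The training objective can be sketched in a few dozen lines. Following the CLIP-style formulation, the probability of class i is a softmax over cosine similarities, p(y = i | x) = exp(cos(g(t_i), f) / τ) / Σ_j exp(cos(g(t_j), f) / τ), where t_i is the prompt for class i, g is the text encoder, f is the image feature, and τ is a temperature. Everything else below is an assumption made to keep the sketch small and dependency-free: `text_encoder` is a hypothetical stand-in (mean pooling plus a fixed linear map) for CLIP's Transformer, τ = 0.07 is an illustrative value, image features are random placeholders, and gradients are estimated by central finite differences rather than back-propagation.

```python
import math
import random

TAU = 0.07  # illustrative temperature; CLIP learns its own value


def text_encoder(tokens, proj):
    """Hypothetical frozen text encoder: mean-pool token vectors, then a fixed linear map."""
    dim = len(tokens[0])
    pooled = [sum(tok[d] for tok in tokens) / len(tokens) for d in range(dim)]
    return [sum(w * p for w, p in zip(row, pooled)) for row in proj]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def class_probabilities(context, class_embs, image_feat, proj, tau=TAU):
    """Softmax over classes of cos(g(t_i), f) / tau, where t_i = context + [class_i]."""
    logits = [cosine(text_encoder(context + [c], proj), image_feat) / tau for c in class_embs]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(context, batch, class_embs, proj):
    """Mean negative log-probability of the true label over (image_feat, label) pairs."""
    return sum(-math.log(class_probabilities(context, class_embs, f, proj)[y])
               for f, y in batch) / len(batch)


def train_context(context, batch, class_embs, proj, lr=0.005, steps=100, eps=1e-5):
    """Gradient descent on the shared (unified) context vectors only.

    class_embs and proj are never modified, mirroring CoOp's frozen pre-trained weights.
    Gradients come from central finite differences to stay dependency-free;
    CoOp itself back-propagates through the text encoder.
    """
    context = [list(vec) for vec in context]  # work on a copy
    for _ in range(steps):
        grads = []
        for vec in context:
            grad = []
            for d in range(len(vec)):
                saved = vec[d]
                vec[d] = saved + eps
                loss_up = cross_entropy(context, batch, class_embs, proj)
                vec[d] = saved - eps
                loss_down = cross_entropy(context, batch, class_embs, proj)
                vec[d] = saved
                grad.append((loss_up - loss_down) / (2 * eps))
            grads.append(grad)
        # Apply the update only after all partial derivatives are computed.
        for vec, grad in zip(context, grads):
            for d in range(len(vec)):
                vec[d] -= lr * grad[d]
    return context
```

Calling `train_context` with a handful of (feature, label) pairs per class lowers `cross_entropy` while the class embeddings and the projection stay untouched, which is the few-shot setting described above in miniature. A class-specific variant would keep one context list per class and perturb each list separately.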

Experimental Setup and Results

The effectiveness of CoOp was evaluated on 11 datasets covering a wide range of visual recognition tasks, from generic object classification to specialized tasks such as texture and satellite imagery recognition. CoOp needs as few as one or two shots to surpass hand-crafted prompts, and its advantage grows with more shots. With 16 shots, for example, the average gain over hand-crafted prompts is around 15%, with the largest gains on EuroSAT and DTD (over 45% and 20%, respectively).

Compared with a linear probe model, a known strong baseline for few-shot learning, CoOp performed better, particularly in extreme low-data regimes. Further analysis showed that unified context generally outperforms CSC on generic object and scene datasets, while CSC holds an edge on fine-grained and specialized tasks.

Domain Generalization

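One intriguing finding is CoOp's robustness to distribution shifts. In the paper's experiments, prompts learned on ImageNet were evaluated on shifted variants such as ImageNetV2, ImageNet-Sketch, ImageNet-A, and ImageNet-R, and CoOp outperformed both zero-shot CLIP and the linear probe model. The result is counterintuitive because learning-based methods usually risk overfitting to the training distribution; it suggests that the learned prompts generalize well beyond the data they were trained on.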

Implications and Future Directions

The research demonstrates that CoOp can effectively turn pre-trained vision-language models into strong few-shot learners with notable domain generalization capabilities. Automating prompt engineering not only improves efficiency but also broadens the applicability of such models across domains.

Further research could explore cross-dataset transfer, test-time adaptation, more advanced architectures, and fine-tuning methods that improve performance and robustness. Because the learned context vectors are continuous and difficult to interpret, future work might also aim to make them more interpretable and transparent.

In conclusion, this paper contributes significantly to the efficient adaptation of large vision-language models and offers a promising direction for democratizing foundation models. Its empirical evidence and insights lay a solid foundation for further advances in the field.