Overview of "EVA: An Open-Domain Chinese Dialogue System with Large-Scale Generative Pre-Training"

This paper presents a comprehensive approach to building an open-domain Chinese dialogue system named EVA, which is distinct in its utilization of a large-scale generative pre-trained model with a parameter count of 2.8 billion. The focal point of this research is the development and deployment of the largest Chinese pre-trained dialogue model, surpassing previous endeavors in both data scope and model complexity.

Key Contributions

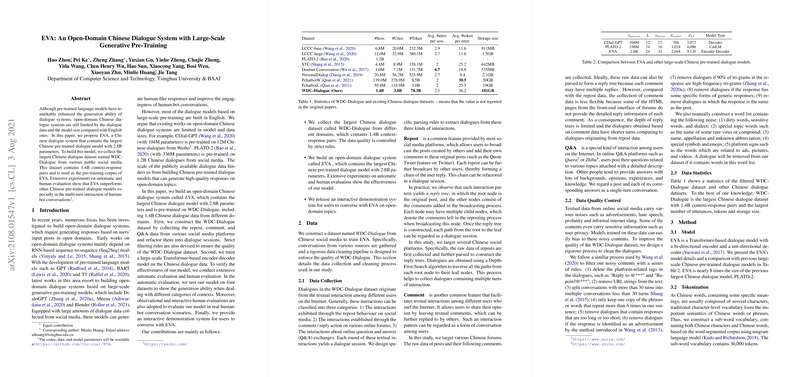

The researchers address critical limitations in data availability and model size prevalent in existing Chinese dialogue systems. They introduce a significant dataset, WDC-Dialogue, which consists of 1.4 billion context-response pairs sourced from a diverse array of public Chinese social media platforms. This dataset not only serves as a robust pre-training corpus but also stands out as the largest of its kind in Chinese dialogue arenas, emphasizing the collection, cleaning, and refinement processes employed to ensure high data quality.

The authors detail the methodological framework underpinning EVA, which involves a Transformer-based architecture with bi-directional encoder and uni-directional decoder components. Utilizing a sub-word vocabulary tailored for Chinese language idiosyncrasies, the model is trained via a sequence-to-sequence paradigm. Noteworthy is the unique data sampling strategy employed to enhance pre-training efficiency through concatenation of context-response pairs, which accommodates the maximum input limitations typical of the training process.

Experimental Results

EVA's performance is evaluated through extensive experiments incorporating both automatic metrics and human evaluations. Automatic evaluation metrics such as unigram F1, ROUGE-L, BLEU-4, and Distinct n-grams substantiate EVA's superior performance in generating relevant and diverse responses compared to baseline models such as CDial-GPT and CPM. EVA demonstrates consistently strong results across varied dialogue contexts, addressing single, multi-turn, long, and question-answering scenarios.

In terms of human evaluation, the paper presents both observational and interactive assessments. These qualitative measures reveal EVA's competence in generating coherent, specific, and contextually relevant responses, as shown by high scores in sensibleness and specificity metrics. The interactive evaluation further verifies EVA's adeptness in sustaining engaging multi-turn conversations, a haLLMark of effective dialogue systems.

Implications and Future Directions

The introduction of EVA and the associated methodologies propel the capabilities of Chinese dialogue systems significantly. This work not only fills a notable gap in the availability and scale of Chinese conversational models but also presents scalable methodologies applicable to other linguistic contexts. The robust dataset and model architecture serve as vital resources for future research and development in Chinese natural language processing.

Looking forward, the implications of this work suggest several avenues for exploration. Further refinement of dialogue models could focus on enhancing personalization and adaptive response capabilities, potentially through fine-tuning with user feedback and interactive data. Moreover, expanding the model's linguistic repertoire to include dialects or other languages could extend its applicability and utility. As EVA and similar systems are refined, they could significantly influence practical applications in customer service, content moderation, and virtual assistant technologies.

In conclusion, the paper constructs a solid foundation for advancing open-domain dialogue systems in Chinese, setting a substantial benchmark for future research endeavors in this field.