Overview of CoCoA: Contrastive Continual Learning

The paper "CoCoA: Contrastive Continual Learning" addresses catastrophic forgetting in continual learning by leveraging self-supervised contrastive learning. It is motivated by recent findings that representations learned with self-supervised methods, such as contrastive learning, transfer better and are more robust than representations trained jointly with task-specific supervision. This robustness is particularly valuable in continual learning, whose central challenge is retaining previously acquired knowledge while learning new tasks.

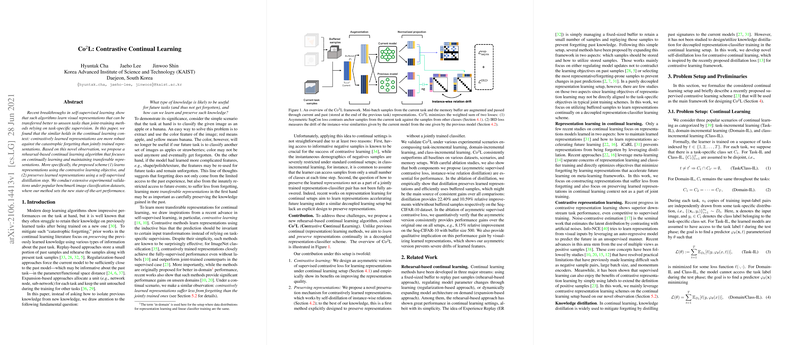

The authors introduce CoL (Contrastive Continual Learning), a rehearsal-based continual learning algorithm: it keeps a small memory buffer of samples from past tasks and replays them during training. CoL focuses on learning transferable representations and preserving them through a dedicated self-supervised distillation step. The key contributions of the CoL framework are:

- Asymmetric Supervised Contrastive (Asym SupCon) Loss: An adaptation of the supervised contrastive (SupCon) loss to the continual learning setting. Only current-task samples serve as anchors, while past-task samples drawn from the memory buffer act solely as negatives. The loss thus contrasts current-task samples against past ones and makes effective use of the limited negatives available from the buffer and the current task.

- Instance-wise Relation Distillation (IRD): A self-distillation technique that preserves the learned representations. For each sample in a batch, IRD compares its similarity to the other samples under the current model and under the model from the end of the previous task, and minimizes the drift between these instance-wise similarity relations. This keeps the learned features stable as new data arrives.
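The two losses above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: `z` is assumed to be a batch of projection-head outputs that already contains the augmented views of each image, `is_current` is a hypothetical boolean mask marking current-task samples (the rest come from the memory buffer), and the temperatures `tau`, `kappa`, and `kappa_past` are illustrative placeholders rather than values taken from the paper. IRD is written here as a cross-entropy from the past model's row-wise similarity distribution to the current model's, which is one natural way to penalize the similarity drift described above.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax_excluding_self(sim: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax over j != i (the diagonal becomes -inf)."""
    sim = sim.copy()
    np.fill_diagonal(sim, -np.inf)
    shifted = sim - sim.max(axis=1, keepdims=True)  # numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def asym_supcon_loss(z, labels, is_current, tau=0.5):
    """Asymmetric SupCon sketch: only current-task samples act as anchors.

    Buffered past-task samples still appear in every anchor's denominator,
    so they serve as negatives, but they never anchor a term themselves.
    `is_current` is a hypothetical mask; `tau` is an illustrative temperature.
    """
    z = l2_normalize(np.asarray(z, dtype=float))
    labels = np.asarray(labels)
    log_prob = log_softmax_excluding_self(z @ z.T / tau)
    n = len(labels)
    # Positives: other samples with the same label (never the anchor itself).
    positives = (labels[:, None] == labels[None, :]) & ~np.eye(n, dtype=bool)
    per_anchor = [
        -log_prob[i, positives[i]].mean()
        for i in np.flatnonzero(is_current)
        if positives[i].any()
    ]
    return float(np.mean(per_anchor)) if per_anchor else 0.0


def ird_loss(z_current, z_past, kappa=0.2, kappa_past=0.01):
    """IRD sketch: cross-entropy from the past model's instance-wise
    similarity distribution to the current model's, averaged over anchors.

    `z_past` is assumed to come from a frozen copy of the model saved at the
    end of the previous task; both temperatures are placeholders.
    """
    zc = l2_normalize(np.asarray(z_current, dtype=float))
    zp = l2_normalize(np.asarray(z_past, dtype=float))
    log_p_cur = log_softmax_excluding_self(zc @ zc.T / kappa)
    p_past = np.exp(log_softmax_excluding_self(zp @ zp.T / kappa_past))
    off_diag = ~np.eye(len(zc), dtype=bool)  # skip 0 * -inf on the diagonal
    return float(-(p_past[off_diag] * log_p_cur[off_diag]).sum() / len(zc))
```

In a training loop, one would presumably add the IRD term, scaled by a weighting hyperparameter, to the Asym SupCon loss from the second task onward, since no past model exists during the first task.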

Extensive experiments on popular image classification benchmarks demonstrate the efficacy of CoL. The method consistently achieves state-of-the-art performance across diverse experimental setups and substantially reduces catastrophic forgetting compared with baseline methods. Notably, with IRD and buffered samples, CoL yields a 22.40% accuracy improvement on Seq-CIFAR-10. These results highlight the importance of designing continual learning algorithms that not only shield representations from forgetting but also optimize them for transfer to future tasks.

Implications and Future Directions

Bringing contrastive methods into continual learning, as this paper does, opens a new avenue for exploring self-supervised approaches in varied machine learning contexts. By focusing on transferable knowledge rather than task-specific features, CoL brings the learning process closer to the ideal of human-like learning. The paper stresses that representation quality is central to mitigating forgetting and suggests that decoupling representation learning from classifier training can be fruitful.
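The decoupled approach mentioned above can be illustrated with a small sketch in which the encoder is frozen and only a linear softmax head is fitted on its features. Here `features` stands in for encoder outputs on buffered (and possibly current-task) samples, and `train_linear_head`, `predict`, the learning rate, and the epoch count are hypothetical choices for illustration; the paper's exact classifier-training protocol may differ.

```python
import numpy as np


def train_linear_head(features, labels, num_classes, lr=0.5, epochs=200):
    """Fit a softmax linear classifier on frozen features (hypothetical helper).

    Uses plain full-batch gradient descent on the mean cross-entropy loss;
    the encoder that produced `features` is never updated here.
    """
    x = np.asarray(features, dtype=float)
    y = np.eye(num_classes)[np.asarray(labels)]  # one-hot targets
    w = np.zeros((x.shape[1], num_classes))
    b = np.zeros(num_classes)
    for _ in range(epochs):
        logits = x @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - y) / len(x)  # gradient of mean CE w.r.t. logits
        w -= lr * x.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(features, w, b):
    """Return the arg-max class for each row of frozen features."""
    return np.argmax(np.asarray(features, dtype=float) @ w + b, axis=1)
```

Because the encoder stays frozen, refitting this head after each task is cheap compared with retraining the representation itself.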

While CoL shows substantial improvements, several aspects warrant further exploration: tuning IRD hyperparameters for different domains, examining the potential computational overhead of the distillation step, and evaluating other neural architectures as well as datasets and tasks beyond image classification. Extending these contrastive techniques to unsupervised or semi-supervised settings could also broaden their applicability to real-world conditions.

In conclusion, CoL is a significant step forward in continual learning, offering an effective way to retain transferable representations. As machine learning systems evolve, self-supervised frameworks like CoL are likely to play a pivotal role in designing adaptive, durable models that can keep learning as the complexity of their data and tasks grows.