Graph Transformer Networks: Learning Meta-path Graphs to Improve GNNs

Graph Neural Networks (GNNs) are widely used to learn representations of graph-structured data across many domains. Most GNNs assume that the input graph is fixed and homogeneous, which limits their effectiveness on heterogeneous graphs. Heterogeneous graphs, such as citation or movie networks, contain multiple node and edge types, and the importance of each node and edge type varies from task to task.

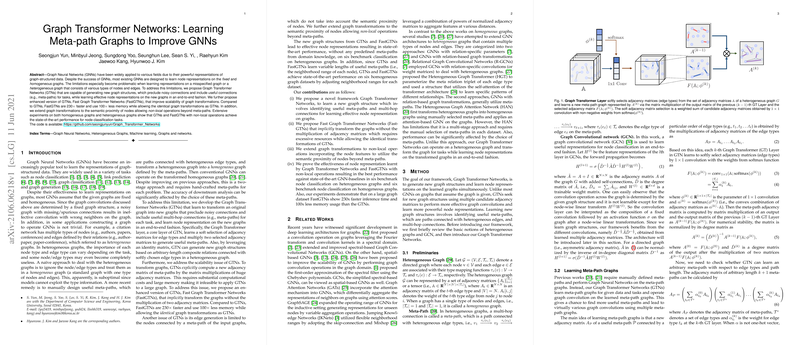

To address these challenges, the paper proposes Graph Transformer Networks (GTNs), which generate new graph structures tailored to a specific task. These structures exclude noisy connections and include task-relevant meta-paths. A meta-path, in this context, is a path formed by a sequence of edges of possibly different types (for example, author-paper-author in a citation network), and it captures relational information that a single edge type cannot.
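To make the meta-path idea concrete, here is a minimal sketch of how a two-hop meta-path can be expressed as a product of adjacency matrices. The toy graph, the variable names, and the use of small rectangular per-type matrices are assumptions made for illustration; they are not taken from the paper's code, and plain Python lists are used only to keep the example dependency-free.

```python
# Toy heterogeneous graph with 2 authors and 3 papers.
# All names and values are illustrative assumptions.

def matmul(a, b):
    """Multiply two dense matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def transpose(m):
    """Swap rows and columns of a dense matrix."""
    return [list(col) for col in zip(*m)]

# writes[i][j] == 1 if author i wrote paper j (edge type author -> paper).
writes = [
    [1, 1, 0],  # author 0 wrote papers 0 and 1
    [0, 1, 1],  # author 1 wrote papers 1 and 2
]
written_by = transpose(writes)  # edge type paper -> author

# Meta-path author -> paper -> author:
# entry (i, j) counts the papers that connect author i to author j.
apa = matmul(writes, written_by)
print(apa)  # [[2, 1], [1, 2]]
```

The off-diagonal entries show that the two authors are linked through one shared paper, a connection that neither edge type exposes on its own.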

Key Contributions

- Graph Transformer Networks (GTNs): GTNs go beyond traditional GNNs by learning to transform original graphs into new ones that feature informative meta-paths. These transformations are executed in an end-to-end fashion. The key mechanism is the Graph Transformer layer, which selects adjacency matrices via a soft attention mechanism, allowing the model to synthesize meta-paths by multiplying selected adjacency matrices.

- Fast Graph Transformer Networks (FastGTNs): FastGTNs address the scalability limitations of GTNs. GTNs explicitly compute new adjacency matrices through large matrix multiplications, whereas FastGTNs transform the graph implicitly and avoid those multiplications. The paper reports that the resulting model is 230 times faster and uses 100 times less memory.

- Extended Transformations with Non-local Operations: Recognizing the limitation of meta-path-centric transformations, the paper incorporates non-local operations. These operations enhance GTNs by facilitating transformations that consider the semantic proximity of nodes, thus enabling non-local semantic connections beyond traditional meta-paths.

Empirical Evaluation

The paper reports extensive experiments across both homogeneous and heterogeneous datasets. In node classification tasks, GTNs and FastGTNs consistently achieve state-of-the-art performance. This is attributed to their ability to learn variable-length meta-paths and effectively tune neighborhood ranges for each dataset.

Insights and Interpretability

The GTN model is interpretable through its attention scores on adjacency matrices, which reflect the importance of different meta-paths. These scores help explain the interactions within heterogeneous data and can guide future improvements to GNN architectures.
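As a hedged illustration of how such scores could be read, the sketch below ranks two-hop meta-paths by the product of per-layer attention weights. This follows from expanding a product of weighted sums: `(sum_i a_i A_i)(sum_j b_j A_j) = sum_ij a_i b_j A_i A_j`, so the pair `(i, j)` carries weight `a_i * b_j`. The edge-type labels and logits are hypothetical values chosen for the example, not results from the paper.

```python
import math
from itertools import product

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical edge-type labels (P = paper, A = author, C = conference,
# I = identity) and hypothetical logits for two selection steps.
edge_types = ["PA", "AP", "PC", "CP", "I"]
layer_logits = [
    [0.1, 2.0, 0.3, 0.2, -1.0],
    [1.5, 0.0, 0.2, 0.1, -0.5],
]

alphas = [softmax(logits) for logits in layer_logits]

# Score every combination of one edge type per step.
scores = {}
for combo in product(range(len(edge_types)), repeat=len(alphas)):
    name = "-".join(edge_types[t] for t in combo)
    scores[name] = math.prod(alphas[step][t] for step, t in enumerate(combo))

ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
for name, score in ranked[:3]:
    print(f"{name}: {score:.3f}")
```

Because each step's weights sum to one, the scores over all combinations also sum to one, so they can be read as a distribution over candidate meta-paths.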

Implications and Future Directions

The introduction of GTNs marks a significant step in the evolution of graph learning frameworks. By adapting the graph structure based on task-specific needs, these networks optimize the representation learning process. The scalability improvements made possible by FastGTNs open the door for applying these methods to even larger datasets.

Practically, the methods proposed can be pivotal in domains like social networks and biological data, where heterogeneity is prevalent. Theoretically, this work suggests avenues for further research into adaptive and interpretable graph transformations.

In conclusion, the paper demonstrates that GTNs and FastGTNs can improve GNN performance by learning the graph structure itself. Future research could explore combining these approaches with other machine learning paradigms to broaden their application to complex relational data.