Analysis of Factuality in Abstractive Summarization Using the FRANK Benchmark

The paper, "Understanding Factuality in Abstractive Summarization with FRANK: A Benchmark for Factuality Metrics," presents an analytical overview of factuality in modern abstractive summarization models. The authors highlight the notable disconnect between linguistic fluency and factual reliability in contemporary summarization systems. A significant portion of machine-generated summaries, approximately 30%, contain factual errors, underscoring the necessity for robust evaluative metrics that extend beyond traditional n-gram-based measures like BLEU and ROUGE, which are widely considered insufficient in correlating with human judgments of factual accuracy.

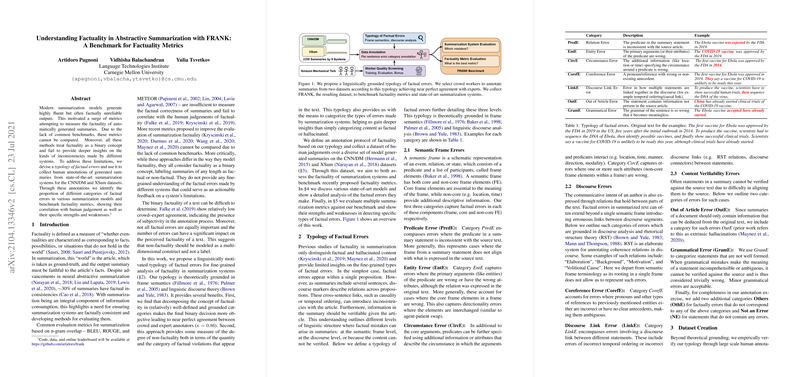

Development of a Typology of Factual Errors

A core contribution of the paper is the development of a typology for factual errors, grounded in theoretical frameworks like frame semantics and linguistic discourse analysis. This typology facilitates an in-depth analysis of summaries by decomposing factuality into various error categories. Seven distinct categories are established, including Predicate, Entity, Circumstance, Coreference, Discourse Link, Out of Article, and Grammatical errors. These categories are critical not only in identifying factual errors but also in systematically annotating and analyzing the outputs of state-of-the-art summarization systems.

Dataset Creation and Human Annotation

The authors leverage their typology to collect human annotations of summaries generated by leading summarization models across the CNN/DM and XSum datasets. Through this annotated dataset, which they term FRANK, they seek to assess the factual accuracy of summaries and analyze factuality metrics rigorously. They find significant discrepancies in the error profile between models trained on CNN/DM versus XSum, highlighting model-specific vulnerabilities and dataset challenges. Notably, reference summaries on the more abstractive XSum dataset displayed a higher incidence of factual errors.

Benchmarking of Factuality Metrics

The FRANK dataset serves as a benchmark for evaluating factuality metrics. Upon analysis, entailment classification metrics like FactCC and dependency-level entailment models like DAE are identified as having a better correlation with human judgments of factuality compared to traditional metrics. These entailment-based approaches outperformed question answering-based metrics like FEQA and QAGS, particularly in modeling semantic frame and content verifiability errors. However, none of the assessed metrics effectively captured discourse-related errors, indicating an area for continued development.

Implications and Future Directions

This research demonstrates the pressing need for innovative metrics to evaluate model outputs in terms of factual accuracy accurately. The introduction of the FRANK benchmark can guide future methodological advancements by providing a systematic means of assessing diverse error categories. Furthermore, the insights on model-specific challenges can inform the development of more sophisticated techniques in abstractive summarization that mitigate factual inaccuracies.

In sum, while substantial progress has been made in the fluency of machine-generated language, ensuring the factual consistency of such outputs remains an evolving challenge. The FRANK benchmark is a significant step toward a nuanced understanding of factuality, holding promise for future advancements in AI-driven summarization systems.