Carbon Emissions and Large Neural Network Training: An Analysis

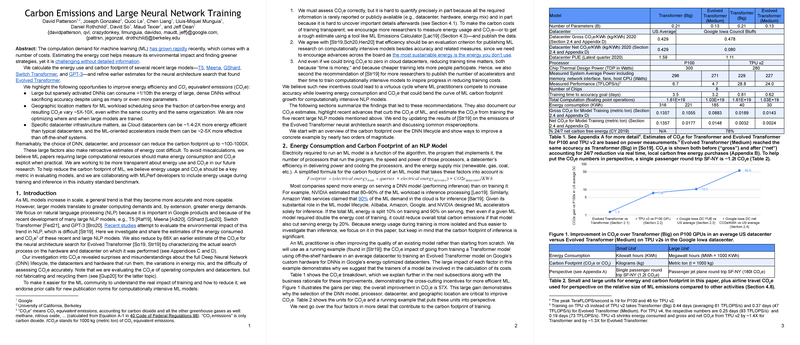

In the paper "Carbon Emissions and Large Neural Network Training," David Patterson, Joseph Gonzalez, Quoc Le, and their co-authors examine the energy consumption and carbon footprint of training large NLP models. The evaluation covers several state-of-the-art models: T5, Meena, GShard, Switch Transformer, and GPT-3. The authors also refine the carbon estimates for the neural architecture search (NAS) that discovered the Evolved Transformer. The analysis identifies concrete ways to improve energy efficiency and reduce carbon emissions in ML.

Key Findings

The paper identifies three primary opportunities to enhance energy efficiency and reduce CO₂ emissions:

- Sparse vs. Dense DNNs: Large but sparsely activated deep neural networks (DNNs) can consume roughly an order of magnitude less energy than large, dense DNNs without sacrificing accuracy.

- Geographic Considerations: Where a model is trained strongly affects its carbon emissions because the energy mix varies by location. Even within the same country, the fraction of carbon-free energy and the resulting CO₂ emissions can vary by a factor of 5 to 10, so choosing the training location and time carefully can cut emissions substantially.

- Datacenter Infrastructure: The specific datacenter infrastructure matters. Cloud datacenters can be about 1.4–2 times more energy-efficient than typical datacenters, and the ML-oriented accelerators inside them can be about 2–5 times more effective than off-the-shelf systems.

The authors support these claims with numerical results. For example, their revised estimate of the carbon emissions from the Evolved Transformer NAS is up to ~88X lower than the initial estimates. The correction comes from characterizing the search as it was actually run on the specified hardware and datacenter.
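To see how the three factors above compound, here is a minimal Python sketch. It assumes the common accounting in which training energy is hours × processors × average power per processor × PUE (the datacenter overhead factor), and emissions are energy × the grid's carbon intensity. The function names and all input values are illustrative placeholders, not figures from the paper.

```python
def training_energy_kwh(hours, num_processors, avg_power_watts, pue):
    """Energy drawn from the grid for one training run, in kWh.

    PUE (power usage effectiveness) scales processor energy up to
    account for datacenter overhead such as cooling.
    """
    return hours * num_processors * avg_power_watts * pue / 1000.0


def emissions_tco2e(energy_kwh, kg_co2e_per_kwh):
    """Convert energy to metric tons of CO2-equivalent."""
    return energy_kwh * kg_co2e_per_kwh / 1000.0


# Hypothetical inputs chosen only to illustrate the arithmetic.
typical = emissions_tco2e(
    training_energy_kwh(hours=100, num_processors=64, avg_power_watts=300, pue=1.6),
    kg_co2e_per_kwh=0.5,
)
efficient = emissions_tco2e(
    training_energy_kwh(hours=100, num_processors=64, avg_power_watts=300, pue=1.1),
    kg_co2e_per_kwh=0.1,
)

print(f"typical datacenter:   {typical:.3f} tCO2e")
print(f"efficient datacenter: {efficient:.3f} tCO2e")
print(f"reduction factor:     {typical / efficient:.1f}x")
```

Because the terms multiply, improvements in the model (fewer processor-hours), the datacenter (lower PUE), and the location (lower carbon intensity) stack rather than merely add.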

Practical and Theoretical Implications

From a practical standpoint, the research has two main implications. First, shifting training workloads to cleaner datacenters, or scheduling training to coincide with low-carbon energy availability, can reduce emissions significantly. Second, by using sparsely activated models, firms can maintain performance while drastically reducing their energy footprint.
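The scheduling idea can be sketched as a simple selection over candidate locations and start times. This is only an illustration: the region names, hours, and carbon-intensity values below are hypothetical, and a real scheduler would also weigh capacity, cost, and data-locality constraints.

```python
from typing import Dict, Tuple

Slot = Tuple[str, int]  # (region name, start hour); both hypothetical


def pick_lowest_carbon_slot(intensity_kg_per_kwh: Dict[Slot, float]) -> Slot:
    """Return the (region, hour) slot with the lowest grid carbon intensity."""
    if not intensity_kg_per_kwh:
        raise ValueError("no candidate slots given")
    return min(intensity_kg_per_kwh, key=intensity_kg_per_kwh.get)


# Placeholder intensities in kg CO2e per kWh, for illustration only.
candidates = {
    ("region-a", 2): 0.45,
    ("region-a", 14): 0.30,
    ("region-b", 2): 0.08,
    ("region-b", 14): 0.12,
}

best = pick_lowest_carbon_slot(candidates)
worst_to_best = max(candidates.values()) / candidates[best]
print(f"best slot: {best}, spread between worst and best: {worst_to_best:.1f}x")
```

For a job with fixed energy needs, emissions scale linearly with the chosen slot's intensity, so the spread between the worst and best slot is also the spread in emissions.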

Theoretically, this research sets a precedent for integrating energy metrics into the performance benchmarks for ML models. It argues that ML papers involving substantial computational resources should explicitly report energy usage and CO₂ emissions, promoting transparency and awareness in the community.

Recommendations and Future Directions

To address the environmental impact, the authors endorse several measures:

- Enhanced Reporting: ML researchers are encouraged to measure and report energy consumption and CO₂ emissions in their publications.

- Publication Incentives: Efficiency metrics should be considered alongside accuracy metrics for ML research publications, thereby fostering advances in energy-efficient machine learning.

- Reduction in Training Time: Faster training not only reduces energy consumption but also decreases costs, making ML research more accessible.

The incorporation of energy usage during training and inference into benchmarks like MLPerf could institutionalize these practices.
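As a rough illustration of what reporting efficiency alongside accuracy could look like, the sketch below defines a small record that carries both kinds of metric. The class name, field names, and values are assumptions made for this example; they do not come from the paper or from MLPerf.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingReport:
    """Hypothetical per-model record pairing quality with efficiency metrics."""
    model_name: str
    accuracy: float         # task metric in [0, 1]
    energy_kwh: float       # measured or estimated training energy
    emissions_tco2e: float  # metric tons of CO2-equivalent

    def as_row(self) -> str:
        """Format the record as one line suitable for a results table."""
        return (
            f"{self.model_name}: accuracy={self.accuracy:.3f}, "
            f"energy={self.energy_kwh:.1f} kWh, "
            f"emissions={self.emissions_tco2e:.3f} tCO2e"
        )


# Placeholder values, for illustration only.
report = TrainingReport("example-model", 0.912, 3072.0, 1.536)
print(report.as_row())
```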

Future Outlook

Looking forward, the paper speculates that if the ML community competes on training quality and carbon footprint rather than on accuracy alone, it could catalyze innovations in algorithms, systems, hardware, and data infrastructure, ultimately slowing the growth of ML's carbon footprint.

Furthermore, there might be increased competition among datacenters to offer lower carbon footprints, driving the adoption of renewable energy sources and advancements in energy-efficient hardware designs. As a result, the carbon emissions associated with training large neural networks could see a significant decline in the foreseeable future.

Conclusion

The paper "Carbon Emissions and Large Neural Network Training" presents crucial insights into the environmental impact of large-scale machine learning models and offers pragmatic recommendations for reducing this impact. By adopting these recommendations, the ML community can take meaningful steps toward sustainability without compromising on the advancements in NLP and other domains leveraging deep learning.