CharBERT: Character-Aware Pre-Trained LLM

Introduction

The paper entitled "CharBERT: Character-aware Pre-trained LLM" presents a novel pre-trained LLM (PLM) named CharBERT, which aims to address the limitations of existing PLMs—specifically the issues of incomplete modeling and lack of robustness when dealing with subword units. Traditional models such as BERT and RoBERTa use Byte-Pair Encoding (BPE) to encode inputs, which can lead to challenges in vocabulary representation and robustness to minor typographical errors. CharBERT addresses these challenges by integrating character-level information, thereby enriching word representations and improving model robustness.

Methodology

CharBERT introduces several innovative components to enhance the standard PLM architecture:

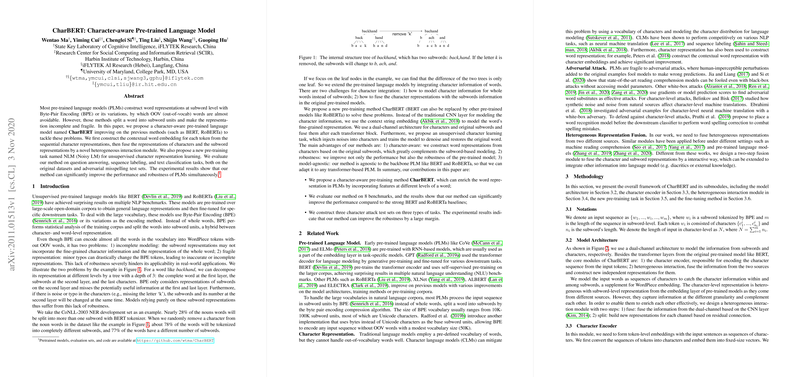

- Character Encoder: The model integrates a character encoder that constructs contextual embeddings from sequential character representations using a bidirectional Gated Recurrent Unit (GRU) layer. This helps capture fine-grained character-level features and inter-character dependencies within words.

- Heterogeneous Interaction Module: To merge the character-level representations with traditional subword-level embeddings, CharBERT employs a dual-channel architecture with a novel interaction module. This module engages in two primary functions—fusion and division—to produce enriched and robust word representations.

- Noisy LLMing (NLM): The paper introduces a new pre-training task that injects character-level noise into words and trains the model to denoise by predicting the original words. This task enhances the model's ability to handle out-of-vocabulary (OOV) words and improves robustness against typographical errors.

Experimental Evaluation

The methodology's efficacy is verified through comprehensive experiments on three types of NLP tasks: question answering (QA), text classification, and sequence labeling. The model's performance is assessed against multiple benchmarks, including SQuAD, CoNLL-2003 NER, and several GLUE tasks.

Key findings from the experiments are:

- Question Answering (SQuAD 1.1 and 2.0): CharBERT demonstrates superior performance over baseline models like BERT and RoBERTa, achieving noticeable improvements in both exact match (EM) and F1 scores.

- Text Classification: On tasks such as CoLA, MRPC, QQP, and QNLI, CharBERT exhibits an overall enhancement in accuracy and Matthew's correlation score. However, the improvements on text classification tasks are less pronounced compared to QA and sequence labeling tasks.

- Sequence Labeling (NER and POS): CharBERT sets new state-of-the-art results on the Penn Treebank POS tagging dataset and shows significant improvements in NER performance, underscoring its strength in token-level tasks.

Furthermore, CharBERT's robustness is evaluated through adversarial misspelling attacks. The model significantly outperforms the baselines, including adversarially trained models and those with typo correction modules, in terms of maintaining high performance despite typographical errors.

Implications and Future Directions

The integration of character-level information in CharBERT offers several clear benefits and paves the way for future developments:

- Enhanced Robustness: The ability to handle character-level noise through the NLM task means that CharBERT is more resilient to real-world text input variability, such as typos and OCR errors.

- Improved Representations: By capturing both subword and character-level features, CharBERT delivers richer word embeddings that can lead to better performance across various NLP tasks.

- Model-Agnostic Architecture: The techniques proposed can be generalized and applied to other PLMs, potentially benefiting a broader range of transformer-based models.

Future research can explore extending CharBERT's methodology to other languages, particularly those with rich morphological structures like Arabic and Korean, where character-level information could be even more crucial. Additionally, deploying CharBERT in real-world applications might provide further insight into its robustness and versatility, and further work on defending against other types of noise, such as word-level and sentence-level perturbations, can create more resilient NLP systems.

Conclusion

The research presented in this paper successfully addresses some critical limitations of current PLMs by proposing CharBERT—a character-aware pre-trained model. Through innovative integration of character-level information and new pre-training tasks, CharBERT not only enhances performance on standard benchmarks but also significantly improves robustness against character-level noise. This approach holds great promise for advancing the versatility and resilience of LLMs in various practical applications.