Analyzing Multi-Hop Reasoning in Commonsense-Augmented Language Generation

The paper "Language Generation with Multi-Hop Reasoning on Commonsense Knowledge Graph" presents Generation with Multi-Hop Reasoning Flow (GRF), a method that adds commonsense reasoning to text generation. It targets a known limitation of generative pre-trained language models (GPLMs) such as GPT-2: they perform poorly when a task requires reasoning over implicit commonsense knowledge that is not stated in the context.

Key Contributions and Methodology

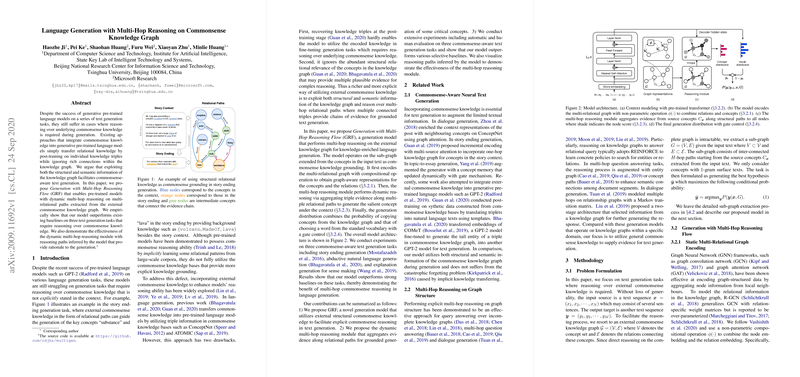

The authors propose GRF, a model that performs dynamic multi-hop reasoning on multi-relational paths extracted from an external commonsense knowledge graph. The central hypothesis is that exploiting both the structure and the semantics of the knowledge graph helps a pre-trained language model produce commonsense-aware text.

Previous methods transfer relational knowledge by post-training on isolated knowledge triples. GRF instead reasons dynamically over paths that connect multiple triples, which captures the interconnections within a knowledge graph such as ConceptNet. The model has three components:

- Static Multi-Relational Graph Encoding: The model encodes the knowledge graph with a Graph Neural Network that combines node and relation embeddings through a non-parametric composition operation, yielding graph-aware concept representations.

- Dynamic Multi-Hop Reasoning: This module is the crux of the GRF model, performing reasoning during text generation by updating node scores through evidence aggregation over multi-hop relational paths.

- Integration with Pre-Trained Models: GRF is built on the GPT-2 architecture. A gating mechanism combines the language model's word predictions with concept selection from the knowledge graph.
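The last two components can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the discount factor `gamma`, the fixed `RELEVANCE` table, the toy graph, and the gate value are all assumptions made for illustration. In the model itself, triple relevance and the gate would be computed from learned representations at each decoding step; here they are hard-coded constants, and each concept is scored only once, at the hop where it is first reached.

```python
import math

def propagate_scores(triples, sources, relevance, hops=2, gamma=0.5, aggregate=max):
    """Spread scores from source concepts along relational paths.

    Each newly reached node v collects evidence gamma * score(u) + relevance(u, r, v)
    from every incoming triple whose head u was reached in the previous hop,
    then reduces that evidence with `aggregate` (max or sum).
    """
    scores = {s: 1.0 for s in sources}  # assumed initialisation: sources start at 1
    frontier = set(sources)
    for _ in range(hops):
        evidence = {}
        for (u, r, v) in triples:
            if u in frontier and v not in scores:
                evidence.setdefault(v, []).append(gamma * scores[u] + relevance[(u, r, v)])
        for v, values in evidence.items():
            scores[v] = aggregate(values)
        frontier = set(evidence)
    return scores

def softmax(scores):
    """Normalise node scores into a probability distribution over concepts."""
    top = max(scores.values())
    exps = {k: math.exp(v - top) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

def gated_mixture(lm_probs, concept_probs, gate):
    """Mix the language model's distribution with the concept distribution."""
    words = set(lm_probs) | set(concept_probs)
    return {
        w: gate * concept_probs.get(w, 0.0) + (1.0 - gate) * lm_probs.get(w, 0.0)
        for w in words
    }

# Hypothetical toy graph and relevance values (not taken from ConceptNet or the paper).
TRIPLES = [
    ("rain", "Causes", "wet"),
    ("wet", "RelatedTo", "towel"),
    ("rain", "RelatedTo", "umbrella"),
]
RELEVANCE = {TRIPLES[0]: 0.9, TRIPLES[1]: 0.6, TRIPLES[2]: 0.3}

scores = propagate_scores(TRIPLES, ["rain"], RELEVANCE)
concept_probs = softmax(scores)
lm_probs = {"the": 0.7, "wet": 0.2, "towel": 0.1}  # stand-in for GPT-2's output
final = gated_mixture(lm_probs, concept_probs, gate=0.4)
print(sorted(final.items(), key=lambda kv: -kv[1]))
```

Because both input distributions sum to one, the gated mixture is itself a valid distribution for any gate value between 0 and 1. A gate near 1 favours concepts reached through the graph, while a gate near 0 falls back on the language model.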

Experimental Evaluation

The paper evaluates GRF on three tasks that require commonsense reasoning: story ending generation (SEG), abductive natural language generation (αNLG), and explanation generation (EG). GRF consistently outperforms strong baselines, including a fine-tuned GPT-2 model and a commonsense-enhanced variant trained on Open Mind Common Sense (OMCS) data, on automatic metrics such as BLEU, ROUGE-L, and CIDEr. These results support the benefit of dynamic multi-hop reasoning.
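For readers unfamiliar with these metrics, ROUGE-L scores a generated sentence by its longest common subsequence (LCS) with a reference. The sketch below is a simplified illustration, assuming whitespace tokenisation and a balanced F1 score. Published evaluation scripts may weight recall differently or handle multiple references, and the function names and example sentences are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_f1(candidate, reference):
    """Balanced F1 over LCS-based precision and recall."""
    cand_tokens, ref_tokens = candidate.split(), reference.split()
    lcs = lcs_length(cand_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

# The shared subsequence here is "she an umbrella" (3 tokens).
print(rouge_l_f1("she grabbed an umbrella", "she took an umbrella outside"))
```

Metrics of this kind reward word overlap with the reference, so they are only a proxy for whether a generated ending or explanation is reasonable.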

Furthermore, an ablation study isolates the impact of GRF's components, confirming that both the static graph encoding and the dynamic reasoning contribute positively to performance. The results suggest that explicit reasoning helps the model generate text that is both fluent and reasonable given its context.

Implications and Future Directions

By integrating structural commonsense knowledge into a pre-trained language model, GRF offers an alternative to methods that rely on implicit knowledge transfer, and it advances tasks that demand reasoning beyond the given context. This work lays the groundwork for future investigations into more complex reasoning paradigms within language generation, particularly those that might leverage additional layers of graph connectivity or heterogeneity in knowledge types.

Moreover, the dynamic reasoning integrated in GRF marks a step towards more interpretable AI, as reasoning paths provide tangible rationales for decision-making processes during generation. This interpretability is essential for applications requiring accountability and transparency.
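One way such a rationale could be surfaced is sketched below. It assumes max aggregation, so that each concept's score comes from a single incoming triple that can be recorded as a back-pointer. The function names, `gamma`, the toy graph, and the relevance values are hypothetical and serve only to illustrate the idea.

```python
def propagate_with_backpointers(triples, sources, relevance, hops=2, gamma=0.5):
    """Score nodes hop by hop and remember which triple gave each node its score."""
    scores = {s: 1.0 for s in sources}
    back = {}  # node -> (previous node, relation) on its best-scoring path
    frontier = set(sources)
    for _ in range(hops):
        best = {}
        for (u, r, v) in triples:
            if u in frontier and v not in scores:
                candidate = gamma * scores[u] + relevance[(u, r, v)]
                if v not in best or candidate > best[v][0]:
                    best[v] = (candidate, u, r)
        for v, (score, u, r) in best.items():
            scores[v] = score
            back[v] = (u, r)
        frontier = set(best)
    return scores, back

def explain(node, back):
    """Walk the back-pointers to recover the path from a source concept to `node`."""
    path = []
    while node in back:
        u, r = back[node]
        path.append((u, r, node))
        node = u
    return list(reversed(path))

# Hypothetical toy graph with two routes to "slip".
triples = [
    ("rain", "Causes", "wet_ground"),
    ("wet_ground", "Causes", "slip"),
    ("rain", "RelatedTo", "umbrella"),
    ("umbrella", "RelatedTo", "slip"),
]
relevance = dict(zip(triples, [0.9, 0.8, 0.3, 0.1]))

scores, back = propagate_with_backpointers(triples, ["rain"], relevance)
print(explain("slip", back))
```

In this toy case the recovered path runs through `wet_ground` rather than `umbrella`, because that route carries more evidence. A path like this can be shown next to a generated word as the reason it was selected.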

In conclusion, the paper makes a notable advance in using external commonsense knowledge to improve language generation, and it opens paths toward AI systems capable of deeper understanding and reasoning.