Insights and Implications of "Counterfactual VQA: A Cause-Effect Look at Language Bias"

The paper "Counterfactual VQA: A Cause-Effect Look at Language Bias" by Niu et al. examines language bias in Visual Question Answering (VQA), a task that underpins multi-modal applications such as visual dialog, multi-modal navigation, and visual reasoning. VQA models have repeatedly been shown to rely on language biases. They answer from superficial correlations between questions and answers rather than from the image, which undermines their multi-modal reasoning. The paper proposes a novel counterfactual inference framework that reduces this reliance and thereby supports the development of more robust VQA models.

Technical Overview

The paper's primary contribution is a counterfactual inference framework for capturing and mitigating the dominant language biases in VQA models. Language bias is formulated as the direct causal effect of the question on the answer. At inference time, the framework subtracts this direct effect from the total effect, so that the remaining prediction reflects multi-modal understanding of both the image and the question.
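In causal-inference terms, the subtraction can be written with three quantities. The notation here follows our reading of the paper and is an assumption of this sketch: Z(q, v, k) denotes the answer scores when the question q, the image v, and the multi-modal knowledge k extracted from both are all present, and a star marks an input that is blocked. The total effect is TE = Z(q, v, k) - Z(q*, v*, k*), the direct effect of the question is NDE = Z(q, v*, k*) - Z(q*, v*, k*), and the debiased quantity is TIE = TE - NDE. The minimal Python sketch below uses a hypothetical function name (`causal_effects`) and made-up scores to check this arithmetic on plain lists.

```python
def causal_effects(z_qvk, z_q_only, z_none):
    """Return (TE, NDE, TIE) as per-answer lists.

    z_qvk:    scores Z(q, v, k), with question, image and knowledge all present
    z_q_only: scores Z(q, v*, k*), with the image and knowledge blocked
    z_none:   scores Z(q*, v*, k*), with every input blocked (reference point)
    """
    te = [a - b for a, b in zip(z_qvk, z_none)]      # total effect
    nde = [a - b for a, b in zip(z_q_only, z_none)]  # natural direct effect of q
    tie = [t - n for t, n in zip(te, nde)]           # total indirect effect
    return te, nde, tie

# Toy scores for two candidate answers (made-up numbers).
te, nde, tie = causal_effects([2.0, 1.0], [3.0, -1.0], [0.5, 0.5])
print(tie)  # [-1.0, 2.0]
```

Because Z(q*, v*, k*) appears in both TE and NDE, it cancels out of TIE, which reduces to Z(q, v, k) - Z(q, v*, k*). Under this formulation, debiased inference only needs the factual scores and the question-only (counterfactual) scores.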

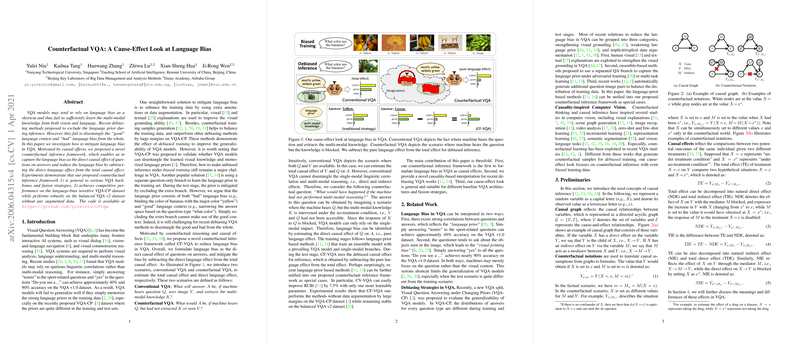

The paper contrasts two scenarios: conventional VQA and counterfactual VQA. In the conventional scenario, the model answers with all of its inputs available, namely both the image and the question. The counterfactual scenario asks what the answer would have been if the model had received the question but not seen the image. Because the visual input, and the multi-modal knowledge fused from it, is blocked, the resulting prediction isolates the direct effect of the question on the answer.
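The two scenarios can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Each answer receives a score from a question-only branch (`z_q`), a vision-only branch (`z_v`), and a fused multi-modal branch (`z_k`). These scores are combined with a SUM-style fusion, log sigmoid(z_q + z_v + z_k), which we understand to be one of the fusion variants discussed in the paper. In the counterfactual scenario, the blocked image and knowledge scores are replaced by a single constant `c`. The function names, the toy logits, and the choice `c = 0.0` are all hypothetical.

```python
import math

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def fuse_sum(z_q, z_v, z_k):
    """Assumed SUM-style fusion: log sigmoid(z_q + z_v + z_k) for each answer."""
    return [log_sigmoid(q + v + k) for q, v, k in zip(z_q, z_v, z_k)]

def factual_and_counterfactual(z_q, z_v, z_k, c):
    """Score answers in both scenarios.

    Conventional (factual): question, image and fused knowledge all present.
    Counterfactual: the question is kept, but the image and knowledge branches
    are replaced by the same constant c, as if the image had never been seen.
    """
    factual = fuse_sum(z_q, z_v, z_k)
    blocked = [c] * len(z_q)
    counterfactual = fuse_sum(z_q, blocked, blocked)
    return factual, counterfactual

def debiased_scores(z_q, z_v, z_k, c):
    """Factual minus counterfactual scores, removing the question-only effect."""
    factual, counterfactual = factual_and_counterfactual(z_q, z_v, z_k, c)
    return [f - cf for f, cf in zip(factual, counterfactual)]

# Toy example with two candidate answers. The question branch strongly
# prefers "yes" (a language prior); the image and knowledge branches favor "no".
answers = ["yes", "no"]
z_q, z_v, z_k = [4.0, -2.0], [-1.0, 1.5], [-1.0, 1.5]
factual, _ = factual_and_counterfactual(z_q, z_v, z_k, c=0.0)
scores = debiased_scores(z_q, z_v, z_k, c=0.0)
print(answers[factual.index(max(factual))])  # prints: yes (biased prediction)
print(answers[scores.index(max(scores))])    # prints: no (debiased prediction)
```

In this toy case the question branch alone already pushes strongly toward "yes", so "yes" scores barely better in the factual scenario than in the counterfactual one. "No" is supported mainly by the visual evidence, so it gains the most from seeing the image and wins after the subtraction.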

Empirical Evaluation

The experiments show that the counterfactual framework applies across a range of VQA models and settings. It yields large gains on datasets that are sensitive to language bias, such as VQA-CP, whose training and test splits have different answer distributions for each question type, while maintaining accuracy on the more balanced VQA v2 dataset.

On VQA-CP v2, the proposed CF-VQA outperforms several prior methods without relying on data augmentation, an approach that some earlier methods depend on. The improvement is concentrated on yes/no questions, while accuracy on the remaining question types is largely preserved, which indicates that the framework addresses language bias effectively.

CF-VQA also unifies previous methods such as RUBi and Learned-Mixin under a single causal-inference perspective, and extends them. The authors show empirically that applying their framework to existing models requires only minimal code changes and yields substantial accuracy improvements.

Implications and Future Directions

This research has significant implications both for building more robust VQA systems and for the broader field of AI. It fits a growing emphasis on model transparency and robustness, particularly in settings where a decision must reconcile linguistic and visual cues that may point in different directions.

Future directions could include making counterfactual models more reliable when the strength of language bias varies across datasets, and incorporating contextual understanding more deeply. Another open question is how to remove harmful bias while preserving language cues that are genuinely informative, which could lead to more refined inference models.

In conclusion, by bringing causal inference into VQA, this paper lays a promising foundation for addressing inherent biases while encouraging deeper integration and understanding of multi-modal data. The framework's extensibility and demonstrated effectiveness make a case for broader application and continued refinement of this line of research, which contributes to both AI reasoning and interpretability.