- The paper presents an open platform that bridges simulation and real-world testing for embodied AI to address the Sim2Real transfer challenge.

- It leverages modular, reconfigurable physical environments and a corpus of 89 scenes to enable accessible, cost-effective research.

- Domain adaptation techniques, including CycleGAN, are evaluated, revealing performance gaps that highlight the need for robust feature extraction.

Introduction

The paper "RoboTHOR: An Open Simulation-to-Real Embodied AI Platform" presents a platform for bridging the gap between simulation-based and real-world experiments with embodied AI agents. It aims to democratize embodied AI research by giving researchers an open, accessible environment in which to develop models in simulation and then test them in real-world scenarios. The primary goal is to address the persistent challenge of transferring behaviors learned in simulation to reality, known as the Sim2Real transfer problem.

The RoboTHOR platform is built upon AI2-THOR and is designed so that its simulated and real-world environments closely mirror each other. Its corpus contains 89 apartment-like scenes: 75 are devoted to training and validation, and 14 are reserved for testing in both simulated and physical settings. Three design properties stand out:

- Modularity and Reconfigurability: The physical environments are constructed using modular components, facilitating rapid reconfiguration and scene diversity. This approach allows for efficient scaling of test environments with minimal physical and financial overhead.

- Accessibility: A key design goal is ensuring that researchers worldwide can access the physical testing apparatus remotely and at no cost. This is supported by a scheduling system that allows different teams to reserve time on the physical robots and leverage the API for seamless transitions from simulation to physical testing.

- Benchmarking: The platform supports various embodied AI tasks, with initial benchmarks focusing on visual semantic navigation. It measures the performance of models trained in simulation and deployed in real-world environments, uncovering significant performance drops and identifying key transfer challenges.
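To make the benchmarking idea concrete, the following is a minimal sketch of how navigation episodes are often scored. This summary does not name the metrics used, so the choice of success rate and SPL (Success weighted by Path Length, a widely used navigation metric) is an assumption here, as are the `Episode` fields and the toy values, which are made up for illustration and are not results from the paper.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One navigation episode (hypothetical record layout)."""
    success: bool         # did the agent reach the target object?
    shortest_path: float  # shortest-path length to the target, assumed > 0
    agent_path: float     # length of the path the agent actually travelled


def success_rate(episodes):
    """Fraction of episodes in which the agent reached the target."""
    return sum(e.success for e in episodes) / len(episodes)


def spl(episodes):
    """Success weighted by Path Length.

    Each successful episode contributes shortest / max(agent, shortest);
    failed episodes contribute 0. The result is averaged over all episodes.
    """
    total = 0.0
    for e in episodes:
        if e.success:
            total += e.shortest_path / max(e.agent_path, e.shortest_path)
    return total / len(episodes)


# Toy values for illustration only, not measurements from the paper.
toy_episodes = [
    Episode(success=True, shortest_path=4.0, agent_path=5.0),
    Episode(success=True, shortest_path=2.0, agent_path=2.0),
    Episode(success=False, shortest_path=3.0, agent_path=6.0),
    Episode(success=True, shortest_path=6.0, agent_path=8.0),
]

print("success rate:", success_rate(toy_episodes))
print("SPL:", spl(toy_episodes))
```

Scoring the same set of episodes once in simulation and once on the physical robot with functions like these would give a direct measure of the transfer gap.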

Sim2Real Challenges

The paper's experimentation with semantic navigation tasks highlights several challenges in Sim2Real transfer:

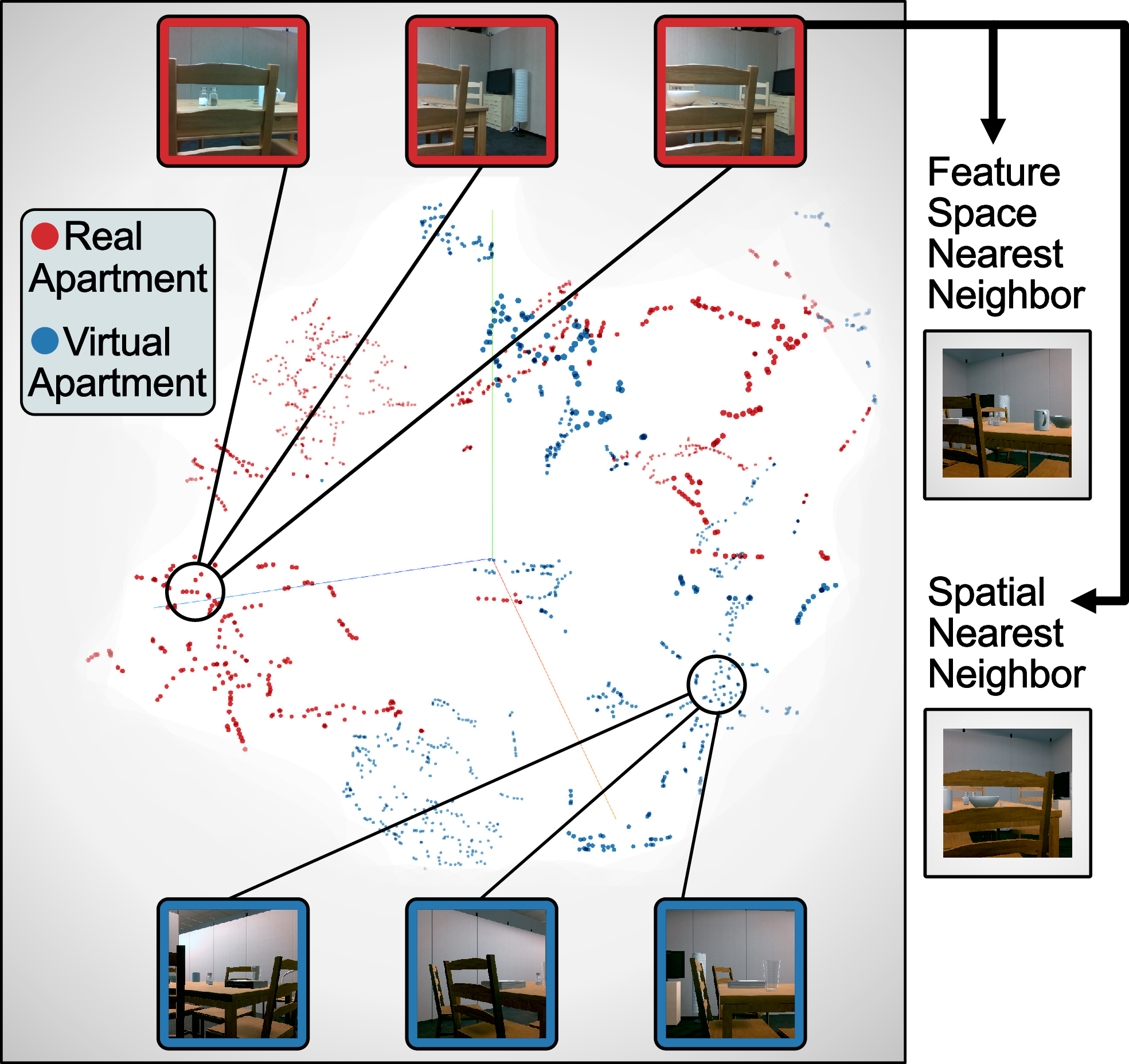

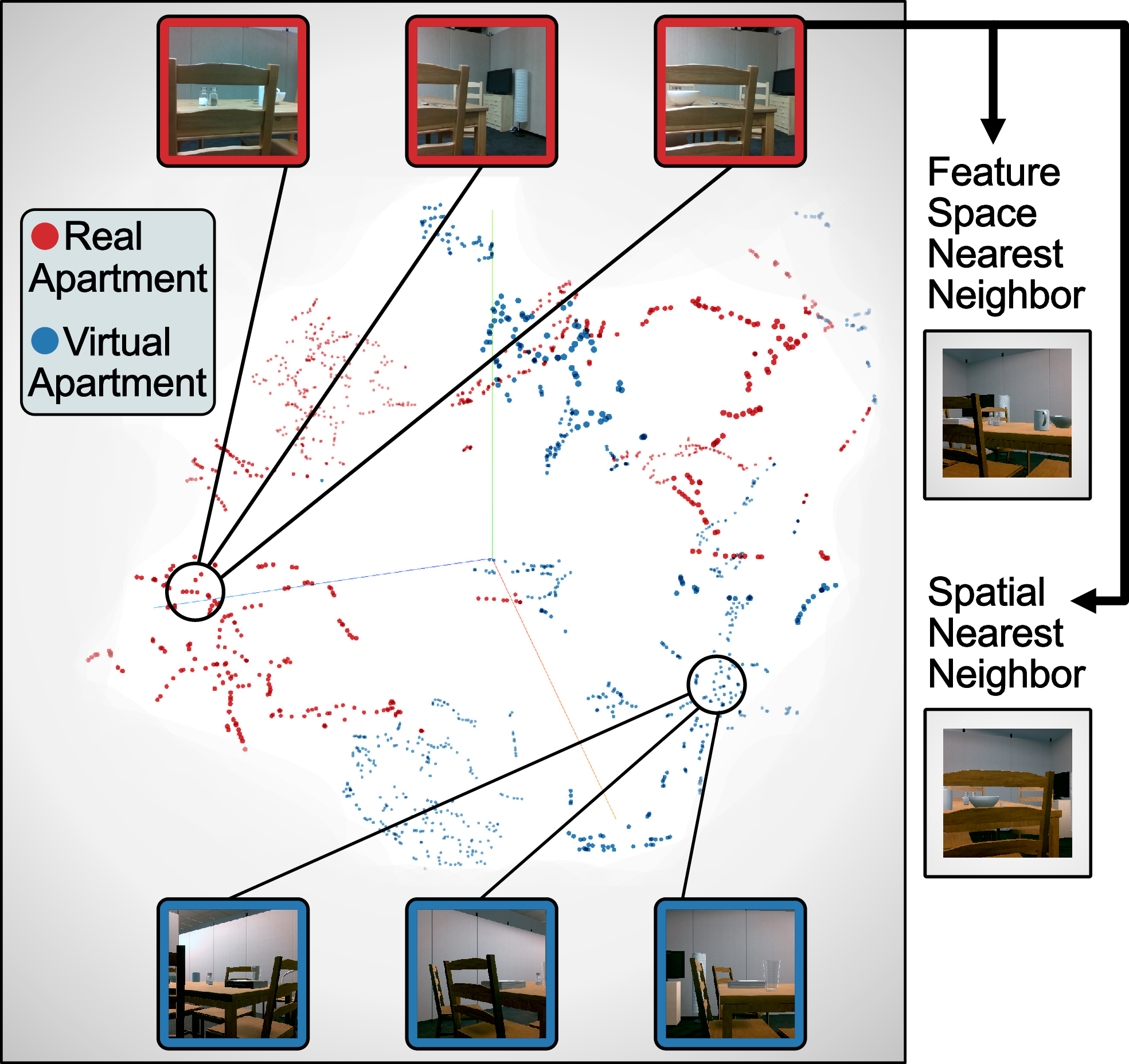

- Appearance Disparities: Although visual similarities between simulated and real images are perceptible, significant disparities exist at the feature level when embeddings are analyzed using t-SNE, hindering model transfer.

Figure 1: Comparison of embeddings for real and synthetic images, highlighting clear separation in t-SNE visualization.

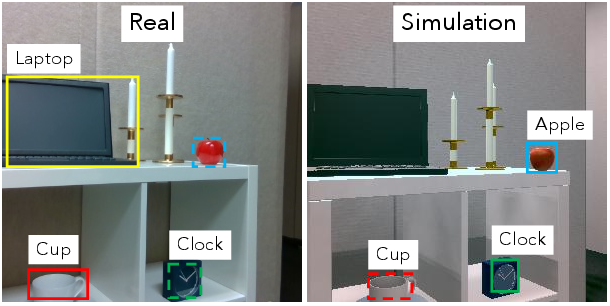

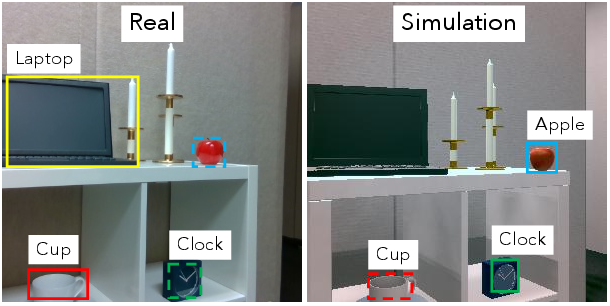

- Detection Variability: Object detection performance degrades when models trained on natural image datasets, such as MS-COCO, are evaluated on simulation-rendered images versus real-world images.

Figure 2: Object detection results in both real and simulated images showing differences in detection confidence.

- Control Dynamics: Differences between real and simulated robotic control, caused by factors such as motor noise and physical interactions, further complicate transfer. Experiments reveal that deterministic movements learned in simulation do not translate well to the noisy mechanics of real-world robots.
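The control-dynamics point can be illustrated with a small sketch that replays one fixed action sequence under ideal and noisy actuation. Everything numeric here is an assumption made for illustration: the 0.25 m step, the 30 degree turn, the Gaussian noise model, and its standard deviations are not taken from the paper, and `rollout` is a hypothetical helper rather than part of any RoboTHOR API.

```python
import math
import random


def rollout(actions, step_m=0.25, turn_deg=30.0,
            trans_sigma_m=0.0, rot_sigma_deg=0.0, rng=None):
    """Integrate a 2D pose over discrete actions; return the final (x, y).

    With both sigmas at 0 this is the deterministic simulator-style motion.
    Non-zero sigmas add Gaussian noise to every move and turn (assumed model).
    """
    if rng is None:
        rng = random.Random(0)
    x, y, heading = 0.0, 0.0, 0.0  # heading in radians
    for action in actions:
        if action == "MoveAhead":
            dist = step_m + rng.gauss(0.0, trans_sigma_m)
            x += dist * math.cos(heading)
            y += dist * math.sin(heading)
        elif action in ("RotateLeft", "RotateRight"):
            sign = 1.0 if action == "RotateLeft" else -1.0
            turn = turn_deg + rng.gauss(0.0, rot_sigma_deg)
            heading += sign * math.radians(turn)
        else:
            raise ValueError(f"unknown action: {action}")
    return x, y


# A fixed plan: forward 1 m, turn left 90 degrees, forward 1 m.
plan = ["MoveAhead"] * 4 + ["RotateLeft"] * 3 + ["MoveAhead"] * 4
ideal = rollout(plan)

# Replay the same plan many times with assumed noise levels.
rng = random.Random(42)
drifts = []
for _ in range(200):
    nx, ny = rollout(plan, trans_sigma_m=0.02, rot_sigma_deg=3.0, rng=rng)
    drifts.append(math.hypot(nx - ideal[0], ny - ideal[1]))
mean_drift = sum(drifts) / len(drifts)

print("ideal end position:", ideal)
print("mean drift from ideal end position (m):", mean_drift)
```

Because the errors compound over the sequence, a policy that relies on open-loop action counts learned in a deterministic simulator ends up in a different place on a noisy robot, even when each individual step is only slightly off.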

Domain Adaptation Strategies

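To address the Sim2Real gap, the authors experiment with domain adaptation techniques, such as CycleGAN-based image translation, which aim to align the simulated and real-world image domains. The evaluation still reveals performance gaps, which points to the need for more robust feature extraction.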
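As a rough illustration of the idea behind CycleGAN-style translation, the sketch below computes only the cycle-consistency term: an image mapped from simulation to the real domain and back should reconstruct the original, and likewise in the other direction. This is not the authors' implementation. Images are represented as flat lists of pixel intensities, the two "generators" are hypothetical toy functions standing in for learned networks, and the adversarial losses of a full CycleGAN are omitted.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(sim_batch, real_batch, sim_to_real, real_to_sim):
    """L1 reconstruction error of sim -> real -> sim plus real -> sim -> real."""
    forward = [l1_distance(x, real_to_sim(sim_to_real(x))) for x in sim_batch]
    backward = [l1_distance(y, sim_to_real(real_to_sim(y))) for y in real_batch]
    return sum(forward) / len(forward) + sum(backward) / len(backward)


# Hypothetical toy "generators": a brightness/contrast shift and its inverses.
def sim_to_real(img):
    return [0.8 * p + 0.1 for p in img]


def real_to_sim_exact(img):
    # Exact inverse of sim_to_real, so cycles reconstruct the input.
    return [(p - 0.1) / 0.8 for p in img]


def real_to_sim_rough(img):
    # Imperfect inverse: removes the offset but ignores the contrast change.
    return [p - 0.1 for p in img]


# Toy pixel values, for illustration only.
sim_batch = [[0.0, 0.5, 1.0], [0.2, 0.4, 0.6]]
real_batch = [[0.1, 0.5, 0.9], [0.3, 0.3, 0.7]]

loss_exact = cycle_consistency_loss(sim_batch, real_batch,
                                    sim_to_real, real_to_sim_exact)
loss_rough = cycle_consistency_loss(sim_batch, real_batch,
                                    sim_to_real, real_to_sim_rough)

print("cycle loss with exact inverse:", loss_exact)
print("cycle loss with rough inverse:", loss_rough)
```

During training, this term is minimized together with adversarial losses so that translated simulation frames resemble real camera images while preserving scene content.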

Conclusion

The RoboTHOR platform represents a significant advancement for embodied AI research, particularly in addressing the Sim2Real challenge. While current benchmarks show substantial performance gaps, the open nature of RoboTHOR gives researchers a foundation for developing improved models that draw on both simulation and real-world testing. Future work could focus on making models more robust to variability in visual appearance and control dynamics, ultimately advancing the deployment of AI agents in real-world applications.