Multi-Turn Dialogue Reasoning: Evaluation with MuTual Dataset

The paper introduces MuTual, a curated dataset devised to evaluate complex reasoning capabilities in non-task oriented dialogue systems. The current conversational AI models have seemingly plateaued in generating coherent responses, but they struggle with responses that require logical and commonsense reasoning. This paper highlights these deficits by presenting MuTual, a dataset structured to require nuanced reasoning over multi-turn dialogues.

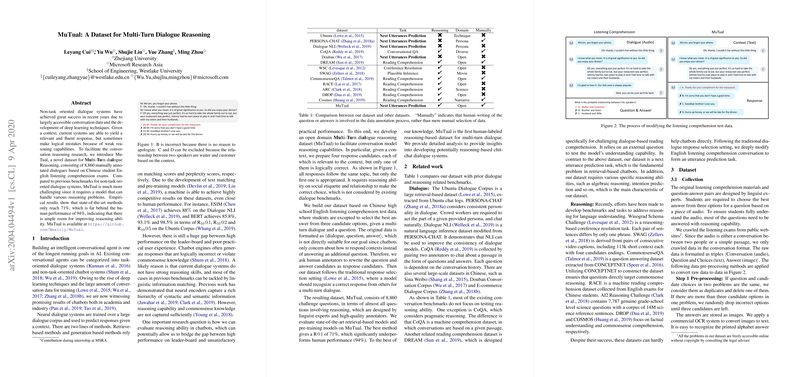

Dataset Composition and Rationale

MuTual is comprised of 8,860 dialogues derived from Chinese student English listening comprehension exams. The dataset is annotated manually to ensure quality, making it a challenging benchmark compared to prior dialogue systems. Each dialogue is accompanied by multiple response options, where only one response is logically coherent within the context provided, while others are designed to mislead models relying on simplistic text matching algorithms.

The dataset is distinct because it explicitly targets reasoning in dialogue, an essential component if conversational agents are to interact naturally and intelligently. Unlike previous datasets that might be solved through syntactic matching, MuTual demands semantic understanding, situational awareness, and even basic algebraic reasoning, testing models on their ability to infer intentions, attitudes, and real-world relationships between concepts.

Empirical Findings

Empirical results indicate significant room for growth. Even state-of-the-art models achieve a best performance of only 71% R@1 with the tested methodology, markedly lower than the human benchmark of 94%. This underlines a critical gap where models need to evolve to better mirror human cognitive processing capabilities.

Several methods were evaluated, including conventional retrieval-based and generation-based models alongside advanced pre-trained models like BERT and RoBERTa. While these pre-trained models exhibit improved performance over older paradigms, they still fall short of human-like reasoning. This suggests current models may learn latent linguistic patterns rather than engaging in true reasoning.

In particular, RoBERTa demonstrates notable, albeit insufficient, performance improvement. These findings suggest that while training on expansive datasets enriches linguistic comprehension, it does not translate seamlessly to enhanced reasoning, likely because existing training objectives do not adequately emulate reasoning tasks.

Implications and Future Directions

The introduction of MuTual paves the way for developing more sophisticated methodologies that prioritize reasoning. Enhancement strategies might include training more intensive models with heterogeneous data or integrating reasoning-focused objectives during pre-training phases. Additionally, fine-tuning techniques could benefit from targeted datasets like MuTual to develop dialogue agents' reasoning capabilities uniquely.

Practically, improving reasoning within dialogue systems has compelling implications for fields like automated customer service, educational technologies, and human-machine collaboration. Theoretical advancements in this domain may also contribute insights into the intersection of language comprehension and cognitive processing in AI, further bridging the gap between human and machine interaction.

The paper hints at readiness and need for continued efforts to build models capable of not just answering queries, but engaging in dialogues that are logical and contextually aware. Future research may draw from MuTual to develop novel architectures or innovative training paradigms that push the boundary of what is possible in dialogue reasoning.

Conclusion

MuTual serves as both a critical assessment tool and a catalyst for advancing reasoning capabilities in dialogue systems. The dataset provides a compelling challenge to the AI community, emphasizing that while linguistic fluency is a milestone, genuine discourse understanding is the true frontier. As systems improve in multi-turn reasoning, the real-world applications of dialogue systems will grow correspondingly, leading to more meaningful and reliable interactions with advanced AI agents.