Heterogeneous Graph Transformer: A Comprehensive Overview

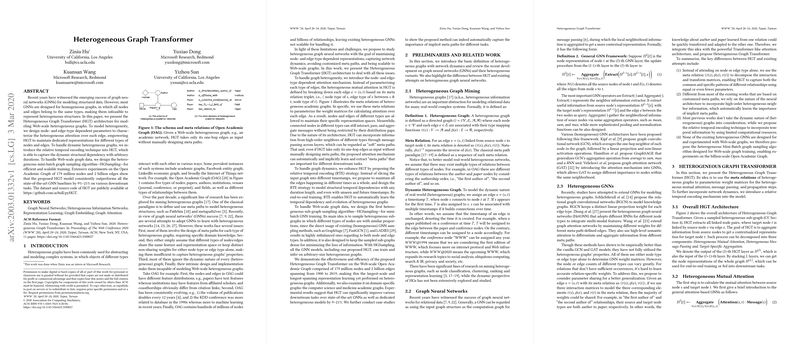

Recent advances in graph neural networks (GNNs) have focused predominantly on homogeneous graphs, which assume that all nodes and all edges are of a single type. Real-world data, however, is often heterogeneous, with multiple types of nodes and edges, and it calls for models that can represent and learn from that diversity. The paper introduces the Heterogeneous Graph Transformer (HGT), an architecture designed for modeling web-scale, dynamic heterogeneous graphs.

Key Contributions

The paper makes three main contributions:

- Heterogeneous Attention Mechanism: HGT uses node- and edge-type dependent parameters to compute the attention over each edge, so that a single model can handle many node and edge types while each type keeps its own representation space.

- Relative Temporal Encoding: To model dynamic graphs, HGT uses a relative temporal encoding (RTE) technique that captures temporal dependencies over time gaps of arbitrary length.

- Heterogeneous Mini-Batch Graph Sampling: The paper introduces HGSampling, an algorithm for efficient, scalable training on web-scale heterogeneous graphs that samples sub-graphs which are dense and balanced across node types.

Experimental Evaluation

The model was evaluated on the Open Academic Graph (OAG), which contains 179 million nodes and 2 billion edges. HGT consistently outperformed state-of-the-art GNN baselines by 9–21% across various downstream tasks, demonstrating its effectiveness on large-scale heterogeneous graphs.

Model Architecture

Heterogeneous Attention Mechanism

HGT builds its heterogeneous mutual attention on meta relation triplets: each edge e = (s, t) is decomposed into its source node type τ(s), edge type φ(e), and target node type τ(t). This design maintains distinct representation spaces for different node and edge types. Through this type-dependent attention, HGT aggregates information from high-order neighbors of diverse types.
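To make the type-dependent attention concrete, here is a minimal single-head sketch in plain Python of one HGT-style layer updating a single target node. It is an illustration under stated assumptions, not the authors' implementation: weights are random rather than trained, the hidden size is tiny, the node and edge type names are hypothetical, ReLU stands in for the nonlinearity, and multi-head attention, dropout, and batching are omitted. The names K_LIN, Q_LIN, M_LIN, A_LIN, W_ATT, W_MSG, and MU are illustrative labels for the node-type projections, edge-type matrices, and meta-relation prior.

```python
import math
import random

D = 4  # hidden size, kept tiny for illustration
NODE_TYPES = ["paper", "author", "venue"]
EDGE_TYPES = ["writes", "publishes", "cites"]  # hypothetical relation names

rng = random.Random(0)

def rand_matrix(n):
    """Random n x n matrix standing in for learned weights."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(n)) for _ in range(n)] for _ in range(n)]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# One projection per node type (K/Q/M/A) and one matrix per edge type (ATT/MSG).
K_LIN = {t: rand_matrix(D) for t in NODE_TYPES}
Q_LIN = {t: rand_matrix(D) for t in NODE_TYPES}
M_LIN = {t: rand_matrix(D) for t in NODE_TYPES}
A_LIN = {t: rand_matrix(D) for t in NODE_TYPES}
W_ATT = {e: rand_matrix(D) for e in EDGE_TYPES}
W_MSG = {e: rand_matrix(D) for e in EDGE_TYPES}
MU = {}  # prior per (source type, edge type, target type); missing entries default to 1.0

def hgt_update(h_t, t_type, neighbors):
    """neighbors: list of (h_s, s_type, e_type), one entry per incoming edge."""
    q = matvec(Q_LIN[t_type], h_t)
    logits, messages = [], []
    for h_s, s_type, e_type in neighbors:
        k = matvec(K_LIN[s_type], h_s)
        prior = MU.get((s_type, e_type, t_type), 1.0)
        # Attention logit: K(s) . (W_ATT[e] Q(t)), scaled by the meta-relation prior and sqrt(D)
        logits.append(dot(k, matvec(W_ATT[e_type], q)) * prior / math.sqrt(D))
        # Message: source-type projection, then edge-type transformation
        messages.append(matvec(W_MSG[e_type], matvec(M_LIN[s_type], h_s)))
    # Softmax over all incoming edges of the target, whatever their types
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    z = sum(exps)
    attn = [x / z for x in exps]
    agg = [sum(a * m[i] for a, m in zip(attn, messages)) for i in range(D)]
    # Target-type output projection after ReLU (standing in for sigma), plus a residual
    out = matvec(A_LIN[t_type], [max(0.0, x) for x in agg])
    return attn, [o + h for o, h in zip(out, h_t)]

h_paper = [0.1, 0.2, -0.3, 0.4]
neighbors = [
    ([0.5, -0.1, 0.0, 0.2], "author", "writes"),
    ([0.3, 0.3, 0.3, 0.3], "venue", "publishes"),
    ([-0.2, 0.1, 0.4, 0.0], "paper", "cites"),
]
attn, h_new = hgt_update(h_paper, "paper", neighbors)
```

Because the softmax runs over all incoming edges together, neighbors of different types compete for the same attention mass, while each neighbor's score and message are still shaped by the weights of its own meta relation.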

Relative Temporal Encoding (RTE)

RTE lets HGT incorporate time directly into the model. Rather than slicing the graph into separate snapshots, it keeps all edges together with their timestamps and encodes the time gap between a target node and each source node with sinusoidal functions followed by a learnable linear projection. This lets the model learn structural temporal dependencies, which are crucial for accurately representing evolving graphs.
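The temporal encoding can be sketched as follows. This is a minimal version under stated assumptions: sinusoidal features in the style of Transformer positional encodings, a random matrix standing in for the learned projection (the paper's T-Linear), and timestamps given as plain numbers such as publication years. The function and class names are hypothetical.

```python
import math
import random

def sinusoidal_base(delta_t, d):
    """Sinusoidal features of a time gap: sin on even dimensions, cos on odd ones."""
    return [
        math.sin(delta_t / 10000 ** (j / d)) if j % 2 == 0
        else math.cos(delta_t / 10000 ** (j / d))
        for j in range(d)
    ]

class RelativeTemporalEncoding:
    """Base features followed by a linear projection (random here, learned in practice)."""

    def __init__(self, d, seed=0):
        rng = random.Random(seed)
        self.d = d
        self.weight = [[rng.gauss(0.0, 1.0 / math.sqrt(d)) for _ in range(d)] for _ in range(d)]
        self.bias = [0.0] * d

    def __call__(self, delta_t):
        base = sinusoidal_base(delta_t, self.d)
        return [sum(w * b for w, b in zip(row, base)) + bias
                for row, bias in zip(self.weight, self.bias)]

def add_rte(h_source, t_target, t_source, rte):
    """Shift the source representation by the encoding of the time gap."""
    delta_t = t_target - t_source
    return [h + r for h, r in zip(h_source, rte(delta_t))]

rte = RelativeTemporalEncoding(d=8)
h_cited = [0.1] * 8
h_shifted = add_rte(h_cited, t_target=2020, t_source=2015, rte=rte)
```

Since the encoding depends only on the gap between the target and source timestamps, the same gap produces the same shift wherever it occurs in time, which is what makes the encoding relative rather than absolute.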

Model Training with HGSampling

HGSampling addresses a weakness of existing homogeneous graph sampling methods: applied directly to heterogeneous graphs, they tend to produce sub-graphs that are highly imbalanced across node types. By keeping a separate node budget for each type and using importance sampling based on normalized degrees, HGSampling produces dense, informative sub-graphs, which is vital for training GNNs on large-scale data.
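A simplified sketch of the budget-based sampling loop is shown below. It assumes an undirected adjacency dict, string node types, a fixed number of nodes sampled per type per iteration, and sampling probability proportional to the squared accumulated normalized degree; timestamp handling and other details of the full algorithm are omitted, and `hg_sampling` is a hypothetical name.

```python
import random
from collections import defaultdict

def hg_sampling(graph, node_type, seeds, n_per_type, depth, seed=0):
    """Simplified type-balanced sampling (hypothetical helper).

    graph: dict mapping node -> list of neighbors (assumed undirected)
    node_type: dict mapping node -> type name
    """
    rng = random.Random(seed)
    sampled = set(seeds)
    # budget[type][node] accumulates normalized degree from already-sampled neighbors
    budget = defaultdict(lambda: defaultdict(float))

    def add_to_budget(t):
        by_type = defaultdict(list)
        for s in graph.get(t, []):
            by_type[node_type[s]].append(s)
        for typ, nbrs in by_type.items():
            share = 1.0 / len(nbrs)  # each type's neighbors split one unit of weight
            for s in nbrs:
                if s not in sampled:
                    budget[typ][s] += share

    for t in seeds:
        add_to_budget(t)

    for _ in range(depth):
        chosen = []
        for typ, cands in budget.items():
            nodes = list(cands)
            weights = [cands[s] ** 2 for s in nodes]  # importance ~ squared budget
            for _draw in range(min(n_per_type, len(nodes))):
                i = rng.choices(range(len(nodes)), weights=weights)[0]
                chosen.append(nodes.pop(i))
                weights.pop(i)
        for s in chosen:  # move chosen nodes from the budget into the sample
            del budget[node_type[s]][s]
            sampled.add(s)
        for s in chosen:
            add_to_budget(s)

    # Induced sub-graph: keep only edges whose endpoints were both sampled
    edges = {(u, v) for u in sampled for v in graph.get(u, []) if v in sampled}
    return sampled, edges

GRAPH = {
    "p1": ["a1", "a2", "v1", "p2"], "p2": ["a2", "v1", "p1"], "p3": ["a3", "v2"],
    "a1": ["p1"], "a2": ["p1", "p2"], "a3": ["p3"],
    "v1": ["p1", "p2"], "v2": ["p3"],
}
NODE_TYPE = {n: {"p": "paper", "a": "author", "v": "venue"}[n[0]] for n in GRAPH}
sampled, edges = hg_sampling(GRAPH, NODE_TYPE, ["p1"], n_per_type=1, depth=2)
```

Keeping one budget per node type is what stops a type with many candidates from crowding out the others: each type contributes at most `n_per_type` nodes per iteration, however many candidates of other types are waiting.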

Results

HGT was evaluated on several tasks: paper-field prediction, paper-venue prediction, and author disambiguation. Across all tasks and all three datasets (the CS, Med, and full OAG graphs), the model achieved substantial improvements in NDCG and MRR over leading GNNs such as GCN, GAT, RGCN, HetGNN, and HAN. HGT also achieved this with fewer parameters than the other heterogeneous GNN baselines and with comparable computational efficiency.

Implications and Future Directions

HGT's strong performance highlights its robustness to the complexity of heterogeneous, dynamic graph data. Its ability to automatically identify important implicit meta paths, without manually designed ones, makes it particularly valuable for real-world applications.

Future research could explore HGT's generative capabilities, for example predicting new entities and their attributes within the graph. Pre-training HGT could also improve its performance on tasks with limited labeled data, extending its applicability to domains where annotations are scarce.