Overview of "Algorithmic Fairness" by Dana Pessach and Erez Shmueli

"Algorithmic Fairness," a survey by Dana Pessach and Erez Shmueli, reviews the field of algorithmic fairness, motivated by the growing role AI algorithms play in decision-making in sectors such as healthcare, recruitment, and criminal justice. As reliance on AI grows, so does the need for fair and unbiased algorithms, particularly given the well-publicized cases of algorithmic bias against individuals on the basis of race, gender, and other protected characteristics.

Summary of Key Components

1. Causes of Algorithmic Unfairness:

The paper identifies several sources of algorithmic unfairness, chiefly biases already present in training datasets and biases arising from algorithmic objectives that traditionally optimize for accuracy rather than fairness. Selection bias and features that act as proxies for sensitive attributes compound the problem, which makes it important to check that the data are representative before a model is deployed.
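To make the proxy problem concrete, the following minimal sketch estimates how well a single feature predicts a sensitive attribute. It is an illustration and not a method taken from the paper: the function names `proxy_strength` and `majority_baseline`, the zip-code feature, and the toy records are all assumptions made for this example.

```python
from collections import Counter, defaultdict


def proxy_strength(feature, sensitive):
    """Accuracy of guessing the sensitive attribute from the feature alone.

    For each feature value we guess the most common sensitive value seen
    with it (a majority vote), then count how often that guess is right.
    """
    by_value = defaultdict(Counter)
    for f, s in zip(feature, sensitive):
        by_value[f][s] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return correct / len(feature)


def majority_baseline(sensitive):
    """Accuracy of always guessing the most common sensitive value."""
    return Counter(sensitive).most_common(1)[0][1] / len(sensitive)


# Hypothetical toy records: a zip code and a binary sensitive attribute.
zip_code = ["10001", "10001", "10001", "10001", "20002", "20002", "20002", "20002"]
sensitive = ["x", "x", "x", "y", "y", "y", "y", "x"]

strength = proxy_strength(zip_code, sensitive)
baseline = majority_baseline(sensitive)
print(f"proxy accuracy: {strength:.2f}, baseline: {baseline:.2f}")
```

A proxy accuracy well above the baseline suggests that dropping the sensitive column would not remove its signal from the data, because the remaining feature still carries much of it.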

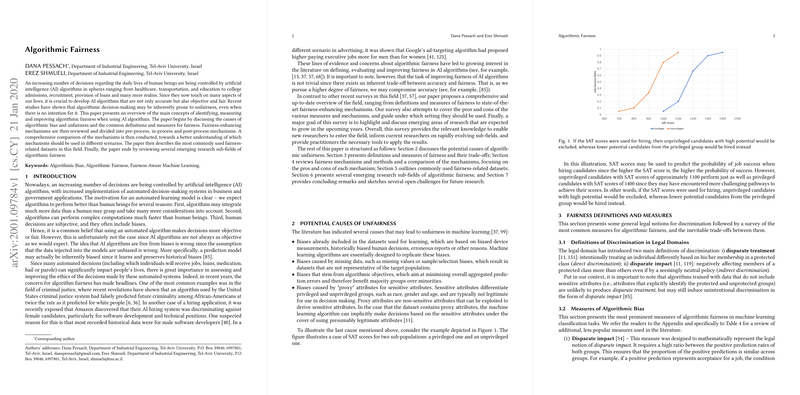

2. Definitions and Measures of Fairness:

The paper details various definitions and measures of fairness, from both legal and algorithmic perspectives. Disparate treatment and disparate impact are introduced as the pivotal legal definitions. In algorithmic terms, measures such as disparate impact, demographic parity, equalized odds, and individual fairness are explored. The paper also discusses the trade-offs between these measures, noting that some fairness definitions are incompatible with one another and that balancing fairness against model accuracy remains difficult.
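As a rough illustration of how the group-level measures differ, the sketch below computes them for binary predictions and two groups. It uses the common textbook formulations rather than the paper's exact notation: demographic parity compares positive-prediction rates, disparate impact takes the ratio of those rates, and equalized odds compares true-positive and false-positive rates. The function names and the toy data are assumptions for this example, and individual fairness is not covered.

```python
def _rate(values):
    """Share of 1s in a list of 0/1 values; 0.0 if the list is empty."""
    return sum(values) / len(values) if values else 0.0


def positive_rate(y_pred, group, g):
    """P(prediction = 1 | group = g)."""
    return _rate([p for p, a in zip(y_pred, group) if a == g])


def conditional_rate(y_true, y_pred, group, g, label):
    """P(prediction = 1 | group = g, true label = label).

    label=1 gives the true-positive rate, label=0 the false-positive rate.
    """
    return _rate([p for t, p, a in zip(y_true, y_pred, group)
                  if a == g and t == label])


def fairness_report(y_true, y_pred, group, unprivileged, privileged):
    pr_u = positive_rate(y_pred, group, unprivileged)
    pr_p = positive_rate(y_pred, group, privileged)
    tpr_gap = (conditional_rate(y_true, y_pred, group, privileged, 1)
               - conditional_rate(y_true, y_pred, group, unprivileged, 1))
    fpr_gap = (conditional_rate(y_true, y_pred, group, privileged, 0)
               - conditional_rate(y_true, y_pred, group, unprivileged, 0))
    return {
        # Demographic parity holds when this difference is 0.
        "demographic_parity_diff": pr_p - pr_u,
        # Disparate impact ratio; 1.0 means equal positive rates.
        "disparate_impact_ratio": pr_u / pr_p if pr_p else float("nan"),
        # Equalized odds holds when both gaps are 0.
        "tpr_gap": tpr_gap,
        "fpr_gap": fpr_gap,
    }


# Hypothetical toy data: group "a" is treated as privileged.
group = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

report = fairness_report(y_true, y_pred, group, unprivileged="b", privileged="a")
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

On this toy data, group "a" receives positive predictions three times as often as group "b". A disparate impact ratio below 0.8 is often flagged under the so-called four-fifths rule, although the appropriate threshold depends on the legal and application context.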

3. Fairness-Enhancing Mechanisms:

Pessach and Shmueli categorize fairness-enhancing mechanisms as pre-process, in-process, and post-process interventions. Pre-process methods alter datasets to reduce bias before training. In-process methods modify algorithms to incorporate fairness metrics during training. Post-process methods, on the other hand, adjust model outputs to meet fairness criteria. The paper further provides guidelines on choosing appropriate mechanisms based on context and intended fairness outcomes.
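The sketch below illustrates two of the three categories on toy data. The specific techniques are choices made for this example and are not claimed to be the ones the paper recommends: the pre-process step uses instance reweighing (each record is weighted by P(group) * P(label) / P(group, label)), and the post-process step applies group-specific decision thresholds to model scores. In-process methods are omitted because they depend on the training algorithm. All names, scores, and thresholds are hypothetical.

```python
from collections import Counter


def reweighing_weights(group, y):
    """Pre-process: weight each record so group and label look independent.

    Assumes every (group, label) combination occurs at least once.
    """
    n = len(y)
    n_group = Counter(group)
    n_label = Counter(y)
    n_joint = Counter(zip(group, y))
    return [n_group[a] * n_label[t] / (n * n_joint[(a, t)])
            for a, t in zip(group, y)]


def weighted_positive_rate(y, group, weights, g):
    """Weighted share of positive labels within group g."""
    num = sum(w for t, a, w in zip(y, group, weights) if a == g and t == 1)
    den = sum(w for a, w in zip(group, weights) if a == g)
    return num / den


def apply_group_thresholds(scores, group, thresholds):
    """Post-process: turn scores into 0/1 decisions with per-group cutoffs."""
    return [1 if s >= thresholds[a] else 0 for s, a in zip(scores, group)]


# Hypothetical toy data with more positive labels in group "a".
group = ["a"] * 4 + ["b"] * 4
y = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(group, y)
rate_a = weighted_positive_rate(y, group, weights, "a")
rate_b = weighted_positive_rate(y, group, weights, "b")
print(f"weighted positive rates: a={rate_a:.2f}, b={rate_b:.2f}")

# Hypothetical model scores; a single cutoff favors group "a".
scores = [0.9, 0.8, 0.7, 0.2, 0.6, 0.4, 0.3, 0.1]
single = apply_group_thresholds(scores, group, {"a": 0.5, "b": 0.5})
adjusted = apply_group_thresholds(scores, group, {"a": 0.75, "b": 0.35})
print("single cutoff:  ", single)
print("adjusted cutoff:", adjusted)
```

After reweighing, both groups have the same weighted positive rate, so a learner that accepts sample weights would see balanced labels. The adjusted thresholds equalize the share of positive decisions across groups, at the cost of needing the sensitive attribute at prediction time.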

4. Datasets and Emerging Research Areas:

The review outlines commonly used datasets for fairness research, such as the ProPublica risk assessment dataset and the UCI Adult Income dataset. The authors also indicate emerging research areas like fair sequential learning, fair adversarial learning, and fair causal learning, all of which contribute to expanding the understanding and methodologies of fairness in AI.

Implications and Future Directions

Practically, this paper serves as an essential resource for researchers aiming to design AI systems that do not perpetuate social inequities. Fairness in AI impacts not only technical fields but also intersects significantly with social sciences and ethics, necessitating cross-disciplinary approaches to research and development.

Theoretically, the survey highlights the ongoing challenges in defining and operationalizing fairness in real-world AI applications. The trade-off between fairness and accuracy remains a significant hurdle, since enforcing a fairness criterion often comes at some cost in predictive performance. Moreover, the interpretability and transparency of fairness measures are crucial, not just for ethical compliance but also for public trust.

The authors suggest the need for continued development of robust fairness frameworks and benchmarks. This recommendation carries extra weight in a rapidly evolving AI landscape, where new applications can raise unforeseen fairness concerns.

Overall, "Algorithmic Fairness" by Pessach and Shmueli effectively consolidates existing knowledge while pointing towards necessary areas of future exploration. It thus provides a solid foundation for both new entrants and experienced researchers committed to advancing fairness in AI.