An Analysis of "Discrimination-aware Network Pruning for Deep Model Compression"

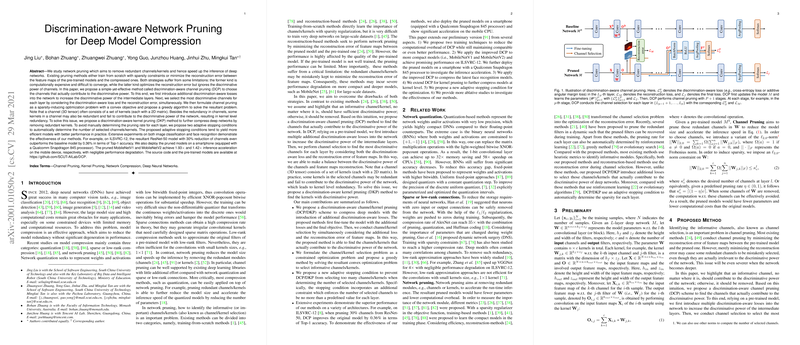

The paper "Discrimination-aware Network Pruning for Deep Model Compression" introduces a network pruning approach that aims to make deep neural networks (DNNs) more efficient without sacrificing their discriminative performance. The method, Discrimination-aware Channel Pruning (DCP), addresses a key limitation of traditional pruning techniques: instead of only preserving each layer's outputs, it explicitly preserves the discriminative power of the model, which makes it a compelling strategy for deep model compression.

Context and Motivation

Deep neural networks have achieved significant breakthroughs in numerous applications, including image classification and face recognition. However, their substantial memory and computational requirements make deployment on resource-constrained devices challenging. Existing channel pruning methods fall mainly into two categories: training from scratch with sparsity constraints, and reconstruction-based methods. The former incur high computational costs and can be difficult to converge; the latter focus solely on minimizing the reconstruction error of each layer's output and therefore fail to maintain the discriminative power of the network.

Discrimination-aware Channel Pruning (DCP)

DCP follows a two-fold strategy: it first enhances the discriminative power of intermediate layers, and it then selects channels by jointly considering the discrimination-aware loss and the reconstruction error. The key components of this approach are:

- Discrimination-aware Loss: Additional losses are attached to intermediate layers of the network so that the features these layers produce remain useful for the classification task. This departs from traditional methods, which ignore how relevant each channel is to discrimination.

- Optimization Framework: Channel selection is formulated as a sparsity-constrained optimization problem. The paper solves it with a greedy algorithm that iteratively selects the channels that best preserve the network's ability to distinguish between classes.

- Adaptive Stopping Criteria: Two adaptive stopping conditions are proposed to automatically determine the number of selected channels or kernels, effectively balancing model complexity with performance.
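The components listed above can be made more concrete with a minimal NumPy sketch of greedy, discrimination-aware channel selection for a single layer. It rests on several simplifying assumptions that do not come from the paper: the layer is linear (`O = X @ W`, standing in for a 1x1 convolution), the joint objective is taken as the weighted sum `L = L_M + lam * L_S` of a reconstruction error `L_M` against the unpruned layer's output `F` and a cross-entropy `L_S` from a fixed, hypothetical linear auxiliary classifier `U`, and channels are scored by the norm of the joint-loss gradient with respect to their weights. The stopping rule (stop once adding a channel changes the loss by less than a fraction `eps` of its initial value) is one plausible adaptive criterion, not necessarily either of the paper's two. All function and parameter names are hypothetical.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)            # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def joint_loss_and_grad(W, X, F, U, Y, lam):
    """L = L_M + lam * L_S and dL/dW for a linear layer O = X @ W (simplification)."""
    n = X.shape[0]
    O = X @ W
    R = F - O                                        # residual vs. unpruned output F
    loss_m = 0.5 * np.sum(R ** 2) / n                # reconstruction error L_M
    P = softmax(O @ U)                               # hypothetical auxiliary classifier U
    loss_s = -np.sum(Y * np.log(P + 1e-12)) / n      # discrimination-aware loss L_S
    grad_m = -X.T @ R / n
    grad_s = X.T @ (((P - Y) / n) @ U.T)
    return loss_m + lam * loss_s, grad_m + lam * grad_s


def greedy_select(X, F, U, Y, lam=1.0, max_channels=8, eps=1e-3,
                  lr=0.1, inner_steps=50):
    c_in, c_out = X.shape[1], F.shape[1]
    W = np.zeros((c_in, c_out))                      # start with no channels selected
    mask = np.zeros(c_in, dtype=bool)
    loss0, _ = joint_loss_and_grad(W, X, F, U, Y, lam)
    prev = cur = loss0
    selected = []
    for _ in range(min(max_channels, c_in)):
        _, G = joint_loss_and_grad(W, X, F, U, Y, lam)
        scores = np.linalg.norm(G, axis=1)           # gradient norm per input channel
        scores[mask] = -np.inf                       # skip already-selected channels
        c = int(np.argmax(scores))
        mask[c] = True
        selected.append(c)
        for _ in range(inner_steps):                 # refit only the selected rows of W
            _, G = joint_loss_and_grad(W, X, F, U, Y, lam)
            W[mask] -= lr * G[mask]
        cur, _ = joint_loss_and_grad(W, X, F, U, Y, lam)
        if abs(prev - cur) / loss0 <= eps:           # assumed relative-change stopping rule
            break
        prev = cur
    return selected, W, loss0, cur


rng = np.random.default_rng(0)
n, c_in, c_out, k = 256, 16, 8, 4
X = rng.normal(size=(n, c_in))                       # synthetic input features
F = X @ rng.normal(size=(c_in, c_out))               # output of the unpruned layer
U = rng.normal(size=(c_out, k)) / np.sqrt(c_out)
Y = np.eye(k)[np.argmax(F @ U, axis=1)]              # synthetic one-hot labels
selected, W, loss0, final = greedy_select(X, F, U, Y)
print("selected channels:", selected)
print(f"loss: {loss0:.3f} -> {final:.3f}")
```

Scoring by gradient norm favours the channel whose inclusion would reduce the joint loss fastest, so a channel that matters to the auxiliary classifier can be chosen even if it contributes little to reconstruction; rows of `W` for unselected channels stay exactly zero, which is what allows them to be removed.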

Empirical Evaluation

The proposed DCP method is evaluated extensively on architectures such as ResNet and MobileNet and on datasets such as CIFAR-10 and ILSVRC-12. The results show that DCP substantially reduces the number of parameters and FLOPs and in some cases even improves accuracy, which suggests that the selected channels retain the most discriminative information.

For instance, pruning 30% of the channels of ResNet-50 not only reduces its computational cost but also improves its Top-1 accuracy by 0.36%. In addition, the pruned MobileNet models run considerably faster on a Qualcomm Snapdragon 845 processor, demonstrating the method's practical applicability in mobile environments.
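A back-of-the-envelope calculation shows why removing channels can cut computation more than proportionally. The sketch below counts multiply-accumulate operations (a common proxy for FLOPs) for a single hypothetical 3x3 convolution with a 28x28 output and 120 input and 120 output channels; these sizes are illustrative assumptions and unrelated to the paper's ResNet-50 figures. Removing an input channel of one layer also removes the matching output channel (filter) of the layer before it, so a layer whose inputs and outputs are both pruned by 30% keeps only about 0.7 x 0.7 = 0.49 of its original cost.

```python
def conv_macs(h_out, w_out, k, c_in, c_out):
    """Multiply-accumulate count of a k x k convolution without bias."""
    return h_out * w_out * k * k * c_in * c_out


full = conv_macs(28, 28, 3, 120, 120)
inputs_pruned = conv_macs(28, 28, 3, 84, 120)   # 30% of input channels removed
both_pruned = conv_macs(28, 28, 3, 84, 84)      # inputs and outputs both pruned by 30%
print(full, inputs_pruned / full, both_pruned / full)  # -> 101606400 0.7 0.49
```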

Discrimination-aware Kernel Pruning (DKP)

Extending the idea further, the paper proposes Discrimination-aware Kernel Pruning (DKP), which removes redundancy at the level of individual kernels rather than whole channels, a finer-grained compression strategy. DKP applies the same principle of preserving discriminative power and shows potential for more aggressive compression without a notable loss of performance.
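The difference in granularity between channel and kernel pruning can be illustrated with a small NumPy sketch. It assumes a convolution weight (and its gradient) laid out as `(c_out, c_in, k, k)` and, as a simplification, scores candidates by the Frobenius norm of a random stand-in gradient and selects them in one shot with top-k, rather than with DKP's actual iterative procedure. Channel pruning must keep or drop a whole column of kernels (all kernels reading one input channel), whereas kernel pruning may keep any individual `k x k` kernel. All names are hypothetical.

```python
import numpy as np


def channel_scores(G):
    """One score per input channel: Frobenius norm over all kernels reading it."""
    return np.sqrt((G ** 2).sum(axis=(0, 2, 3)))      # shape (c_in,)


def kernel_scores(G):
    """One score per individual k x k kernel (each output/input pair)."""
    return np.sqrt((G ** 2).sum(axis=(2, 3)))         # shape (c_out, c_in)


def channel_mask(G, n_keep):
    """Keep the n_keep highest-scoring input channels as whole columns."""
    keep = np.argsort(channel_scores(G))[::-1][:n_keep]
    mask = np.zeros(G.shape[:2], dtype=bool)
    mask[:, keep] = True
    return mask


def kernel_mask(G, n_keep):
    """Keep the n_keep highest-scoring kernels anywhere in the layer."""
    s = kernel_scores(G).ravel()
    keep = np.argsort(s)[::-1][:n_keep]
    mask = np.zeros(s.shape, dtype=bool)
    mask[keep] = True
    return mask.reshape(G.shape[:2])


rng = np.random.default_rng(0)
G = rng.normal(size=(4, 3, 3, 3))   # stand-in gradient: 4 out, 3 in, 3x3 kernels
cm = channel_mask(G, n_keep=2)      # 2 of 3 channels -> 4 x 2 = 8 kernels kept
km = kernel_mask(G, n_keep=8)       # same budget of 8 kernels, chosen freely
print(cm.sum(), km.sum())           # -> 8 8
```

With the same budget of eight kernels, the kernel-level mask can pick the eight highest-scoring kernels anywhere in the layer, while the channel-level mask is restricted to two full columns; this extra freedom is what makes kernel pruning finer-grained.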

Implications and Future Directions

The implications of this research are two-fold: it provides a methodology for deploying neural networks more efficiently on mobile and embedded devices, and it lays the foundation for further advances in discrimination-aware model compression. Future research could combine pruning with quantization, which may yield further gains by reducing bit precision and architectural complexity simultaneously.

In summary, the paper demonstrates that discrimination-aware pruning is a promising way to adapt neural networks to diverse computational budgets while maintaining high performance, and it lays groundwork for continued research into efficient, scalable deep learning models.