Overview of "LayoutLM: Pre-training of Text and Layout for Document Image Understanding"

The paper "LayoutLM: Pre-training of Text and Layout for Document Image Understanding" introduces a pre-trained model that addresses a limitation of text-only NLP models by jointly modeling text and layout information from scanned document images. LayoutLM targets document image understanding tasks such as information extraction, document classification, and form understanding.

Key Contributions

- Multimodal Integration: Traditionally, pre-trained models for NLP focus only on text. LayoutLM, however, integrates text with 2-D layout and image information. This joint modeling is crucial for tasks where spatial relationships impact understanding, such as interpreting forms or complex document layouts.

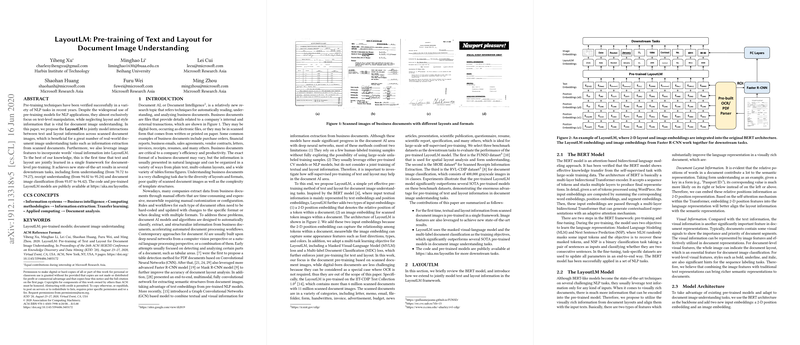

- Model Architecture: The model builds on BERT's architecture, extending its input with 2-D position embeddings that encode each token's bounding box on the page, plus image embeddings produced by Faster R-CNN, which the paper adds at the fine-tuning stage. This allows LayoutLM to process the spatial arrangement of text, which is critical for understanding visually rich documents.

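- Pre-training Objectives: LayoutLM introduces new pre-training tasks, including a Masked Visual-Language Model (MVLM) objective that adapts BERT's masked language modeling: some input tokens are masked while their 2-D position embeddings are kept, so the model predicts each masked token from both its textual context and its location on the page. It also employs a Multi-label Document Classification (MDC) objective to enhance document-level representations.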

- Performance: LayoutLM achieves state-of-the-art results on several benchmarks: form understanding (FUNSD, F1 from 70.72 to 79.27), scanned receipt information extraction (SROIE, F1 from 94.02 to 95.24), and document image classification (RVL-CDIP, accuracy from 93.07 to 94.42). These gains over existing pre-trained models underscore the effectiveness of joint text-layout modeling.
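The input embedding and the MVLM masking described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation. The function names, the embedding dimension, the random initialisation, and the single-step masking rule are assumptions made for the example. Scaling boxes to a 0-1000 grid follows the convention used in public LayoutLM implementations. BERT's 1-D position and segment embeddings are omitted for brevity.

```python
import random

MASK_TOKEN = "[MASK]"  # placeholder string; real tokenizers use a vocabulary id


def normalize_box(box, page_width, page_height, scale=1000):
    """Map a pixel box (x0, y0, x1, y1) to integer coordinates in [0, scale]."""
    x0, y0, x1, y1 = box
    return (
        int(scale * x0 // page_width),
        int(scale * y0 // page_height),
        int(scale * x1 // page_width),
        int(scale * y1 // page_height),
    )


def make_table(num_rows, dim, rng):
    """Build a randomly initialised embedding table (hypothetical helper)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_rows)]


def embed_token(token_id, box, tables):
    """Sum the word embedding with four 2-D position embeddings.

    x0 and x1 are looked up in the shared "x" table, y0 and y1 in the
    shared "y" table, so two tables serve four coordinates.
    """
    x0, y0, x1, y1 = box
    parts = [
        tables["word"][token_id],
        tables["x"][x0],
        tables["y"][y0],
        tables["x"][x1],
        tables["y"][y1],
    ]
    return [sum(values) for values in zip(*parts)]


def mask_for_mvlm(tokens, boxes, mask_prob, rng):
    """Mask tokens at random but leave every bounding box untouched.

    Simplification: a selected token is always replaced by MASK_TOKEN,
    without BERT's random-token and keep-original variants.
    """
    masked_tokens, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked_tokens.append(MASK_TOKEN)
            labels.append(token)  # prediction target
        else:
            masked_tokens.append(token)
            labels.append(None)  # position ignored by the loss
    return masked_tokens, list(boxes), labels


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 4  # toy embedding size, assumed for the example
    tables = {
        "word": make_table(10, dim, rng),
        "x": make_table(1001, dim, rng),
        "y": make_table(1001, dim, rng),
    }
    box = normalize_box((50, 100, 150, 120), page_width=500, page_height=1000)
    print(box, embed_token(3, box, tables))
    print(mask_for_mvlm(["Total", ":", "42.00"], [box, box, box], 0.15, rng))
```

Because the boxes pass through the masking step unchanged, a model trained this way still sees where a masked word sits on the page, which is the signal MVLM adds on top of text-only masked language modeling.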

Implications and Future Directions

The inclusion of layout and visual signals marks a significant advancement in how models can understand and interpret document images. By incorporating visual context, LayoutLM captures more nuanced document features, facilitating automation in business document processing.

The results suggest substantial potential for improving tasks in document AI by leveraging joint pre-training techniques. Future work could focus on scaling the model with more extensive datasets and exploring advanced network architectures that might further benefit from multimodal pre-training. Additionally, expanding the scope to handle more varied and complex documents or integrating additional visual signals could enhance understanding capabilities.

Conclusion

LayoutLM advances document AI by jointly pre-training on text and layout information. Its architecture and pre-training objectives improve results on form understanding, receipt understanding, and document classification, which shows the value of multimodal approaches for document image understanding. The model therefore provides a foundation for future work that bridges the text and visual domains and for more effective automated document processing.