Analysis of "Multilingual is not enough: BERT for Finnish"

In "Multilingual is not enough: BERT for Finnish," Virtanen et al. evaluate pretrained language models applied to Finnish. The focus is on comparing a new Finnish-specific BERT model, FinBERT, against multilingual BERT (M-BERT) across several Finnish NLP tasks.

Evaluation and Results

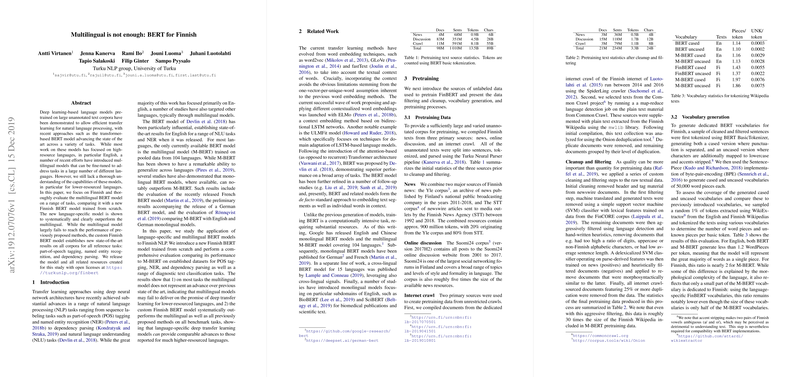

The paper's primary evaluation compares FinBERT and M-BERT across a range of NLP tasks for a lower-resourced language. The tasks include the established reference tasks of part-of-speech (POS) tagging, named entity recognition (NER), and dependency parsing, alongside diagnostic classification tasks. The results consistently favor FinBERT over the multilingual model:

- Part-of-Speech Tagging: FinBERT surpasses M-BERT on the Turku Dependency Treebank, FinnTreeBank, and Parallel UD treebank datasets. Notably, the cased FinBERT model reduces the error rate on FinnTreeBank to less than half that of the previous best result.

- Named Entity Recognition: FinBERT sets a new high-water mark in NER performance for Finnish, outperforming both M-BERT and previously proposed methods.

- Dependency Parsing: In the evaluation using the Udify parser, FinBERT achieves higher labeled attachment scores (LAS) than the state-of-the-art systems from the CoNLL 2018 shared task.

- Text Classification Tasks: The paper also evaluates text classification on datasets created from Yle news and the Ylilauta online discussion forum. FinBERT shows a consistent advantage over M-BERT, especially on informal-domain text.
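To make the dependency parsing metric concrete, the following is a minimal sketch of how unlabeled and labeled attachment scores can be computed when predicted and gold tokens already align one to one. It is not the official CoNLL 2018 evaluation script, which also handles tokenization mismatches; the function name `attachment_scores` and the toy sentence analysis are illustrative assumptions, not material from the paper.

```python
from typing import Sequence, Tuple

# One arc per token: (index of the head token, dependency label).
# Head index 0 conventionally denotes the artificial root.
Arc = Tuple[int, str]


def attachment_scores(gold: Sequence[Arc], pred: Sequence[Arc]) -> Tuple[float, float]:
    """Return (UAS, LAS) as percentages, assuming identical tokenization.

    UAS counts tokens whose predicted head is correct.
    LAS counts tokens whose predicted head AND label are both correct.
    """
    if len(gold) != len(pred):
        raise ValueError("gold and predicted analyses must have the same length")
    if not gold:
        raise ValueError("cannot score an empty sentence")

    head_hits = sum(g[0] == p[0] for g, p in zip(gold, pred))
    labeled_hits = sum(g == p for g, p in zip(gold, pred))
    n = len(gold)
    return 100.0 * head_hits / n, 100.0 * labeled_hits / n


# Hypothetical four-token sentence; the arcs are made up for illustration.
gold = [(2, "nsubj"), (0, "root"), (2, "advmod"), (2, "obl")]
pred = [(2, "nsubj"), (0, "root"), (2, "advmod"), (2, "advmod")]  # last label is wrong

uas, las = attachment_scores(gold, pred)
print(f"UAS = {uas:.1f}, LAS = {las:.1f}")
```

Because LAS requires both the head and the label to match, it can never exceed UAS, which is why it is the stricter of the two figures when comparing parsers.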

These results support the hypothesis that, for lower-resourced languages like Finnish, language-specific models provide substantial gains over multilingual counterparts. The improvements hold across tasks even though Finnish is morphologically rich and complex, a property that makes straightforward transfer from a multilingual model difficult.
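A claim such as "reduces the error rate to less than half" is a statement about relative error, not about the raw accuracy difference. The sketch below shows the arithmetic; the helper name `relative_error_reduction` and the two accuracy values are hypothetical and chosen only for illustration, they are not figures from the paper.

```python
def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Fraction of the baseline's errors removed by the new model.

    Both arguments are accuracies in percent (0 to 100).
    """
    baseline_err = 100.0 - baseline_acc
    new_err = 100.0 - new_acc
    if baseline_err <= 0.0:
        raise ValueError("baseline has no errors to reduce")
    return (baseline_err - new_err) / baseline_err


# Hypothetical accuracies, NOT results reported by Virtanen et al.
baseline_acc = 96.0  # error rate 4.0%
new_acc = 98.5       # error rate 1.5%

reduction = relative_error_reduction(baseline_acc, new_acc)
remaining = 1.0 - reduction  # share of the baseline's errors that remain

print(f"relative error reduction: {reduction:.3f}")
print(f"remaining error share:    {remaining:.3f}")
```

With these made-up numbers, a gain of only 2.5 accuracy points removes well over half of the baseline's errors, which is why near-ceiling tasks such as POS tagging are often compared by error rate.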

Implications and Future Directions

This research has several implications:

- Language-Specific Models: For languages with resources comparable to or greater than those of Finnish, language-specific models should be considered for NLP applications. The success of FinBERT suggests similar advantages for many other languages.

- Data Quality and Domain Relevance: The authors highlight the importance of pretraining data quality and domain characteristics, which significantly impact model performance. This reinforces the need for careful data curation and domain-specific considerations in future model training efforts.

- Generalization Beyond Finnish: While the benefits for Finnish are evident, extending the approach to additional languages could yield similar gains. Because BERT models share the same architecture, replicating the method should be feasible wherever sufficient language data is available.

Future work could adapt FinBERT's training methodology to other languages and explore the challenges specific to each linguistic context, expanding the set of high-performance models available to the NLP community.

Conclusion

By building a Finnish-specific BERT model, Virtanen et al. provide a critical comparison of multilingual and language-specific BERT models. Their findings underscore the limitations of multilingual models such as M-BERT and highlight the value of dedicating resources to language-specific models for non-English languages. By providing open access to FinBERT and related resources, the authors also advance NLP capabilities for Finnish and set a precedent for similar efforts in other languages.