Cross-Lingual Transferability of Monolingual Representations

The paper "On the Cross-lingual Transferability of Monolingual Representations" analyzes the cross-lingual capabilities of monolingual language models, focusing on state-of-the-art transformer-based masked language models (MLMs) such as BERT.

Key Contributions and Hypotheses

The research tests the prevailing hypothesis that cross-lingual generalization in multilingual models like multilingual BERT (mBERT) arises from the use of a shared subword vocabulary and joint training across multiple languages. The primary contributions of the paper include:

- Alternative Approach for Cross-Lingual Transfer:

- The authors propose an approach that transfers a monolingual language model to a new language at the lexical level by learning a new embedding matrix while freezing all other model parameters.

- This method diverges from the norm as it does not rely on a shared vocabulary or joint multilingual training.

- Benchmark Comparisons:

- The approach is evaluated against several benchmarks, including XNLI, MLDoc, and PAWS-X. It demonstrates competitive performance when compared to mBERT and other joint multilingual models.

- New Dataset: XQuAD:

- The paper introduces the Cross-lingual Question Answering Dataset (XQuAD), consisting of 240 paragraphs and 1190 question-answer pairs translated into ten languages, offering a rigorous test for cross-lingual abilities.

- Empirical Findings:

- The research undermines the common assumptions about multilingual models' reliance on shared subword vocabularies and joint training, showing that deep monolingual models inherently learn abstractions that generalize across languages.

Experimental Details

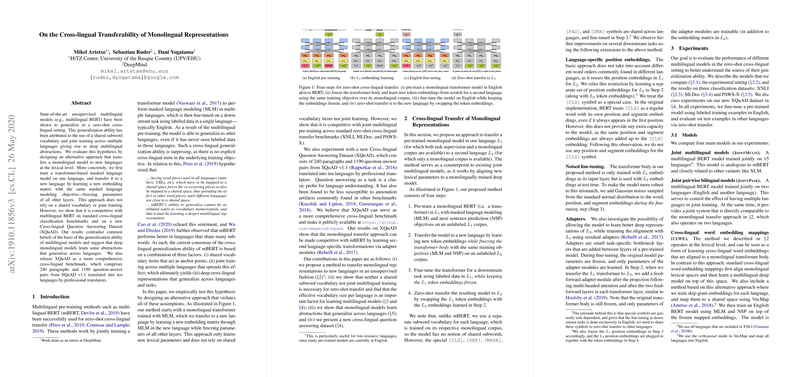

The paper's methodology includes training a monolingual transformer-based BERT in one language (English), followed by transferring the model to new languages. The detailed steps are:

- Pre-train:

- A monolingual transformer model is pre-trained using MLM and next sentence prediction (NSP) objectives on a corpus in the source language.

- Vocabulary Training:

- New token embeddings for the target language are learned by freezing the transformer's parameters and training a new, target-specific embedding matrix with the same MLM and NSP objectives on an unlabeled target-language corpus.

- Fine-Tuning:

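- The model is fine-tuned on labeled source-language data for downstream tasks, with the source-language token embeddings frozen during this phase so that the transformer body stays compatible with the separately learned target-language embeddings.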

- Zero-Shot Transfer:

- The model, fine-tuned on the source language, is then applied to the target language by swapping the token embeddings, enabling zero-shot transfer.
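
The four steps above can be sketched as a training schedule that records which parameter groups are updated at each stage. This is a minimal illustration, not the authors' implementation: the group names (`emb_src`, `emb_tgt`, `body`, `task_head`) and the `Stage` structure are hypothetical, and the sketch assumes the task-specific output layer is trained together with the transformer body during fine-tuning.

```python
from dataclasses import dataclass

# Hypothetical parameter-group names; they are not taken from the paper's code.
GROUPS = ("emb_src", "emb_tgt", "body", "task_head")


@dataclass(frozen=True)
class Stage:
    name: str
    data: str                  # corpus consumed by the stage
    trainable: frozenset       # parameter groups updated in the stage
    active_embeddings: str     # embedding matrix plugged into the body


SCHEDULE = [
    # Step 1: monolingual pre-training in the source language.
    Stage("pretrain", "unlabeled source corpus",
          frozenset({"emb_src", "body"}), "emb_src"),
    # Step 2: learn target-language embeddings with the body frozen.
    Stage("learn_target_embeddings", "unlabeled target corpus",
          frozenset({"emb_tgt"}), "emb_tgt"),
    # Step 3: fine-tune on the source-language task, source embeddings frozen.
    Stage("fine_tune", "labeled source task data",
          frozenset({"body", "task_head"}), "emb_src"),
    # Step 4: no training; swap in the target embeddings for evaluation.
    Stage("zero_shot", "target task test data",
          frozenset(), "emb_tgt"),
]


def not_updated(stage):
    """Return the parameter groups that receive no updates in this stage."""
    return sorted(set(GROUPS) - stage.trainable)


for stage in SCHEDULE:
    trainable = ", ".join(sorted(stage.trainable)) or "nothing"
    print(f"{stage.name}: train {trainable}; "
          f"embeddings in use: {stage.active_embeddings}")
```

The point of the schedule is that the transformer body never sees target-language labeled data, and the two embedding matrices are never trained jointly. The only link between the languages is the shared, frozen body.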

Results and Analysis

Performance on Benchmarks:

- The proposed model demonstrated strong performance, often rivalling mBERT and joint multilingual models on tasks like XNLI, MLDoc, and PAWS-X.

- The vocabulary-size experiments showed that a larger vocabulary improves the performance of joint multilingual models, indicating that the effective vocabulary size per language is crucial for achieving strong results.

Insights from XQuAD:

- The new dataset XQuAD underscored that the proposed monolingual transfer method could still be effective for complex tasks like question answering, albeit with some performance gaps compared to joint models.

- Language-specific position embeddings and adapter modules yielded further improvements, reinforcing the adaptability of the proposed method.
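
To make the adapter idea concrete, the following is a minimal sketch of a generic bottleneck adapter with one instance per language. It is an illustration under assumptions rather than the configuration used in the paper: the class name `BottleneckAdapter`, the sizes, the ReLU activation, and the zero initialization of the up-projection are all choices made here for clarity.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, x) for x in vector]


class BottleneckAdapter:
    """Residual bottleneck layer: h + W_up * relu(W_down * h)."""

    def __init__(self, hidden_size, bottleneck_size, seed=0):
        rng = random.Random(seed)
        # Down-projection: bottleneck_size x hidden_size, small random values.
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
                     for _ in range(bottleneck_size)]
        # Up-projection: hidden_size x bottleneck_size, initialized to zero
        # so that the adapter starts out as the identity function.
        self.up = [[0.0] * bottleneck_size for _ in range(hidden_size)]

    def __call__(self, hidden):
        update = matvec(self.up, relu(matvec(self.down, hidden)))
        return [h + u for h, u in zip(hidden, update)]


# One adapter per language (hypothetical language codes); the shared
# transformer body would call the adapter of the language being processed.
adapters = {
    "en": BottleneckAdapter(hidden_size=4, bottleneck_size=2, seed=0),
    "es": BottleneckAdapter(hidden_size=4, bottleneck_size=2, seed=1),
}

hidden = [0.5, -1.0, 2.0, 0.25]
print(adapters["es"](hidden))  # unchanged until the up-projection is trained
```

Because each adapter adds only a small number of parameters on top of the frozen body, a new language can receive its own capacity without altering what was learned for the source language.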

Implications and Future Directions

Practically, the findings suggest that it is feasible to transfer monolingually trained models to new languages without the need for joint multilingual corpora, which could be advantageous for low-resource languages. The approach also opens pathways for lifelong learning applications where models need continual updates to incorporate new languages without retraining from scratch.

Theoretically, the results challenge the conventional beliefs about the necessity of shared subword vocabularies and joint training in achieving cross-lingual representations. This forms a basis for future research to explore more nuanced mechanisms of multilingual representation learning.

Future research directions could focus on:

- Enhancing the transfer mechanism, particularly for syntactically diverse languages.

- Investigating the integration of monolingual and multilingual models for more robust cross-lingual NLP systems.

- Expanding the scope of evaluation benchmarks to include a wider variety of languages and tasks.

Conclusion

This paper offers significant insights into cross-lingual representation learning, presenting strong evidence against the necessity of joint training and shared vocabularies in multilingual models. The introduction of XQuAD further enriches the resources available for assessing cross-lingual capabilities. These contributions lay the groundwork for more flexible, scalable, and inclusive approaches to developing multilingual NLP systems.