Reward Optimization in Reinforcement Learning for Summarization

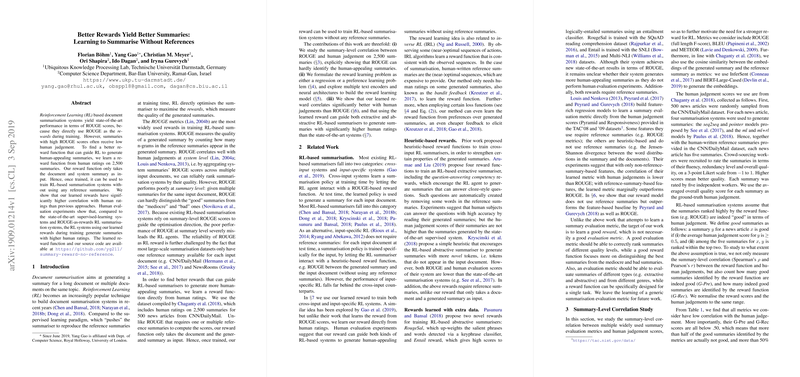

The paper "Better Rewards Yield Better Summaries: Learning to Summarise Without References" proposes improving reinforcement learning (RL) based document summarization by improving the reward function. Existing RL-based systems typically use ROUGE scores as rewards because ROUGE correlates with human judgments at the system level. At the level of individual summaries, however, a high ROUGE score does not reliably indicate a summary that human readers rate highly.

Main Contributions

The authors learn a reward function directly from human ratings of summaries. Once trained, the reward scores a summary without needing a reference summary, so an RL summarizer guided by it can be trained without references. The learned reward correlates more strongly with human judgments than traditional metrics such as ROUGE, BLEU, and METEOR.
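The difference between system-level and summary-level correlation can be made concrete with a small sketch. The scores below are invented toy numbers, not results from the paper, and the helper names (`pearson`, `system_level`, `summary_level`) are hypothetical; the paper's exact correlation protocol may differ from this simplified version.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length score lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)

def column_means(rows):
    """Average each system's score over all documents."""
    return [sum(col) / len(col) for col in zip(*rows)]

def system_level(metric, human):
    """Correlate per-system averages of the metric with per-system human averages."""
    return pearson(column_means(metric), column_means(human))

def summary_level(metric, human):
    """Correlate metric and human scores within each document, then average."""
    per_doc = [pearson(m, h) for m, h in zip(metric, human)]
    return sum(per_doc) / len(per_doc)

# Toy data (assumption): rows are documents, columns are summarization systems.
metric_scores = [[1, 2, 3], [1, 2, 3], [1, 2, 3]]
human_scores = [[3, 1, 2], [1, 3, 2], [1, 2, 5]]

sys_corr = system_level(metric_scores, human_scores)
sum_corr = summary_level(metric_scores, human_scores)
print(f"system-level: {sys_corr:.2f}, summary-level: {sum_corr:.2f}")
```

In this toy setup the metric tracks the per-system averages closely while agreeing far less with the human ratings of individual summaries, which is the gap that matters when the metric is used as a per-summary reward.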

Key Innovations:

- Reward Learning: The reward function is learned from human ratings of 2,500 summaries. These ratings are the training signal for a reward model that steers summarizers toward output that human readers rate highly.

- Experimental Evaluation: The learned reward is compared against traditional metrics. Summaries from systems trained with the learned reward receive higher ratings from human evaluators than summaries from systems trained with traditional metrics as rewards.

- Neural Architectures: Several architectures were explored for the reward model, including Multi-Layer Perceptron (MLP) models on top of different encoders such as CNN-RNN, PMeans-RNN, and BERT. The MLP with a BERT encoder correlated particularly closely with human judgments.

- Practical Applications: Using the learned reward to train RL-based summarizers improved human-perceived summary quality, showing that RL can be guided effectively by rewards derived from human feedback rather than by reference-based metrics.
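As a rough illustration of how a reference-free reward can drive an RL summarizer, the sketch below runs a REINFORCE-style update on a toy extractive policy that picks one sentence as the summary. Everything here is an assumption made for illustration: `toy_reward` is a hypothetical word-coverage stand-in for a learned reward (the paper's reward is a trained neural model), and the single-sentence softmax policy, learning rate, and baseline are not the paper's setup. The point is only that the reward takes the document and the candidate summary, and no reference.

```python
import math
import random

def toy_reward(document, summary):
    """Hypothetical stand-in for a learned reward: uses only the source
    document and the candidate summary, never a reference summary."""
    doc_words = set(" ".join(document).lower().split())
    sum_words = set(summary.lower().split())
    return len(doc_words & sum_words) / len(doc_words)

def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return [e / z for e in exps]

def train_policy(document, reward_fn, steps=3000, lr=0.2, seed=0):
    """REINFORCE with a moving-average baseline over one logit per sentence."""
    rng = random.Random(seed)
    logits = [0.0] * len(document)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        # Sample a one-sentence summary from the current policy.
        action = rng.choices(range(len(document)), weights=probs)[0]
        reward = reward_fn(document, document[action])
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # Gradient of log pi(action) w.r.t. logit i is 1[i == action] - probs[i].
        for i in range(len(logits)):
            indicator = 1.0 if i == action else 0.0
            logits[i] += lr * advantage * (indicator - probs[i])
    return softmax(logits)

document = [
    "the cat sat",
    "a storm hit the coastal city on monday",
    "officials said",
]
probs = train_policy(document, toy_reward)
best = max(range(len(document)), key=lambda i: probs[i])
print("selected summary:", document[best])
```

Swapping `toy_reward` for a trained reward model would leave the training loop unchanged, which is what makes the reward function the natural place to inject human feedback.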

Implications and Future Directions

The findings have both theoretical and practical implications for natural language processing. Theoretically, they challenge the traditional reliance on ROUGE and similar metrics and argue for learned rewards that reflect human preferences more accurately. Practically, they offer a way to improve summarization systems with relatively little human input compared to the large datasets typically required for training supervised systems.

Several directions for future research follow from this work:

- Generalization to Other Tasks: Learning reward functions from human feedback could be extended beyond summarization to other generative tasks such as translation and dialogue systems.

- Dataset Expansion and Diversity: Exploring broader and more diverse datasets for reward learning could enhance the robustness and applicability of the approach across different genres and applications.

- Incorporation of Different Feedback Types: Different kinds of human feedback (e.g., pairwise preferences versus direct scoring) may affect the quality and applicability of the learned rewards, and comparing them is worth exploring.
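The two feedback types mentioned in the last item map onto two different training losses. The sketch below contrasts a squared-error regression step on direct ratings with a Bradley-Terry style logistic step on pairwise preferences. It is a minimal illustration under stated assumptions: the linear scorer, the two-dimensional feature vectors, and the toy ratings are all hypothetical, whereas the paper's reward models are neural networks over learned text encodings.

```python
import math

def score(w, x):
    """Linear reward: dot product of weights and summary features."""
    return sum(wi * xi for wi, xi in zip(w, x))

def regression_step(w, x, rating, lr=0.1):
    """One SGD step on the squared error between predicted reward and a rating."""
    err = score(w, x) - rating
    return [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]

def preference_step(w, x_win, x_lose, lr=0.1):
    """One SGD step on the logistic loss -log sigmoid(s_win - s_lose)."""
    margin = score(w, x_win) - score(w, x_lose)
    p_win = 1.0 / (1.0 + math.exp(-margin))
    # Gradient of the loss w.r.t. w is -(1 - p_win) * (x_win - x_lose).
    return [wi + lr * (1.0 - p_win) * (a - b) for wi, a, b in zip(w, x_win, x_lose)]

def ranking(w, items):
    """Indices of items sorted from highest to lowest predicted reward."""
    return sorted(range(len(items)), key=lambda i: -score(w, items[i]))

# Toy data (assumption): hypothetical [coverage, redundancy] features and ratings.
features = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]
ratings = [0.9, 0.5, 0.1]

# Direct scoring: fit the ratings themselves.
w_reg = [0.0, 0.0]
for _ in range(500):
    for x, r in zip(features, ratings):
        w_reg = regression_step(w_reg, x, r)

# Preferences: only the ordering implied by the ratings is used.
pairs = [(i, j) for i in range(3) for j in range(3) if ratings[i] > ratings[j]]
w_pref = [0.0, 0.0]
for _ in range(500):
    for i, j in pairs:
        w_pref = preference_step(w_pref, features[i], features[j])

print("regression ranking:", ranking(w_reg, features))
print("preference ranking:", ranking(w_pref, features))
```

The regression model tries to reproduce the rating scale, while the preference model only learns which summary should score higher, so the two can agree on a ranking while producing rewards on very different scales.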

In conclusion, the paper refines RL-based summarization by learning the reward directly from human judgments. This challenges the conventional reliance on reference-based evaluation metrics and points toward summarization systems optimized for human-centric rewards.