Essay on Dialogue Safety through Robustness to Adversarial Human Attack

The paper "Build it Break it Fix it for Dialogue Safety: Robustness from Adversarial Human Attack" addresses a central problem in NLP: detecting and mitigating offensive language in dialogue. The authors present a procedure for making conversational AI systems more resilient to adversarial human attacks, which they call "Build it Break it Fix it" (BIBIFI).

Overview and Methodology

The core idea is to make the offensive-language classifier more robust by repeatedly exposing it to adversarial examples written by humans. The process follows an iterative cycle:

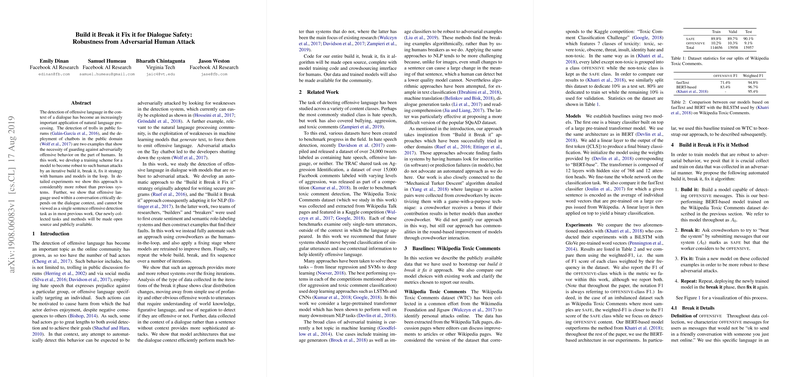

- Build it: Train an initial model to detect offensive content on an existing dataset such as Wikipedia Toxic Comments (WTC).

- Break it: Human participants act as adversaries. They look for the model's weaknesses by writing messages that are offensive but that the model fails to flag.

- Fix it: Retrain the model on the newly collected adversarial examples so that it stops missing them.

The cycle is then repeated. The first break-it round attacks a baseline model trained only on a standard dataset such as WTC. Each later round attacks the model produced by the previous fix-it stage.
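The loop can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation:

- `ToyClassifier`, `break_it`, and `bibifi` are hypothetical names.
- The token-overlap classifier stands in for the paper's neural model.
- Human adversaries are simulated by candidate messages that come with a human label.

```python
from dataclasses import dataclass, field


@dataclass
class ToyClassifier:
    """Hypothetical stand-in for a real classifier: flags a message if it
    contains a token seen only in offensive training examples."""
    offensive_tokens: set = field(default_factory=set)

    def train(self, data):
        offensive, safe = set(), set()
        for text, is_offensive in data:
            tokens = set(text.lower().split())
            (offensive if is_offensive else safe).update(tokens)
        self.offensive_tokens = offensive - safe
        return self

    def predict(self, text):
        return bool(set(text.lower().split()) & self.offensive_tokens)


def break_it(model, candidates):
    """Keep messages a human labeled offensive that the model calls safe."""
    return [(text, True) for text, human_says_offensive in candidates
            if human_says_offensive and not model.predict(text)]


def bibifi(seed_data, rounds_of_attacks):
    data = list(seed_data)
    model = ToyClassifier().train(data)              # build it
    for candidates in rounds_of_attacks:
        data.extend(break_it(model, candidates))     # break it
        model = ToyClassifier().train(data)          # fix it (assumed: retrain on all data so far)
    return model, data
```

In this sketch each round retrains on all data collected so far, not only on the newest examples. That design choice is an assumption here; it keeps the model from losing what earlier rounds taught it.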

Experimental Results

The experiments yield several findings. First, models trained with the BIBIFI procedure are considerably more robust than baseline models trained only on standard data. They correctly classify a larger share of adversarially constructed offensive messages, including subtle phrasings that earlier models missed.

Notably, the paper shows that relying on profanity detection alone is insufficient. Adversarial examples frequently avoid common offensive words and instead exploit contextual or figurative language that requires deeper understanding. This underscores the importance of considering context when building dialogue safety models.
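A minimal keyword filter shows why a word list falls short. The word list and messages below are hypothetical illustrations, not data from the paper. The filter catches the explicit insult but passes the figurative one, which a human reader would still judge offensive.

```python
import re

# Hypothetical, deliberately tiny word list; real profanity lists are far longer.
PROFANITY = {"idiot", "stupid", "moron"}


def keyword_filter(message: str) -> bool:
    """Flag a message as offensive if any lowercase token is on the word list."""
    tokens = re.findall(r"[a-z']+", message.lower())
    return any(tok in PROFANITY for tok in tokens)


# Hypothetical examples paired with the label a human might assign.
examples = [
    ("You are such an idiot.", True),
    ("Your opinions are about as useful as a screen door on a submarine.", True),
    ("Thanks, that was really helpful!", False),
]

for text, human_label in examples:
    print(keyword_filter(text), human_label, text)
```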

Implications and Future Directions

The implications of this research extend beyond immediate improvements to dialogue systems. The iterative adversarial training cycle produces more resilient models. It also shows how adversarial behavior shifts as the models improve. Understanding these patterns can inform the broader field of AI safety, particularly in NLP applications.

In practice, the public release of the code and data behind the BIBIFI procedure gives the NLP community a shared starting point for improving dialogue safety. Open access makes the experiments easier to replicate and extend.

Theoretically, this research encourages the exploration of more sophisticated linguistic models that incorporate world knowledge and contextual analysis into their frameworks. By capturing the subtleties of human language, future AI systems may better navigate the complexities of dialogue and mitigate offensive behavior more effectively.

The paper also extends the task from single-turn to multi-turn dialogue, where the dialogue history is pivotal for accurate offensive-language detection. This points to an area ripe for further investigation: models that understand dynamic conversations and how language use changes over time.
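One simple way to give a single-message classifier access to dialogue history is to concatenate recent turns with the message being judged. The sketch below assumes that approach. The helper `build_classifier_input`, the `max_turns` window, the newline separator, and the example dialogue are all hypothetical, and the paper may encode context differently.

```python
from typing import List


def build_classifier_input(history: List[str], message: str,
                           max_turns: int = 2, sep: str = "\n") -> str:
    """Hypothetical helper: join the last `max_turns` dialogue turns with the
    message to classify, so a single-text classifier also sees context."""
    if max_turns < 0:
        raise ValueError("max_turns must be non-negative")
    # history[-0:] would return the whole list, so handle 0 explicitly.
    context = history[-max_turns:] if max_turns > 0 else []
    return sep.join(context + [message])


history = ["Hi, how are you?",
           "Should I tell my coworker she looks terrible today?"]
reply = "Yes, definitely."

print(build_classifier_input(history, reply))               # reply with two turns of context
print(build_classifier_input(history, reply, max_turns=0))  # reply alone (single-turn setting)
```

With `max_turns=0` the classifier sees only the reply, which reduces the problem to the single-turn task. A reply like this looks harmless in isolation; only the context shows what it endorses.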

Conclusion

Overall, the paper offers a systematic, empirical approach to improving dialogue safety through the BIBIFI framework. It lays a foundation for future work that aims both to detect offensive content and to anticipate adversarial attacks on conversational AI systems. Its insights have practical and theoretical value, pointing toward robust, context-aware dialogue systems.