An Analysis of ERNIE 2.0: A Continual Pre-Training Framework for Language Understanding

The paper "ERNIE 2.0: A Continual Pre-Training Framework for Language Understanding" by Yu Sun et al. presents an approach to improving pre-trained language models. Earlier models such as BERT rely on a few fixed pre-training objectives. The proposed framework instead adds new pre-training tasks incrementally and learns them through continual multi-task learning, with the goal of capturing lexical, syntactic, and semantic information from large text corpora.

Key Contributions

The paper makes three main contributions:

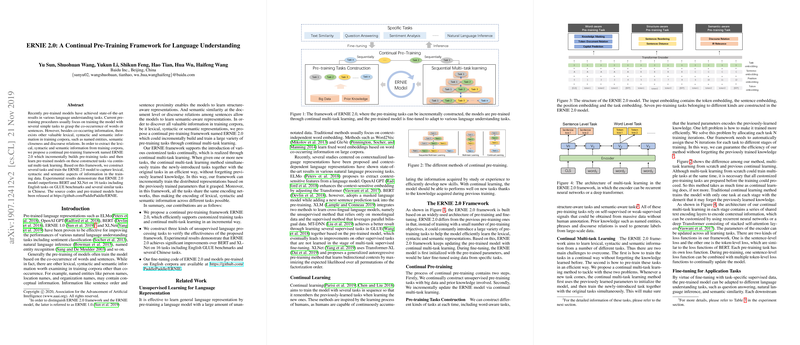

- Continual Multi-Task Learning Framework: ERNIE 2.0 continually adds new pre-training tasks and learns them while retaining what it learned from earlier tasks. This contrasts with static pre-training approaches, whose objectives are fixed before training begins.

- Varied Pre-training Tasks: The framework supports diverse pre-training tasks that capture more than simple co-occurrence statistics. These tasks fall into three categories: word-aware, structure-aware, and semantic-aware. Examples include Knowledge Masking (word-aware), Sentence Reordering (structure-aware), and IR Relevance prediction (semantic-aware), each intended to help the model represent a different aspect of language.

- Empirical Validation: The model was evaluated on 16 tasks, including the English GLUE benchmark and several Chinese NLP tasks. The reported results show ERNIE 2.0 outperforming existing models such as BERT and XLNet on these tasks.
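To make the Sentence Reordering task more concrete, the sketch below follows the paper's description: a paragraph is randomly split into 1 to m segments, the segments are shuffled, and the model must classify which permutation was applied, a k-class problem with k = 1! + 2! + ... + m!. Splitting at sentence boundaries, the function names, and the class-numbering scheme are illustrative assumptions, not the authors' implementation.

```python
import itertools
import math
import random

def num_reorder_classes(m):
    # k = 1! + 2! + ... + m!: one class per permutation of n segments, for n = 1..m
    return sum(math.factorial(n) for n in range(1, m + 1))

def build_label_table(m):
    # Map (segment count, permutation) to a class id (hypothetical numbering)
    table = {}
    for n in range(1, m + 1):
        for perm in itertools.permutations(range(n)):
            table[(n, perm)] = len(table)
    return table

def make_reorder_example(sentences, m, table, rng):
    # Assumption: segments are contiguous runs of whole sentences
    n = rng.randint(1, min(m, len(sentences)))
    cuts = sorted(rng.sample(range(1, len(sentences)), n - 1))
    bounds = [0] + cuts + [len(sentences)]
    segments = [sentences[bounds[i]:bounds[i + 1]] for i in range(n)]
    # perm[j] is the original index of the segment placed at position j
    perm = tuple(rng.sample(range(n), n))
    shuffled = [segments[i] for i in perm]
    return shuffled, table[(n, perm)]

table = build_label_table(3)
shuffled, label = make_reorder_example(["s1", "s2", "s3", "s4"], 3, table, random.Random(0))
print(len(table), label, shuffled)
```

The label fully determines how to undo the shuffle, which is what makes reordering a well-posed classification target.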

Experimental Setup and Results

ERNIE 2.0 uses a multi-layer Transformer encoder, as BERT and other pre-trained models do. Its pre-training corpora include Wikipedia, BookCorpus, Reddit, and other sources, chosen to cover a broad range of domains.

English Tasks

Results on the General Language Understanding Evaluation (GLUE) benchmark show ERNIE 2.0’s gains over BERT. On CoLA, ERNIE 2.0 achieved a Matthews correlation coefficient of 63.5, compared with BERT’s 60.5. On QNLI, it reached an accuracy of 94.6, against BERT’s 92.7. ERNIE 2.0 also improved on BERT on other tasks, such as SST-2 and MNLI.
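CoLA scores are reported as the Matthews correlation coefficient, which, unlike accuracy, stays informative when classes are imbalanced. A minimal pure-Python sketch for binary labels is below; `sklearn.metrics.matthews_corrcoef` computes the same quantity. GLUE reports the value multiplied by 100, so a score of 63.5 corresponds to 0.635.

```python
import math

def matthews_corrcoef(y_true, y_pred):
    """Binary MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention: return 0.0 when any marginal is empty (the formula is undefined)
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom

labels = [1, 0, 1, 1, 0, 0, 1, 0]
preds = [1, 0, 0, 1, 0, 1, 1, 0]
print(round(100 * matthews_corrcoef(labels, preds), 1))  # GLUE-style scale
```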

Chinese Tasks

ERNIE 2.0 also performs strongly on Chinese NLP tasks. On the CMRC 2018 machine reading comprehension dataset, it obtained an F1 score of 89.9, compared with 85.1 for ERNIE 1.0. On the MSRA-NER named entity recognition task, it recorded an F1 score of 95.0.
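The MSRA-NER score is an F1 over predicted entities. A common convention, assumed here because the summary does not describe the exact evaluation script, counts an entity as correct only when its span and type both exactly match the gold annotation. The sketch assumes BIO tags; `bio_to_spans` and `entity_f1` are hypothetical helper names.

```python
def bio_to_spans(tags):
    """Convert BIO tags (O, B-X, I-X) into (start, end, type) spans; end is exclusive."""
    spans = []
    start, label = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel "O" closes a trailing entity
        continues = tag.startswith("I-") and tag[2:] == label
        if not continues:
            if start is not None:
                spans.append((start, i, label))
            start, label = None, None
            if tag != "O":
                # "B-X" starts an entity; a stray "I-X" is also treated as a start
                start, label = i, tag[2:]
    return spans

def entity_f1(gold, pred):
    """Exact-match entity-level F1 over collections of hashable span tuples."""
    gold_set, pred_set = set(gold), set(pred)
    correct = len(gold_set & pred_set)
    if correct == 0:
        return 0.0
    precision = correct / len(pred_set)
    recall = correct / len(gold_set)
    return 2 * precision * recall / (precision + recall)

gold = bio_to_spans(["B-PER", "I-PER", "O", "B-LOC", "I-LOC"])
pred = bio_to_spans(["B-PER", "I-PER", "O", "B-ORG", "I-ORG"])
print(entity_f1(gold, pred))  # 0.5: the PER span matches, the mistyped LOC span does not
```

For corpus-level scoring, each span tuple would also carry a sentence index so that spans from different sentences stay distinct.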

Methodological Insights

Continual Pre-Training: When a new task is introduced, the framework initializes the model from the previously learned parameters and trains the new task together with the earlier ones, allocating training iterations across the active tasks at each stage. This is how it retains previously acquired knowledge. It contrasts with naive continual learning, in which tasks are trained one after another and the model risks catastrophically forgetting earlier tasks.
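One way to picture this sequential multi-task learning is as a staged schedule: each stage introduces one new task, starts from the previous stage's parameters, and interleaves training iterations over every task introduced so far. The sketch below builds such a schedule. The even split of each task's iteration budget across the stages in which it is active is a hypothetical allocation rule chosen for simplicity; the paper's own allocation may differ, and model training itself is omitted.

```python
import random

def allocate_iterations(num_tasks, iters_per_task):
    """Return plan[stage][task] -> iterations, where task t is introduced at stage t.

    Hypothetical rule: each task's budget is split as evenly as possible
    across all stages from its introduction to the final stage.
    """
    plan = [{} for _ in range(num_tasks)]
    for task in range(num_tasks):
        stages = range(task, num_tasks)
        base, extra = divmod(iters_per_task, len(stages))
        for i, stage in enumerate(stages):
            plan[stage][task] = base + (1 if i < extra else 0)
    return plan

def stage_order(stage_plan, rng):
    """Interleave one stage's iterations so earlier tasks keep being revisited."""
    order = [task for task, n in stage_plan.items() for _ in range(n)]
    rng.shuffle(order)
    return order

plan = allocate_iterations(num_tasks=3, iters_per_task=12)
for stage, stage_plan in enumerate(plan):
    # In a real run, stage k would start from the parameters learned in stage k - 1.
    order = stage_order(stage_plan, random.Random(stage))
    print(stage, stage_plan, order[:6])
```

Interleaving old and new tasks within each stage, rather than running them back to back, is what keeps earlier objectives in the gradient signal and guards against forgetting.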

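Document- and Discourse-Level Tasks: Token-Document Relation prediction asks whether a token in a segment also appears in other segments of the same document, and Sentence Distance classifies a sentence pair as adjacent, non-adjacent within the same document, or drawn from different documents. These tasks are intended to help the model encode document-level and discourse-level relations, which matter for tasks that depend on document context.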
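The labels for these two tasks can be sketched as follows. The function names, the 0/1/2 label numbering, and treating adjacency as an index distance of 1 in either direction are assumptions for illustration, not the authors' code.

```python
def sentence_distance_label(doc_a, idx_a, doc_b, idx_b):
    """Hypothetical labels: 0 = adjacent, 1 = same document but not adjacent, 2 = different documents."""
    if doc_a != doc_b:
        return 2
    if idx_a == idx_b:
        raise ValueError("a sentence pair needs two distinct sentences")
    return 0 if abs(idx_a - idx_b) == 1 else 1

def token_document_labels(segments, target):
    """1 for each token of segments[target] that also appears in another segment of the document."""
    other_tokens = set()
    for i, segment in enumerate(segments):
        if i != target:
            other_tokens.update(segment)
    return [1 if token in other_tokens else 0 for token in segments[target]]

doc = [["ernie", "learns", "tasks"], ["the", "ernie", "paper"], ["tasks", "results"]]
print(token_document_labels(doc, 0))  # [1, 0, 1]
print(sentence_distance_label("d1", 0, "d1", 1), sentence_distance_label("d1", 0, "d2", 0))  # 0 2
```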

Practical and Theoretical Implications

Practically, the results reported for ERNIE 2.0 make it a strong candidate for applications that need robust pre-trained language representations. Theoretically, the framework challenges the assumption that pre-training objectives must be fixed in advance, showing that new tasks can be added continually and learned jointly with earlier ones.

Future Directions

The ERNIE 2.0 framework leaves considerable room for future work. Research could incorporate more diverse pre-training tasks, possibly extending to multimodal data, and explore more sophisticated continual learning and task-scheduling methods to improve the framework's efficiency and performance.

In conclusion, ERNIE 2.0 is a significant advance in pre-trained language models. By using continual multi-task learning, it outperforms models such as BERT and XLNet on the reported benchmarks and supports an extensible set of pre-training tasks, offering promising directions for future research and practical applications in NLP.