Deep Modular Co-Attention Networks for Visual Question Answering

The paper "Deep Modular Co-Attention Networks for Visual Question Answering" presents an approach to Visual Question Answering (VQA) built on deep Modular Co-Attention Networks (MCANs). VQA requires fine-grained understanding of both the image and the question in order to infer an accurate answer. The authors emphasize the importance of co-attention mechanisms, which associate key words in the question with relevant regions in the image.

Summary of Contributions

The paper's main contribution is an architecture that cascades co-attention layers in depth, using either a stacking strategy or an encoder-decoder strategy. The MCAN consists of several Modular Co-Attention (MCA) layers. Each MCA layer is composed of self-attention (SA) and guided-attention (GA) units inspired by the Transformer model. The SA unit captures dense intra-modal interactions within a single modality (word-to-word in the question or region-to-region in the image), while the GA unit models dense inter-modal interactions between the question and the image.
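
The relationship between the SA and GA units can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' implementation: it assumes single-head scaled dot-product attention and omits the learned projections, feed-forward sublayers, residual connections, and layer normalization that a Transformer-style unit would include. The function names and the toy feature vectors are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of feature vectors."""
    d = len(keys[0])
    out = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return out


def self_attention(x):
    """SA: queries, keys, and values all come from the same modality."""
    return attention(x, x, x)


def guided_attention(x, y):
    """GA: queries come from x; keys and values come from the guiding modality y."""
    return attention(x, y, y)


# Toy inputs (assumed for illustration): 3 word vectors and 4 region vectors.
question = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
image = [[0.5, 0.5], [1.0, 0.0], [0.0, 2.0], [2.0, 1.0]]

attended_question = self_attention(question)        # word-to-word interactions
attended_image = guided_attention(image, question)  # each region attends to words
```

In this sketch the only difference between the two units is where the keys and values come from, which is what lets the same attention routine serve both the intra-modal and the inter-modal case.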

Detailed Contributions

- Modular Co-Attention Layer Design:

- The MCA layer is a composition of SA and GA units. This modular design lets intra-modal and inter-modal interactions be modeled by separate, reusable components.

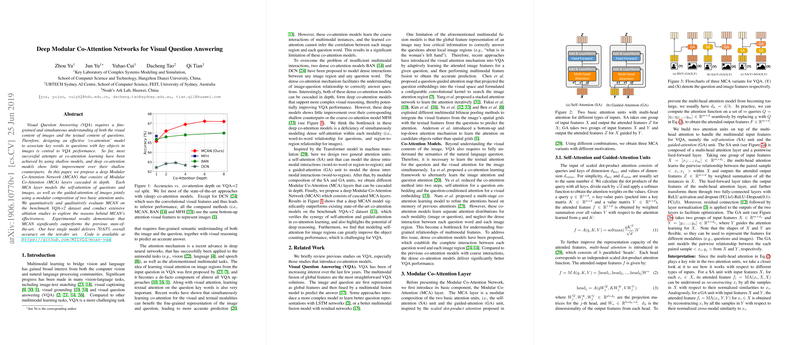

- Three variants of the MCA layer are proposed: ID(Y)-GA(X,Y), SA(Y)-GA(X,Y), and SA(Y)-SGA(X,Y), where X denotes the image region features, Y denotes the question word features, and ID is an identity mapping. Empirically, SA(Y)-SGA(X,Y) performs best, which the authors attribute to its modeling of intra-modal interactions in both the visual and textual modalities.

- Deep Co-Attention Networks:

- Two strategies, stacking and encoder-decoder, are proposed for cascading MCA layers into deeper architectures. While both show improvements with added depth, the encoder-decoder strategy consistently outperforms stacking, particularly as the number of layers increases.

- Experiments show that a deep MCAN model surpasses previous state-of-the-art models on the VQA-v2 dataset, achieving a top single-model accuracy of 70.63% on the test-dev set.

- Ablation Studies and Qualitative Analysis:

- Through an extensive suite of ablation studies, the authors show that self-attention plays a significant role within each MCA layer. In particular, modeling self-attention over image regions markedly improves performance on object-counting questions.

- Visualizations of attention maps from different layers of the MCAN illustrate the reasoning process and indicate that the MCA units focus on the critical regions and words, which supports accurate VQA predictions.
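
The two cascading strategies described in the list above differ only in which question features guide the image branch. The sketch below traces that wiring symbolically, using strings in place of tensors so the data flow is visible. It assumes the SA(Y)-SGA(X,Y) layer variant and assumes that the GA unit in each layer is guided by that layer's question output. The function names are hypothetical and this is not the authors' code.

```python
def stacking(x, y, num_layers, sa, ga):
    """Stacking: each layer's outputs (x, y) feed the next layer."""
    for _ in range(num_layers):
        y = sa(y)          # question self-attention in this layer
        x = ga(sa(x), y)   # image self-attention, then guidance from this layer's y
    return x, y


def encoder_decoder(x, y, num_layers, sa, ga):
    """Encoder-decoder: encode the question fully, then guide every image layer with it."""
    for _ in range(num_layers):   # encoder: question branch only
        y = sa(y)
    for _ in range(num_layers):   # decoder: image branch, guided by the final y
        x = ga(sa(x), y)
    return x, y


# Symbolic stand-ins for the attention units: they record the wiring as text.
def sa(f):
    return f"SA({f})"


def ga(f, g):
    return f"GA({f},{g})"


stacked_x, stacked_y = stacking("X", "Y", 2, sa, ga)
encdec_x, encdec_y = encoder_decoder("X", "Y", 2, sa, ga)

print(stacked_x)  # GA(SA(GA(SA(X),SA(Y))),SA(SA(Y)))
print(encdec_x)   # GA(SA(GA(SA(X),SA(SA(Y)))),SA(SA(Y)))
```

With two layers, the stacked trace shows the first image layer guided by `SA(Y)`, whereas in the encoder-decoder trace every image layer is guided by the fully encoded question `SA(SA(Y))`. The question output is the same in both cases.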

Implications and Future Directions

The proposed MCAN has practical implications for applications that require precise multimodal fusion and reasoning. Image recognition tasks, autonomous systems, and interactive AI applications could benefit from the capabilities demonstrated by the deep modular co-attention approach. The improved modeling of intra-modal and inter-modal interactions also suggests that the model may apply to complex vision-language tasks beyond VQA.

From a theoretical standpoint, the findings underline the value of deep, well-structured attention mechanisms. Future research might build on this work by exploring ways to stabilize the training of very deep networks, given the difficulties noted when the model depth exceeds six layers. Integrating auxiliary tasks or unsupervised pre-training strategies could also yield further performance gains and new insights into multimodal deep learning architectures.

By examining how attention and modularity interact in a deep network, the paper opens avenues for further development of VQA systems and sets a strong benchmark for future research in the domain.