An Overview of Reinforcement Learning Integrated with Natural Language Processing

The paper "A Survey of Reinforcement Learning Informed by Natural Language" examines the intersection of Reinforcement Learning (RL) and Natural Language Processing (NLP). Its central claim is that integrating natural language understanding into RL is both timely and necessary for building AI systems that can handle complex, variable real-world tasks.

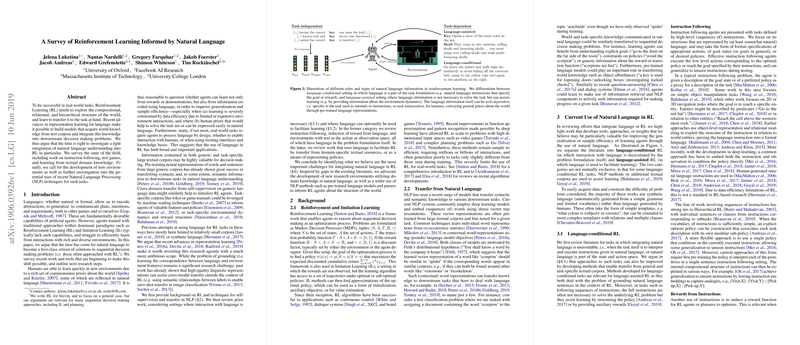

The paper divides current research into two primary paradigms: language-conditional RL and language-assisted RL. Language-conditional RL covers settings where interacting with language is an intrinsic part of the task, such as instruction following or text-based games. Here the agent must interpret and execute commands, and the language itself determines the rewards and the actions available in the environment. RL systems in this setting therefore need mechanisms that ground language in actionable policies or goals. Work in this area also has to cope with sparse rewards, using techniques such as inverse reinforcement learning and adversarial processes to induce reward functions.
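To make the idea of language determining the reward concrete, the following is a minimal sketch and not a method taken from the survey. It assumes a hypothetical one-dimensional corridor in which the instruction ("go left" or "go right") decides which end is rewarded, and it uses plain tabular Q-learning over (instruction, position) pairs. The names `GOALS`, `step`, `train`, and `greedy_action` are illustrative assumptions.

```python
import random

# Hypothetical toy task: a corridor with positions 0..4, agent starts at 2.
# The instruction decides which end of the corridor yields reward.
GOALS = {"go left": 0, "go right": 4}
ACTIONS = (-1, +1)  # step left, step right


def step(pos, action, instruction):
    """Apply an action; reward is 1.0 only when the instructed end is reached."""
    new_pos = max(0, min(4, pos + action))
    done = new_pos in (0, 4)  # either end terminates the episode
    reward = 1.0 if new_pos == GOALS[instruction] else 0.0
    return new_pos, reward, done


def train(episodes=3000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning where the state is the pair (instruction, position)."""
    rng = random.Random(seed)
    q = {}  # maps ((instruction, position), action) -> estimated value
    for _ in range(episodes):
        instruction = rng.choice(list(GOALS))
        pos, done = 2, False
        while not done:
            state = (instruction, pos)
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q.get((state, a), 0.0))
            new_pos, reward, done = step(pos, action, instruction)
            next_state = (instruction, new_pos)
            next_best = 0.0 if done else max(
                q.get((next_state, a), 0.0) for a in ACTIONS
            )
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + gamma * next_best - old)
            pos = new_pos
    return q


def greedy_action(q, instruction, pos):
    """Pick the highest-valued action for this instruction and position."""
    return max(ACTIONS, key=lambda a: q.get(((instruction, pos), a), 0.0))
```

Because the instruction is part of the state, the same position can map to different actions under different commands. In a realistic system the instruction would be an embedded sentence rather than a dictionary key, which is where the grounding problem described above arises.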

Language-assisted RL, by contrast, uses language as an auxiliary tool for improving RL performance on tasks that do not themselves require it. Examples include mining text corpora for domain knowledge and using textual annotations to speed up learning. The underlying idea is that language supplies additional context, structure, and abstractions that make policy learning and generalization more efficient, reducing the high sample complexity traditionally associated with RL algorithms.
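One simple way to picture language as an auxiliary signal is to turn a textual hint into initial action preferences before any interaction takes place. The sketch below is an illustrative assumption, not a technique described in the survey: the lexicon `HINT_LEXICON`, the action names, and the `bonus` value are all hypothetical, and real systems would use learned language representations rather than keyword matching.

```python
# Hypothetical lexicon mapping words in a manual or hint to action names.
HINT_LEXICON = {"left": "move_left", "right": "move_right", "jump": "jump"}
ACTIONS = ("move_left", "move_right", "jump")


def action_prior_from_text(text, bonus=0.5):
    """Return initial action values; actions mentioned in the text get a bonus."""
    # Crude tokenization: lowercase, drop basic punctuation, split on whitespace.
    tokens = text.lower().replace(".", " ").replace(",", " ").split()
    prior = {action: 0.0 for action in ACTIONS}
    for token in tokens:
        action = HINT_LEXICON.get(token)
        if action is not None:
            prior[action] = bonus
    return prior


# The prior could seed a value table so early exploration favors hinted actions.
prior = action_prior_from_text("To reach the exit, jump over the gap.")
```

An agent that starts from such a prior tries the hinted action first and can still override it through experience, which is one way text could lower the number of interactions needed.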

The paper stresses the importance of recent NLP advances, particularly in representation learning, which make it possible to equip RL systems with pre-trained models that encode syntactic and semantic knowledge. These techniques could address two known limitations of RL: inefficient learning from interaction and poor generalization beyond the training environments.

The authors call for complex, scalable environments enriched with real-world semantics in which language-informed RL can be tested and developed. Such environments can simulate open-world scenarios and so support the real-time adaptation and learning that practical deployment of RL in dynamic, multifaceted tasks requires.

The paper concludes by emphasizing the potential of NLP-enhanced RL and anticipates significant progress toward AI that can process intricate, unstructured natural language. This direction is relevant not only to academic research but also to applications such as autonomous systems, human-computer interaction, and robotics, where natural language offers a rich medium for communication and decision-making.

In summary, the paper surveys the current landscape, progress, and future directions of RL informed by natural language. It argues for closer integration of the two fields and for further research toward AI systems that can interact with, and learn from, the world around them naturally and efficiently.