An Assessment of Multilingual BERT's Cross-Language Capabilities

This paper provides an extensive empirical investigation of Multilingual BERT (mBERT) to understand its ability to perform zero-shot cross-lingual model transfer. The researchers systematically probe mBERT to quantify its efficacy in transferring learned tasks from one language to another, even when there is no lexical overlap. Multilingual BERT, trained on the concatenated Wikipedia corpora of 104 languages without any specific cross-lingual supervision, surprisingly exhibits a robust capacity for cross-lingual generalization. This paper not only reveals mBERT's strengths but also exposes systematic deficiencies affecting certain language pairs.

Probing Experiments and Main Findings

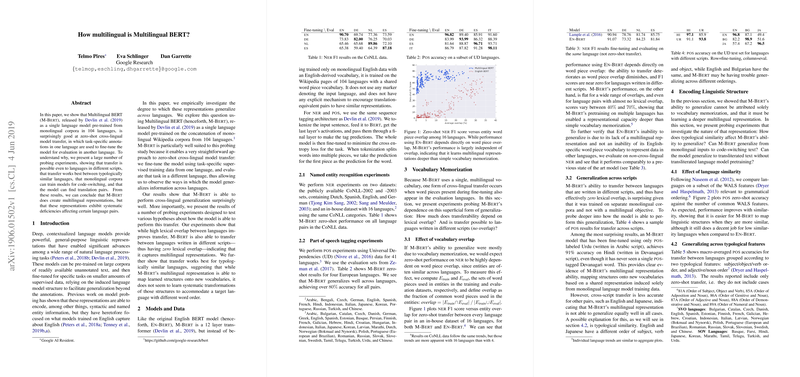

The core of the paper is a set of zero-shot probing experiments on Named Entity Recognition (NER) and part-of-speech (POS) tagging across many languages: mBERT is fine-tuned on annotated data in one language and then evaluated, without further training, on another.

NER Experiments:

Zero-shot NER performance is evaluated on the CoNLL-2002 and CoNLL-2003 datasets (covering Dutch, Spanish, English, and German) and on an in-house dataset spanning 16 languages. Transfer comes at a cost: a model fine-tuned on English reaches 90.70 F1 on English but 69.74 F1 when evaluated zero-shot on German, a drop of about 21 points. Even so, zero-shot scores across the other language pairs remain reasonably high, which indicates that mBERT captures representations shared across languages.
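The F1 scores above are entity-level scores in the CoNLL tradition: a predicted entity counts as correct only if both its type and its exact span match the gold annotation. The sketch below assumes BIO-tagged token sequences and approximates, rather than reproduces, the official `conlleval` script; `bio_spans` and `entity_f1` are hypothetical helper names.

```python
from typing import List, Set, Tuple

# (entity type, start token index, end token index exclusive)
Span = Tuple[str, int, int]


def bio_spans(tags: List[str]) -> Set[Span]:
    """Extract typed entity spans from one BIO tag sequence."""
    spans: Set[Span] = set()
    start, etype = None, None
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes a final entity
        if tag.startswith("I-") and tag[2:] == etype:
            continue  # I- tag extending the currently open entity
        if etype is not None:
            spans.add((etype, start, i))  # close the open entity
        # B- tags (and stray I- tags) open a new entity; O leaves none open
        start, etype = (i, tag[2:]) if tag != "O" else (None, None)
    return spans


def entity_f1(gold: List[List[str]], pred: List[List[str]]) -> float:
    """Micro-averaged entity-level F1 over parallel lists of tag sequences."""
    tp = n_gold = n_pred = 0
    for g, p in zip(gold, pred):
        gold_spans, pred_spans = bio_spans(g), bio_spans(p)
        tp += len(gold_spans & pred_spans)  # exact type-and-span matches
        n_gold += len(gold_spans)
        n_pred += len(pred_spans)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0
```

The function returns F1 as a fraction; multiplying by 100 gives the scale used in the figures quoted above.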

POS Tagging Experiments:

For POS tagging, the researchers use Universal Dependencies (UD) treebanks for 41 languages. mBERT achieves over 80% zero-shot accuracy for transfer among English, German, Spanish, and Italian, showing that it generalizes syntactic information well across typologically similar languages.

Vocabulary Memorization versus Deeper Representations:

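Interestingly, the paper shows that mBERT's cross-lingual generalization does not depend solely on surface-level vocabulary overlap; it also relies on deeper multilingual representations. On the 16-language NER data, mBERT's zero-shot F1 is largely independent of how many WordPieces the training and evaluation entities share, whereas the performance of an English-only BERT depends directly on that overlap. Transfer even succeeds between languages written in different scripts, which therefore have zero lexical overlap: Urdu (Arabic script) and Hindi (Devanagari) are closely related languages, and mBERT fine-tuned for POS tagging on Urdu reaches 91% accuracy on Hindi.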
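Lexical overlap can be made concrete as a set overlap between the WordPiece vocabularies of the training and evaluation data. The sketch below uses a Jaccard-style ratio, |A ∩ B| / |A ∪ B|, similar in spirit to the overlap measure the paper relates to NER F1. It assumes text has already been split into WordPieces (running the actual mBERT tokenizer is out of scope), and `wordpiece_overlap` and `scripts` are hypothetical helpers. The `scripts` helper illustrates why Urdu and Hindi share no pieces: their letters come from different Unicode blocks.

```python
import unicodedata
from typing import Iterable, Set


def wordpiece_overlap(train_pieces: Iterable[str], eval_pieces: Iterable[str]) -> float:
    """Jaccard overlap between the WordPiece sets of two datasets."""
    a, b = set(train_pieces), set(eval_pieces)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def scripts(token: str) -> Set[str]:
    """Rough script label for each letter, e.g. 'DEVANAGARI' or 'ARABIC'.

    Uses the first word of the Unicode character name as a heuristic label;
    combining vowel signs are skipped because str.isalpha() is False for them.
    """
    return {unicodedata.name(ch).split()[0] for ch in token if ch.isalpha()}


# Illustrative tokens only: "Bharat" (India) written in Hindi and in Urdu.
hindi, urdu = "भारत", "بھارت"
print(scripts(hindi), scripts(urdu))       # {'DEVANAGARI'} {'ARABIC'}
print(wordpiece_overlap([hindi], [urdu]))  # 0.0
```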

Effect of Typological and Script Similarity:

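Typological similarity, such as shared word order, improves transfer. For instance, POS accuracy is higher when transferring from one subject-verb-object (SVO) language to another than when transferring from an SVO language to a subject-object-verb (SOV) language; adjective-noun order (AN vs. NA) shows a similar effect. This suggests that while mBERT's multilingual representation maps learned structures effectively across languages, it does not learn systematic transformations of those structures to accommodate a target language with a different word order.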
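Comparisons like this one amount to grouping zero-shot scores by the word-order categories of the source and target languages and averaging within each group. The sketch below assumes a dictionary of accuracies keyed by (fine-tuning language, evaluation language) and a hypothetical `word_order` mapping from each language to its dominant order (such labels could come from a typological database); it is an illustration, not the paper's code.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def macro_average_by_order(
    accuracy: Dict[Pair, float], word_order: Dict[str, str]
) -> Dict[Pair, float]:
    """Average zero-shot accuracy per (source order, target order) group, e.g. ('SVO', 'SOV')."""
    groups: Dict[Pair, List[float]] = defaultdict(list)
    for (src, tgt), acc in accuracy.items():
        if src == tgt:
            continue  # in-language scores are not zero-shot transfer
        groups[(word_order[src], word_order[tgt])].append(acc)
    # each language pair counts equally within its group (macro average)
    return {group: mean(scores) for group, scores in groups.items()}
```

Comparing the `('SVO', 'SVO')` entry of the result with the `('SVO', 'SOV')` entry gives the kind of contrast described above.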

Code-Switching and Transliteration Challenges

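Experiments on a code-switched Hindi-English corpus, available both in transliterated form (Hindi words written in Latin script) and in script-corrected form (Hindi restored to Devanagari), show that generalizing to transliterated text remains challenging for mBERT. It underperforms dedicated models that incorporate explicit transliteration signals. On script-corrected inputs, however, mBERT fine-tuned only on monolingual Hindi and English data performs comparably to mBERT fine-tuned on the code-switched data itself, further evidence that its representation integrates information from multiple languages.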

Investigating Feature Space and Vector Translation

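The paper also probes mBERT's hidden representations directly, without any fine-tuning. For parallel sentence pairs, it mean-pools the token vectors at each layer into sentence vectors, computes the average vector pointing from source-language sentences to their translations, shifts each source sentence by that vector, and measures how often the nearest target-language sentence is the correct translation. The high nearest-neighbor accuracy in the intermediate layers suggests that these layers hold linguistic representations that are largely language-agnostic, consistent with languages differing by a roughly constant offset in that space.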
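The translation test can be sketched in a few lines of NumPy. The sketch assumes that per-token hidden states for each sentence at one layer have already been extracted from mBERT (with special tokens such as [CLS] and [SEP] removed) and that `src[i]` and `tgt[i]` form a parallel pair. Euclidean nearest-neighbor search is an assumption, and `sentence_vectors` and `translation_accuracy` are hypothetical names.

```python
from typing import List

import numpy as np


def sentence_vectors(token_states: List[np.ndarray]) -> np.ndarray:
    """Mean-pool each sentence's (n_tokens, dim) token matrix into one vector."""
    return np.stack([states.mean(axis=0) for states in token_states])


def translation_accuracy(src: np.ndarray, tgt: np.ndarray) -> float:
    """Fraction of source sentences whose translated vector is nearest to its own pair.

    src, tgt: (n_pairs, dim) sentence vectors at one layer; row i of each is a parallel pair.
    """
    offset = (tgt - src).mean(axis=0)  # average source-to-target difference vector
    translated = src + offset          # shift every source sentence by that vector
    # Euclidean distance from each translated sentence to every target sentence
    dists = np.linalg.norm(translated[:, None, :] - tgt[None, :, :], axis=-1)
    nearest = dists.argmin(axis=1)
    return float((nearest == np.arange(len(src))).mean())


# Synthetic check: targets are sources plus one shared offset plus small noise,
# so translating by the mean offset should recover nearly every pair.
rng = np.random.default_rng(0)
src = rng.normal(size=(200, 32))
tgt = src + rng.normal(size=32) * 3 + rng.normal(scale=0.05, size=(200, 32))
print(translation_accuracy(src, tgt))
```

With real mBERT activations the same function would be run once per layer, which is how a layer-by-layer accuracy curve can be obtained.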

Implications and Future Directions

The implications of this research are two-fold: practically, mBERT can be used effectively for many multilingual NLP tasks without explicit cross-lingual supervision; theoretically, the work provides a foundation for further study of the multilingual representations learned by deep models. It opens avenues for improving multilingual and low-resource language processing, for developing more linguistically informed pretraining objectives, and for handling code-switching and transliteration more effectively.

Future work may focus on improving transfer between typologically dissimilar languages, incorporating more explicit cross-lingual signals during pretraining, and addressing mBERT's weaknesses on specific language pairs and on transliterated text. Such work would contribute to more robust and versatile multilingual models.