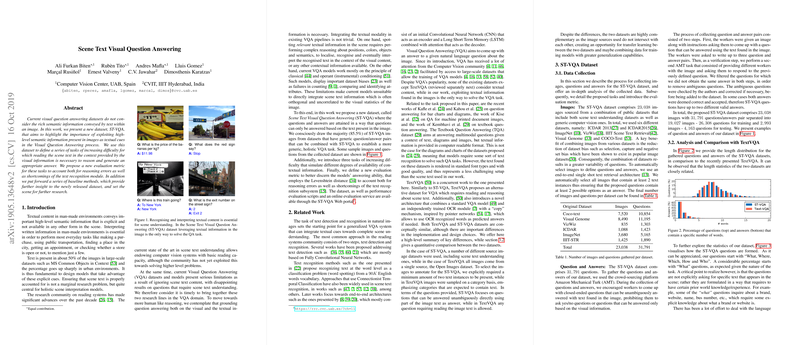

Scene Text Visual Question Answering: A New Dataset for Exploiting Textual Information in Images

The paper "Scene Text Visual Question Answering" introduces the ST-VQA dataset, which extends Visual Question Answering (VQA) to questions about the text that appears in images. The authors argue that scene text carries high-level semantic information that existing VQA models and datasets largely overlook. By combining textual cues in the scene with visual content, the paper aims to advance the understanding and reasoning capabilities required for VQA tasks.

The ST-VQA dataset is distinguished by questions that can only be answered by reading the text present in the image, so a model must combine visual understanding with text recognition. Existing VQA datasets largely ignore scene text, which limits their ability to address questions that require reading. The paper addresses this gap with a dataset tailored to scenarios where textual information is pivotal.

Core Contributions and Methodological Advances

- Introduction of the ST-VQA Dataset: ST-VQA comprises 23,038 images and 31,791 question-answer pairs, with images sourced from various public datasets and covering text-rich urban and natural scenes. The dataset was curated through crowd-sourcing so that every question requires interpreting scene text, and the questions span a broad range of complexity and topics.

- Task Definition and Evaluation Metric: The research outlines three tasks of increasing difficulty (strongly contextualized, weakly contextualized, and open vocabulary), which are defined by the availability and nature of the lexical prior information provided. This task classification supports models that either leverage extensive contextual information or operate in a more open-ended setting. A new evaluation metric, the Average Normalized Levenshtein Similarity (ANLS), is introduced to account for both text recognition errors and reasoning errors.

- Baseline Methods and Comparative Analysis: The paper evaluates several baseline approaches, ranging from random selection to sophisticated CNN-LSTM VQA models integrated with scene text features. Interestingly, scene text recognition baselines often outperform VQA models that do not incorporate textual features, underscoring the need for new architectures capable of synthesizing visual and textual input.
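
The evaluation metric mentioned in the list above scores answers by string similarity rather than exact match. A minimal sketch of one such thresholded normalized-Levenshtein score follows; the 0.5 threshold, the lowercasing, and all function names are assumptions made for illustration and are not taken from this summary.

```python
from typing import List, Sequence


def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or match)
            ))
        previous = current
    return previous[-1]


def normalized_similarity(prediction: str, truth: str,
                          threshold: float = 0.5) -> float:
    """Return 1 - normalized distance, or 0 if the strings differ too much.

    Assumption: comparison is case-insensitive and ignores outer whitespace.
    """
    pred = prediction.strip().lower()
    gt = truth.strip().lower()
    longest = max(len(pred), len(gt))
    if longest == 0:
        return 1.0  # two empty strings are treated as identical
    distance = levenshtein(pred, gt) / longest
    return 1.0 - distance if distance < threshold else 0.0


def average_similarity(predictions: Sequence[str],
                       ground_truths: Sequence[List[str]],
                       threshold: float = 0.5) -> float:
    """Average, over questions, of the best score against any accepted answer."""
    if len(predictions) != len(ground_truths):
        raise ValueError("one list of accepted answers is needed per prediction")
    if not predictions:
        return 0.0
    scores = [
        max(normalized_similarity(pred, gt, threshold) for gt in answers)
        for pred, answers in zip(predictions, ground_truths)
    ]
    return sum(scores) / len(scores)
```

Under these assumptions, a prediction of "coca cola" against the ground truth "coca-cola" scores 8/9, because one character out of nine differs, while "stop" against "exit" scores 0. A near-miss from the text recognizer is therefore penalized lightly, and an answer that points at the wrong text receives no credit.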

Numerical Results and Key Findings

The results illustrate the limitations of current VQA approaches on text-dependent tasks. Standard VQA models, which typically treat the problem as classification over a fixed answer vocabulary, performed poorly on the ST-VQA dataset, reaching only 7.41% to 10.46% accuracy in some instances. Because answers drawn from scene text often fall outside any fixed vocabulary, these results point to the need for generative methods or other architectures that can read text in the image alongside visual analysis.
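
To see why classification over a fixed answer vocabulary struggles here, one can measure how many test answers such a classifier could produce at all. The sketch below computes that ceiling; the helper names and the toy answers are hypothetical and are not ST-VQA data or results.

```python
from collections import Counter
from typing import Iterable, Set


def build_answer_vocabulary(train_answers: Iterable[str], size: int) -> Set[str]:
    """Keep the `size` most frequent training answers as the class set."""
    counts = Counter(answer.strip().lower() for answer in train_answers)
    return {answer for answer, _ in counts.most_common(size)}


def coverage_upper_bound(vocabulary: Set[str], test_answers: Iterable[str]) -> float:
    """Fraction of test answers that exist in the vocabulary.

    A classifier restricted to `vocabulary` cannot exceed this accuracy,
    no matter how well it is trained.
    """
    answers = [answer.strip().lower() for answer in test_answers]
    if not answers:
        return 0.0
    hits = sum(1 for answer in answers if answer in vocabulary)
    return hits / len(answers)


if __name__ == "__main__":
    # Toy, made-up answers standing in for scene-text strings.
    train = ["stop", "stop", "exit", "coca-cola", "exit", "stop"]
    test = ["stop", "exit", "pepsi", "route 66"]
    vocab = build_answer_vocabulary(train, size=2)
    print(f"ceiling accuracy: {coverage_upper_bound(vocab, test):.2f}")
```

In this toy case, half of the test answers never appear in the vocabulary, so a classifier cannot score above 0.5 regardless of training. Scene text such as brand names, street names, and numbers makes this long tail especially pronounced.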

Implications and Future Directions

The introduction of ST-VQA marks a significant stride towards comprehensive scene understanding models. By emphasizing text as a primary source of semantic meaning, the dataset is set to influence subsequent research in several ways:

- Model Development: Future research can focus on the development of hybrid models that simultaneously process visual and textual data, potentially drawing from advancements in multimodal learning or NLP-based generative strategies.

- Metric Evaluation: The proposed metric offers a more nuanced view of model performance than exact-match accuracy, suggesting further exploration of evaluation methods that account for text recognition errors in VQA contexts.

- Transfer and Cross-Domain Learning: ST-VQA opens avenues for exploring transfer learning between datasets whose text differs in appearance and content, fostering a deeper understanding of task generalization in AI.

Overall, the paper presents a robust framework for incorporating textual content into VQA, setting the stage for advancements that could redefine current methodologies in AI scene understanding and question answering systems.