Massively Multilingual Neural Machine Translation

The paper "Massively Multilingual Neural Machine Translation" investigates how neural machine translation (NMT) models can be extended to handle a large number of languages simultaneously. The authors train single models that translate up to 102 languages to and from English. The work examines both the benefits and the challenges of massively multilingual NMT, particularly its effect on translation quality and the limits of model capacity.
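A common way to let one model serve many translation directions, introduced by Johnson et al. (2017) and built on by this line of work, is to prepend a token naming the desired target language to the source sentence. The sketch below is illustrative only: the `<2xx>` tag format, whitespace-separated input, and the function name `add_target_token` are assumptions, not the paper's exact preprocessing.

```python
def add_target_token(source: str, target_lang: str) -> str:
    """Prepend a target-language tag to a source sentence.

    The '<2xx>' tag format is an assumption for illustration; the
    model learns to read the tag and decode into that language.
    """
    return f"<2{target_lang}> {source}"


# One input format covers every direction; only the tag changes.
examples = [
    ("Hello world", "de"),  # English -> German
    ("Hello world", "fr"),  # English -> French
    ("Hallo Welt", "en"),   # German -> English
]
for src, tgt in examples:
    print(add_target_token(src, tgt))
```

Because the tag is just another input token, adding a language requires no architectural change, which is what makes a single shared model for many languages practical.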

Methodology and Experimental Setup

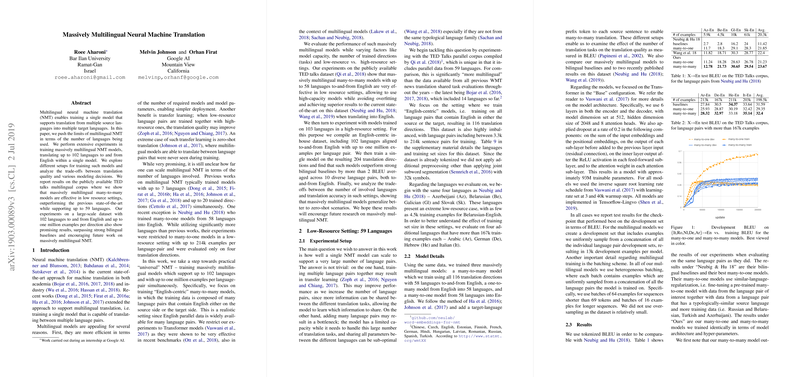

The authors evaluate their approach through extensive experiments on two datasets: the TED Talks multilingual corpus and an in-house dataset. The TED dataset covers 59 languages with widely varying amounts of training data, while the in-house dataset pairs 102 languages with English (103 languages in total), with up to one million examples per language pair. Together, these setups test how well multilingual NMT models scale and generalize in both low-resource and high-resource conditions.

Results and Analysis

The results indicate that massively multilingual models outperform bilingual baselines under specific conditions. In the low-resource TED Talks setting, many-to-many models (trained to translate both to and from English) outperform many-to-one models (trained only to translate into English) on translation into English. The authors attribute this to a regularization effect: the additional translation directions make the model less likely to overfit the limited training data, which helps it generalize.

When scaling up to 103 languages with the in-house dataset, the multilingual models still improve over bilingual baselines despite the added complexity. In this high-resource setting, many-to-one models generally outperform many-to-many models, suggesting that with enough data per language pair, overfitting is less of a concern and the regularization benefit of many-to-many training fades. However, performance varies considerably across language pairs, pointing to possible interference between languages during training.
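The difference between the two configurations can be made concrete by listing the translation directions each one trains on. This is a minimal sketch assuming purely English-centric parallel data, as in both setups above; the function names and placeholder language codes are hypothetical. Many-to-one uses only X→English, while many-to-many also uses each pair in reverse (English→X), so the decoder must produce every language rather than English alone.

```python
def many_to_one_directions(langs: list[str], pivot: str = "en") -> list[tuple[str, str]]:
    """Every non-pivot language translates into the pivot (English) only."""
    return [(src, pivot) for src in langs if src != pivot]


def many_to_many_directions(langs: list[str], pivot: str = "en") -> list[tuple[str, str]]:
    """English-centric data used in both directions: X->en and en->X."""
    others = [lang for lang in langs if lang != pivot]
    return [(x, pivot) for x in others] + [(pivot, x) for x in others]


small = ["en", "de", "fr", "he"]
print(many_to_one_directions(small))
print(many_to_many_directions(small))

# Placeholder codes standing in for 102 non-English languages plus English.
in_house_like = ["en"] + [f"lang{i}" for i in range(102)]
print(len(many_to_one_directions(in_house_like)), len(many_to_many_directions(in_house_like)))  # 102 204
```

Doubling the number of directions with the same model size is one way to see why many-to-many training can regularize in the low-resource case yet compete for capacity in the high-resource case.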

Key Observations

- Model Capacity vs. Translation Quality: The research highlights a trade-off between the number of languages a model handles and its translation accuracy. While adding more languages can improve generalization in zero-shot translation, it also strains the capacity of a fixed-size model and can degrade supervised performance.

- Zero-Shot Translation: Zero-shot performance improves as more languages are added to training, notably when translating between similar languages. This suggests that training on more languages leads the model to learn more general representations, which benefit language pairs never seen during training.

- Practical Implications: These results suggest that a single universal NMT system could support many languages without separate per-pair models, simplifying deployment and maintenance in multilingual applications.
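Counting directions shows why zero-shot translation matters at this scale. Assuming purely English-centric training data, as in the setups summarized above, every direction between two non-English languages is zero-shot: the model never sees it in training and must produce it from the target-language request alone. The sketch below enumerates those directions; the function name and language codes are placeholders.

```python
from itertools import permutations


def zero_shot_directions(langs: list[str], pivot: str = "en") -> list[tuple[str, str]]:
    """All ordered pairs of distinct non-pivot languages.

    Under English-centric training none of these directions
    appears in the training data.
    """
    others = [lang for lang in langs if lang != pivot]
    return list(permutations(others, 2))


small = ["en", "de", "fr", "it"]
print(zero_shot_directions(small))

# With n non-English languages, n * (n - 1) directions are zero-shot,
# while English-centric training supervises only 2 * n of them.
n = 102
print(n * (n - 1), 2 * n)  # 10302 204
```

The gap grows quadratically with the number of languages, so most of the directions a massively multilingual model could serve are zero-shot.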

Future Directions

The research opens avenues for further exploration in several areas:

- Model Architecture and Hyperparameter Tuning: Because the current models run into capacity limits, future work may benefit from more efficient architectures or adaptive methods that allocate capacity dynamically depending on the language pair.

- Semi-Supervised Learning: Semi-supervised approaches could use unlabeled data to improve multilingual NMT models, particularly for low-resource languages.

- Understanding Interference Mechanisms: Investigating the reasons behind performance fluctuations and interference during multilingual training could lead to more stable and reliable models.

In conclusion, this paper makes a significant contribution to NMT by demonstrating that a single model can feasibly handle many languages and can improve translation quality. Its findings are a foundational step toward more inclusive, globally applicable NMT systems.