Dynamic Fusion with Intra- and Inter-modality Attention Flow for Visual Question Answering

The paper "Dynamic Fusion with Intra- and Inter-modality Attention Flow for Visual Question Answering" addresses the problem of multi-modal fusion in Visual Question Answering (VQA). It introduces a framework that strengthens the interaction between visual and textual features by combining intra- and inter-modality attention mechanisms.

Summary of the Approach

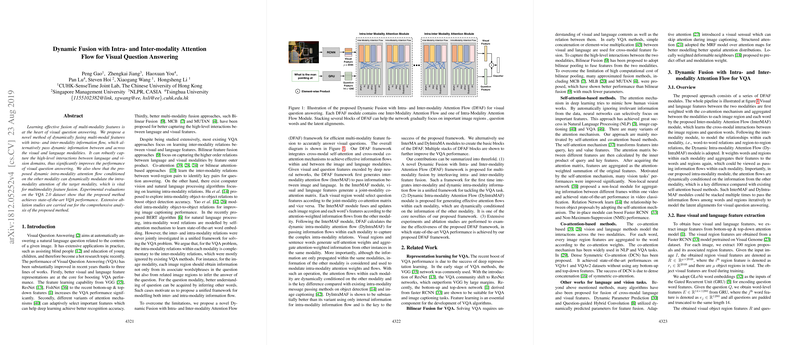

The crux of the proposed framework, named Dynamic Fusion with Intra- and Inter-modality Attention Flow (DFAF), lies in its ability to dynamically modulate the flow of information both within the same modality and across different modalities. It employs two core components: the Inter-modality Attention Flow (InterMAF) and the Dynamic Intra-modality Attention Flow (DyIntraMAF).

- Inter-modality Attention Flow (InterMAF): This module facilitates the identification and transmission of critical information between visual features and textual elements. It captures inter-modal relationships using co-attention mechanisms to dynamically fuse and update features from image regions and corresponding question words.

- Dynamic Intra-modality Attention Flow (DyIntraMAF): Unlike static intra-modality attention, which models relations within a single modality in isolation, DyIntraMAF computes intra-modality relations under a dynamic, cross-modal conditioning context. The attention weights are modulated by information derived from the other modality, so that the relations extracted within one modality depend on the content of the other.
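The two modules described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the summary gives no equations, so the function names, the omission of learned query/key/value projections, and the specific gating form (a sigmoid gate computed from the average-pooled other modality, used to rescale queries and keys) are assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Scaled dot-product attention: each row of q attends over the rows of k/v.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def inter_modality_flow(regions, words):
    # Hypothetical InterMAF-style step: regions gather information from words,
    # and words gather information from regions (learned projections omitted).
    regions_from_words = attention(regions, words, words)
    words_from_regions = attention(words, regions, regions)
    return regions_from_words, words_from_regions

def dynamic_intra_flow(x, other, w_gate):
    # Hypothetical DyIntraMAF-style step: self-attention within x, where
    # queries and keys are rescaled by a gate computed from the other modality.
    context = other.mean(axis=0)                       # average-pool the other modality
    gate = 1.0 / (1.0 + np.exp(-(context @ w_gate)))   # sigmoid gate, shape (d,)
    q = (1.0 + gate) * x
    k = (1.0 + gate) * x
    return attention(q, k, x)

rng = np.random.default_rng(0)
d = 8
regions = rng.normal(size=(5, d))   # 5 image regions (assumed toy sizes)
words = rng.normal(size=(3, d))     # 3 question words
w_gate = rng.normal(size=(d, d))    # assumed gate projection

r_inter, w_inter = inter_modality_flow(regions, words)
r_intra = dynamic_intra_flow(regions, words, w_gate)
print(r_inter.shape, w_inter.shape, r_intra.shape)
```

In this sketch the gate depends only on the other modality, so changing the question changes how image regions attend to each other even though the region features themselves are unchanged.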

The DFAF framework iteratively applies these modules in stacked blocks to refine feature representations and improve the accuracy of the VQA task. The architecture capitalizes on multi-head attention, residual connections, and the integration of dynamic gating strategies to optimize the flow of information and facilitate deep interactions between vision and language components.
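As a rough illustration of the stacking described above, the following sketch combines multi-head attention with residual connections and applies the resulting block several times. It is a simplified stand-in under stated assumptions: `fusion_block` and `stacked_fusion` are hypothetical names, learned projections and the dynamic gate are omitted, and the order of updates inside a block is assumed rather than taken from the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(q_in, kv_in, num_heads):
    # Split the feature dimension into heads, attend per head, then concatenate.
    n_q, d = q_in.shape
    assert d % num_heads == 0, "feature size must be divisible by num_heads"
    head_dim = d // num_heads
    q = q_in.reshape(n_q, num_heads, head_dim).transpose(1, 0, 2)
    kv = kv_in.reshape(kv_in.shape[0], num_heads, head_dim).transpose(1, 0, 2)
    scores = q @ kv.transpose(0, 2, 1) / np.sqrt(head_dim)  # (heads, n_q, n_kv)
    out = softmax(scores, axis=-1) @ kv                     # (heads, n_q, head_dim)
    return out.transpose(1, 0, 2).reshape(n_q, d)

def fusion_block(regions, words, num_heads):
    # Inter-modality update with residual connections (assumed ordering).
    new_regions = regions + multi_head_attention(regions, words, num_heads)
    new_words = words + multi_head_attention(words, regions, num_heads)
    # Intra-modality update with residual connections (gating omitted here).
    new_regions = new_regions + multi_head_attention(new_regions, new_regions, num_heads)
    new_words = new_words + multi_head_attention(new_words, new_words, num_heads)
    return new_regions, new_words

def stacked_fusion(regions, words, num_blocks=2, num_heads=4):
    # Apply the block repeatedly so each modality is refined several times.
    for _ in range(num_blocks):
        regions, words = fusion_block(regions, words, num_heads)
    return regions, words

rng = np.random.default_rng(0)
regions = rng.normal(size=(5, 8))   # toy sizes, assumed for illustration
words = rng.normal(size=(3, 8))
fused_regions, fused_words = stacked_fusion(regions, words)
print(fused_regions.shape, fused_words.shape)
```

Because every update is residual, each block adds attended information to the existing features rather than replacing them, which keeps the shapes fixed and lets blocks be stacked freely.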

Results and Implications

Experimental results on the VQA 2.0 dataset show that DFAF achieves state-of-the-art performance, surpassing previous methods. Ablation studies confirm that dynamically modulated intra-modality attention is more effective than static intra-modality attention. In particular, DFAF outperforms BAN with GloVe embeddings on the test-dev split, which supports the case for dynamic attention flows.

The implications of this paper extend to several domains ripe for VQA applications such as assistive technologies for the visually impaired and educational tools. By introducing a method that effectively captures nuanced interactions between visual cues and textual queries, this work enhances the interpretative capabilities of AI models in multi-modal contexts.

Future Directions

The DFAF framework sets a precedent for feature fusion in multi-modal AI tasks and suggests several avenues for future work. One is to integrate contextual language models such as BERT into the attention mechanisms to improve performance. Another is to extend DFAF to other multi-modal tasks, such as video understanding or interactive dialogue systems, which could yield valuable insights and drive broader technological advances.

In conclusion, the paper contributes a robust framework for dynamic feature fusion in VQA, advancing the discussion on the integration of cross-modal interactions and setting a foundational structure for future investigations in the field.