An Expert Perspective on NLP Approaches for Fake News Detection

The paper "A Survey on Natural Language Processing for Fake News Detection" presents a systematic examination of automated approaches to discerning falsehoods in news content through NLP techniques. Authored by Ray Oshikawa, Jing Qian, and William Yang Wang, the survey provides an invaluable resource for researchers interested in the evolving domain of fake news detection—a field gaining significance as digital content continues to proliferate and influence public opinion on global platforms.

Challenges and Task Definitions

Automated fake news detection poses formidable challenges due to the nuanced nature of news, which often blends veracity with bias, satire, and outright falsehoods. This paper categorizes fake news detection predominantly as either a classification or regression task, directing focus on textual content ranging from succinct claims to comprehensive articles. Classification primarily dominates, with researchers employing methods from binary to multi-class categorization to address the complexity of partial truths. However, the regression model, which offers a scalar measure of truthfulness, is less frequently explored despite its potential applicability.

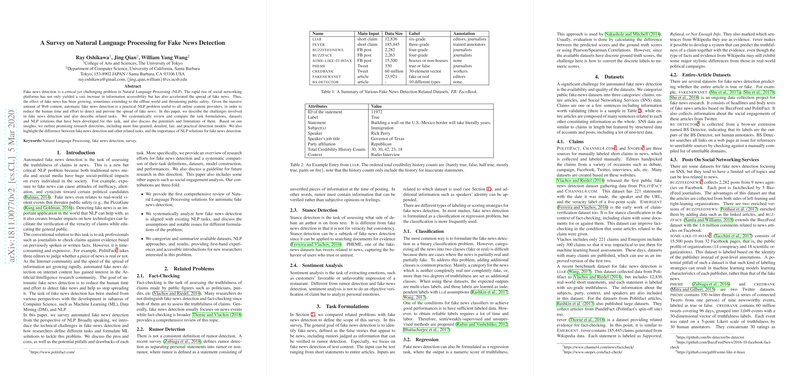

Datasets and Methodologies

The paper systematically reviews various datasets pivotal for research in this domain, such as LIAR, FEVER, and FakeNewsNet. The authors emphasize the criticality of comprehensive datasets that offer labeled instances ranging from claims to full articles. These datasets underpin the development of machine learning models that leverage different NLP techniques, including RNNs, CNNs, and attention mechanisms. Notably, attention models and LSTM architectures feature prominently in recent advancements, demonstrating superior performance in capturing context-dependent features crucial for determining veracity.

Empirical Results and Observations

Empirical investigations utilizing datasets such as LIAR and FEVER highlight the efficacy of neural network models, particularly those incorporating attention mechanisms, in predicting news veracity. Results delineated in the paper illustrate that models augmented with additional metadata—such as speaker credibility—further enhance prediction accuracies. However, the reliance on metadata also raises concerns about bias, underscoring the need for balanced utilization of both content-based and context-specific informations.

Recommendations and Theoretical Implications

The authors call for more sophisticated datasets that integrate multi-dimensional truth indices and capture a diversity of news sources and formats, emphasizing the dual necessity for rigor in both data collection and model development. They suggest the expansion of labels beyond binary classifications to include more nuanced indices of truthfulness, which could foster models capable of delivering more granular insights into news veracity.

Future Directions

Looking forward, the survey encourages research into hybrid models that adeptly merge content-based and metadata-driven methodologies. This exploration has practical implications for enhancing real-world applicability and robustness against attempts to obfuscate truth. Furthermore, while the research primarily focuses on textual data, the potential integration of multi-modal data sources could present novel avenues for enriched fake news detection systems.

In summary, through a detailed exploration of methods, datasets, and experimental results, this paper lays a foundational understanding for further exploration of NLP techniques in fake news detection. It serves as both a critical resource and a call to action for researchers to craft finely-tuned, ethically-guided models that adaptively respond to the challenges posed by the dynamic landscape of digital content.