Meta-Learning for Low-Resource Neural Machine Translation: A Summary

The paper applies meta-learning to low-resource neural machine translation (NMT). NMT systems perform well on high-resource language pairs but often underperform when parallel data is scarce. The proposed method extends the model-agnostic meta-learning algorithm (MAML) to NMT: it learns from multilingual high-resource language pairs how to adapt quickly to low-resource ones.

Methodology

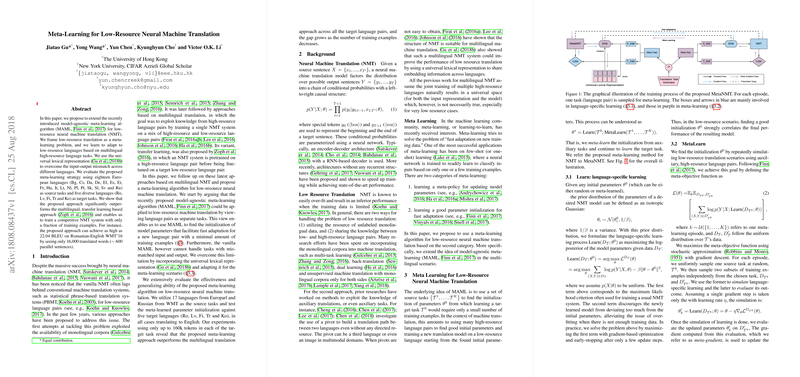

The approach treats each language pair as a separate task, so that MAML can optimize the initial parameters of the NMT model for rapid adaptation to new, low-resource language pairs. A critical component is the universal lexical representation (ULR), which addresses the input-output mismatch across languages: each language has its own vocabulary, so embeddings learned on the source tasks cannot be reused directly for a new language. The ULR uses a key-value memory network in which every language keeps its own vocabulary, built dynamically, while all languages share a common embedding matrix.
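The key-value lookup behind a shared embedding matrix can be sketched as follows. This is a minimal illustration, assuming softmax attention over dot-product similarity with a temperature. The function and parameter names (`ulr_embed`, `transform`, `temperature`) and all array sizes are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def ulr_embed(query, keys, values, transform, temperature=0.05):
    """Embed one token as an attention-weighted mix of shared embeddings.

    query:     (d_q,)      language-specific embedding of the token
    keys:      (M, d_k)    keys of M universal tokens
    values:    (M, d)      embedding matrix shared by all languages
    transform: (d_k, d_q)  maps the query into the key space (assumed)
    """
    scores = keys @ transform @ query / temperature  # (M,) similarities
    scores = scores - scores.max()                   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum()                # softmax over universal tokens
    return weights @ values, weights

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    keys = rng.normal(size=(6, 4))        # 6 universal tokens, key size 4
    values = rng.normal(size=(6, 8))      # shared embeddings of size 8
    transform = rng.normal(size=(4, 3))   # query size 3 for this toy language
    query = rng.normal(size=3)            # one token of a new language
    embedding, weights = ulr_embed(query, keys, values, transform)
    print(embedding.shape, weights.round(3))
```

In this sketch only `query` (and possibly `transform`) depends on the language. `keys` and `values` are shared, so a new language can reuse embeddings learned on the source tasks.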

During meta-learning, the model is trained on multiple high-resource language pairs and learns a parameter initialization from which fine-tuning on a low-resource task is both effective and efficient. In contrast, traditional multilingual and transfer learning approaches do not explicitly optimize for adaptation to low-resource tasks.
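The idea of learning an initialization for fast fine-tuning can be illustrated with a toy example. This is a sketch under stated assumptions, not the paper's implementation: small linear-regression problems stand in for language pairs, the meta-update uses a first-order approximation of MAML (the second-order term is dropped), and every name and hyperparameter here is hypothetical.

```python
import numpy as np

def loss_and_grad(theta, X, y):
    """Mean squared error of a linear model and its gradient."""
    residual = X @ theta - y
    return float(np.mean(residual ** 2)), 2.0 * X.T @ residual / len(y)

def sample_batch(rng, true_theta, n=20):
    """Draw a noise-free batch from the task defined by true_theta."""
    X = rng.normal(size=(n, true_theta.size))
    return X, X @ true_theta

def adapt(theta, X, y, steps, lr):
    """Fine-tune theta on one batch with plain gradient descent."""
    for _ in range(steps):
        _, grad = loss_and_grad(theta, X, y)
        theta = theta - lr * grad
    return theta

def fomaml_step(theta, source_tasks, rng, inner_lr=0.05, meta_lr=0.05):
    """One first-order meta-update over all source tasks."""
    meta_grad = np.zeros_like(theta)
    for true_theta in source_tasks:
        # Inner step: simulate learning the task from a small batch.
        X_tr, y_tr = sample_batch(rng, true_theta)
        adapted = adapt(theta, X_tr, y_tr, steps=1, lr=inner_lr)
        # Outer gradient: score the adapted parameters on a fresh batch.
        X_ev, y_ev = sample_batch(rng, true_theta)
        _, grad = loss_and_grad(adapted, X_ev, y_ev)
        meta_grad += grad  # first-order: ignore d(adapted)/d(theta)
    return theta - meta_lr * meta_grad / len(source_tasks)

def run_demo(seed=0, dim=5, meta_steps=200):
    rng = np.random.default_rng(seed)
    center = np.full(dim, 3.0)
    # Related "high-resource" source tasks and one unseen target task.
    source_tasks = [center + 0.1 * rng.normal(size=dim) for _ in range(4)]
    target_task = center + 0.1 * rng.normal(size=dim)

    theta = np.zeros(dim)
    for _ in range(meta_steps):
        theta = fomaml_step(theta, source_tasks, rng)

    # "Low-resource" adaptation: three steps on one small batch.
    X_few, y_few = sample_batch(rng, target_task, n=20)
    X_test, y_test = sample_batch(rng, target_task, n=200)
    meta_loss, _ = loss_and_grad(adapt(theta, X_few, y_few, 3, 0.05), X_test, y_test)
    scratch_loss, _ = loss_and_grad(adapt(np.zeros(dim), X_few, y_few, 3, 0.05), X_test, y_test)
    return meta_loss, scratch_loss

if __name__ == "__main__":
    meta_loss, scratch_loss = run_demo()
    print(f"meta-learned init: {meta_loss:.4f}  zero init: {scratch_loss:.4f}")
```

The comparison at the end mirrors the evaluation setup in miniature: both initializations get the same few target examples and the same number of fine-tuning steps, so any gap in test loss comes from the starting point alone.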

Evaluation and Results

The evaluation used 18 European languages as high-resource source tasks and five diverse languages as low-resource target tasks: Romanian (Ro), Latvian (Lv), Finnish (Fi), Turkish (Tr), and Korean (Ko). The meta-learning approach significantly outperformed the multilingual, transfer-learning baselines. Notably, it achieved a BLEU score of 22.04 on the Ro-En language pair of the WMT'16 dataset after seeing only about 600 parallel sentences (roughly 16,000 translated words). The baselines, by contrast, struggled with so little data.

Implications and Future Directions

The work has both theoretical and practical implications. It provides a framework for rapid adaptation of NMT systems that substantially reduces the amount of parallel data needed to train an effective model. Because MAML is model-agnostic, the approach is not tied to the Transformer model used in the paper and can be applied to other NMT architectures.

The proposed meta-learning framework opens avenues for incorporating other data sources, such as monolingual corpora. It also motivates further study of task similarity and of how to select source tasks for the best meta-learning outcome. Investigating different model architectures and fine-tuning strategies could yield further gains in low-resource NMT.

In conclusion, the paper takes a significant step toward overcoming the limitations of NMT in low-resource settings by combining meta-learning with a universal lexical representation. Approaches of this kind will be important for building translation technology that is more adaptable and covers more languages.