Universal Neural Machine Translation for Extremely Low Resource Languages

The paper "Universal Neural Machine Translation for Extremely Low Resource Languages" addresses a central challenge for Neural Machine Translation (NMT) systems: the lack of sufficient parallel data for many language pairs. The authors propose a universal NMT approach that uses transfer learning to improve translation for low-resource languages. It shares lexical and sentence-level representations across multiple source languages, all of which translate into a single target language.

The approach rests on two components: Universal Lexical Representation (ULR) and Mixture of Language Experts (MoLE). ULR enables multi-lingual word-level sharing by mapping words from different languages into a universal token space. It relies on monolingual embeddings aligned to a shared semantic space, so that semantically similar words from different languages receive similar representations. MoLE handles sentence-level sharing: it uses expert networks for each language to share sentence representations, which lets low-resource languages benefit from high-resource data.
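The two components can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the plain dot-product similarity, the temperature value, and the toy vectors are all assumptions chosen for illustration. `universal_embedding` represents a word as a similarity-weighted average over a shared table of universal token embeddings, and `mixture_of_experts` combines the outputs of several expert functions using softmax gate weights.

```python
import math


def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of floats."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def universal_embedding(query, keys, values, temperature=0.05):
    """ULR-style lookup (illustrative assumption, not the paper's exact form).

    query:  aligned monolingual embedding of a source word
    keys:   aligned embeddings of the universal tokens
    values: trainable embeddings of the same universal tokens
    Returns a weighted average of `values`, weighted by query-key similarity.
    """
    weights = softmax([dot(query, k) for k in keys], temperature)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def mixture_of_experts(hidden, experts, gate_vectors):
    """MoLE-style mixing (illustrative assumption).

    hidden:       a sentence-level hidden state
    experts:      callables mapping a hidden state to a same-sized vector
    gate_vectors: one scoring vector per expert; softmax of the scores
                  gives the weight of each expert
    """
    gates = softmax([dot(hidden, g) for g in gate_vectors])
    outputs = [expert(hidden) for expert in experts]
    return [sum(g * out[i] for g, out in zip(gates, outputs))
            for i in range(len(hidden))]


# Toy data: two universal tokens with 2-d keys and 3-d values.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
word_vector = universal_embedding([0.9, 0.1], keys, values)

# Two hypothetical experts, weighted equally for this hidden state.
experts = [lambda h: [2.0 * x for x in h], lambda h: [-x for x in h]]
mixed = mixture_of_experts([1.0, 1.0], experts, [[1.0, 0.0], [0.0, 1.0]])
```

With the low temperature used here, the query is mapped almost entirely to the first universal token, which shows how a word from a low-resource language can reuse a representation learned from other languages. The gate in the second function plays the analogous role at the sentence level.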

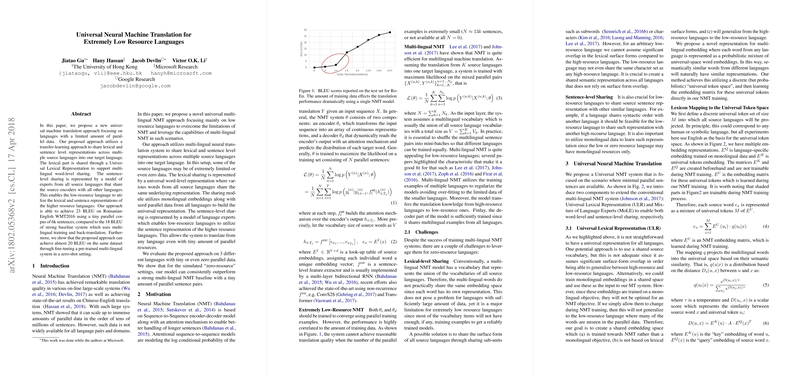

The authors evaluate their method on Romanian-English, Korean-English, and Latvian-English translation in extremely low-resource settings. Notably, the proposed model achieves 23 BLEU on Romanian-English using a parallel corpus of only 6,000 sentences, compared with 18 BLEU for a multi-lingual baseline. The approach is also effective in a zero-shot setting, where fine-tuning a pre-trained multi-lingual system reaches nearly 20 BLEU.

The paper has both practical and theoretical implications. Practically, it extends NMT to languages with minimal parallel data, which could broaden the global reach of language technologies. Theoretically, it provides a framework for further study of semantic alignment and cross-lingual representation learning in machine translation.

Moving forward, the research could stimulate work on unsupervised and minimally supervised machine translation, including zero-resource translation, unsupervised dictionary alignment, and meta-learning. The strategies demonstrated could be combined with advances in unsupervised learning to build more robust systems that exploit monolingual data for translation. Ultimately, the work is a foundational step toward more inclusive language technologies that give broader computational support to the world's linguistic diversity.