A Survey of Model Compression and Acceleration for Deep Neural Networks

Yu Cheng, Duo Wang, Pan Zhou, and Tao Zhang's paper, "A Survey of Model Compression and Acceleration for Deep Neural Networks," provides a comprehensive overview of techniques for compressing and accelerating deep neural network (DNN) models. Given the high computational cost and memory requirements of modern DNN architectures, the survey is particularly timely and relevant for the research community.

Introduction

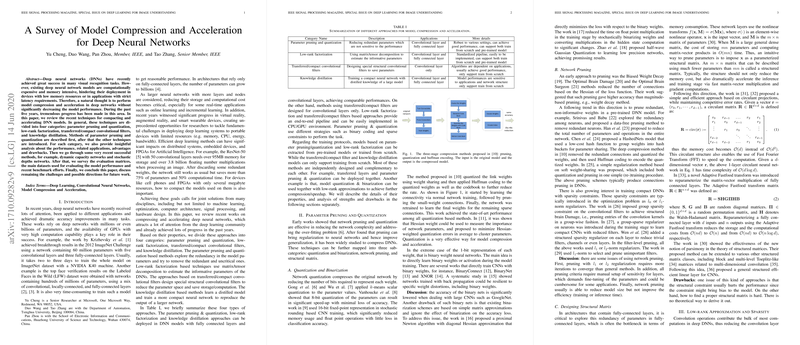

The power of DNNs in visual recognition tasks is well established, but it comes at the expense of significant computational and memory resources. For instance, AlexNet and ResNet-50 contain tens of millions of parameters, which makes them difficult to deploy in resource-constrained environments such as mobile devices and real-time systems. The paper groups existing model compression and acceleration techniques into four key areas: parameter pruning and quantization, low-rank factorization, transferred/compact convolutional filters, and knowledge distillation. It also discusses emerging techniques, evaluation metrics, and benchmarks, and offers insights into future research directions.

Parameter Pruning and Quantization

This category includes techniques that reduce the complexity of DNNs by removing redundant, non-informative parameters or by storing them with fewer bits. Pruning methods, such as those inspired by Optimal Brain Damage, eliminate insignificant weights or neurons, while quantization reduces the precision of weights, often using k-means clustering or scalar quantization. Han et al., for example, combine pruning, quantization, and Huffman coding in a three-stage pipeline. Binary networks such as BinaryNet and XNOR-Net represent the extreme case of quantization: they constrain weights (and, in some variants, activations) to binary values, trading some accuracy for computational efficiency.
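To make the pruning and quantization steps concrete, the following is a minimal Python sketch on a flat list of weights. It assumes magnitude-based pruning controlled by a single per-layer sparsity fraction, scalar k-means weight sharing with linearly initialized centroids, and XNOR-Net-style binarization with a mean-absolute-value scale. The function names are hypothetical, and unlike Han et al.'s pipeline the sketch performs no retraining and omits Huffman coding.

```python
import random


def magnitude_prune(weights, sparsity):
    """Zero the `sparsity` fraction of weights with the smallest magnitude.

    `sparsity` is a hypothetical per-layer knob; choosing it layer by layer
    is the sensitivity-tuning problem discussed in the text.
    """
    k = int(len(weights) * sparsity)  # how many weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])  # indices of the k smallest |w|
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def kmeans_share(weights, n_clusters, iters=20):
    """Scalar k-means weight sharing over the nonzero (unpruned) weights.

    Each surviving weight is replaced by its nearest centroid, so a layer can be
    stored as a small codebook plus a log2(n_clusters)-bit index per weight.
    Requires n_clusters >= 2 and at least one nonzero weight.
    """
    nonzero = [w for w in weights if w != 0.0]
    lo, hi = min(nonzero), max(nonzero)
    # Linear initialization: centroids evenly spaced between min and max.
    centroids = [lo + (hi - lo) * j / (n_clusters - 1) for j in range(n_clusters)]

    def nearest(w):
        return min(range(n_clusters), key=lambda c: abs(w - centroids[c]))

    for _ in range(iters):
        buckets = [[] for _ in range(n_clusters)]
        for w in nonzero:
            buckets[nearest(w)].append(w)
        # Move each centroid to the mean of its bucket (keep it if the bucket is empty).
        centroids = [sum(b) / len(b) if b else c for b, c in zip(buckets, centroids)]
    shared = [0.0 if w == 0.0 else centroids[nearest(w)] for w in weights]
    return shared, centroids


def binarize(weights):
    """XNOR-Net-style weight binarization: alpha * sign(w), with alpha = mean |w|."""
    alpha = sum(abs(w) for w in weights) / len(weights)
    return [alpha if w >= 0 else -alpha for w in weights], alpha


# Usage on a toy layer of 1,000 Gaussian weights.
random.seed(0)
layer = [random.gauss(0.0, 1.0) for _ in range(1000)]
pruned = magnitude_prune(layer, sparsity=0.9)          # keep the largest 10%
shared, codebook = kmeans_share(pruned, n_clusters=4)  # 2-bit indices
binary, alpha = binarize(layer)
print(sum(w != 0.0 for w in pruned), len(set(shared) - {0.0}), alpha)
```

Keeping the sparsity fraction as an explicit argument makes the per-layer tuning burden visible: a real network would need one such value per layer.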

Discussion

While these methods effectively reduce model size and increase inference speed, they typically require extensive retraining to fine-tune the compressed model. In addition, pruning criteria usually require the sensitivity of each layer to be set manually, which is cumbersome to tune.

Low-Rank Factorization

Methods in this category use matrix and tensor decomposition to approximate DNN parameters. Convolution kernels can be treated as 4-D tensors and fully connected layers as 2-D matrices, so both admit low-rank approximations. Techniques such as canonical polyadic (CP) decomposition and low-rank approximations trained with batch normalization reduce both memory footprint and computational overhead. These techniques share similarities with classical dimensionality reduction methods used in signal processing.
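The core idea can be illustrated on a fully connected layer: an m x n weight matrix W is replaced by two thin factors of sizes m x r and r x n, which cuts the parameter count from mn to r(m + n). The sketch below is a hedged, pure-Python illustration that computes a truncated SVD by power iteration with deflation; it is not CP decomposition or any specific method from the survey, and all helper names are hypothetical. In practice a library SVD routine would be used instead.

```python
import random


def matvec(A, x):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def norm(x):
    return sum(t * t for t in x) ** 0.5


def truncated_svd(A, rank, iters=200, seed=0):
    """Rank-`rank` SVD via power iteration with deflation.

    Returns a list of (sigma, u, v) such that A is approximately sum(sigma * u v^T).
    Power iteration converges slowly if two singular values are nearly equal.
    """
    rng = random.Random(seed)
    R = [row[:] for row in A]  # residual matrix, deflated after each component
    factors = []
    for _ in range(rank):
        Rt = [list(col) for col in zip(*R)]  # transpose of the residual
        v = [rng.gauss(0.0, 1.0) for _ in range(len(Rt))]
        for _ in range(iters):
            v = matvec(Rt, matvec(R, v))  # one step of v <- R^T R v
            n = norm(v)
            if n == 0.0:
                break
            v = [t / n for t in v]
        Av = matvec(R, v)
        sigma = norm(Av)
        if sigma == 0.0:  # residual is already zero
            break
        u = [t / sigma for t in Av]
        factors.append((sigma, u, v))
        # Deflate: subtract the component just found from the residual.
        R = [[R[i][j] - sigma * u[i] * v[j] for j in range(len(v))] for i in range(len(u))]
    return factors


def factorized_layer(factors):
    """Split W ~ U V into two thin layers: U is m x r (sigma folded in), V is r x n."""
    V = [v for _, _, v in factors]  # r rows of length n
    U = [list(col) for col in zip(*[[s * x for x in u] for s, u, _ in factors])]  # m x r
    return U, V


# A 4 x 3 matrix built as the sum of two outer products, so its rank is 2.
a1, b1 = [1.0, 2.0, 0.0, 1.0], [1.0, 0.0, 1.0]
a2, b2 = [0.0, 1.0, 1.0, 0.0], [0.0, 1.0, -1.0]
W = [[a1[i] * b1[j] + a2[i] * b2[j] for j in range(3)] for i in range(4)]
U, V = factorized_layer(truncated_svd(W, rank=2))
x = [0.5, -1.0, 2.0]
print(matvec(W, x), matvec(U, matvec(V, x)))  # agree up to rounding

m, n, r = 4096, 4096, 256
print(m * n, r * (m + n))  # 16777216 2097152
```

For the 4096 x 4096 example, a rank-256 factorization stores 8x fewer parameters, and the forward pass becomes two smaller matrix-vector products.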

Discussion

Low-rank approximations can deliver significant compression and speedup, but the decomposition itself is computationally expensive. Furthermore, because current methods approximate the network one layer at a time, they cannot exploit redundancy across the whole model, which leaves room for global compression methods.

Transferred and Compact Convolutional Filters

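These methods target convolutional layers, either by deriving filters from a small set of base filters through transformations or by designing compact filters that shrink the parameter space. Transferred filters obtain new filters from existing ones through transformations such as rotation and translation. Compact designs replace larger filters with cheaper ones: SqueezeNet substitutes many 3x3 filters with 1x1 filters, and MobileNets factorize standard convolutions into depthwise convolutions followed by 1x1 pointwise convolutions. The underlying intuition is that filters related by such transformations are redundant, so the network only needs to store or learn a subset of them.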
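The parameter savings from compact filters follow from simple arithmetic. The sketch below, with hypothetical helper names, counts weights for a standard k x k convolution and for a depthwise separable one, and builds a toy bank of rotated filters to illustrate the transferred-filter idea. Biases are ignored, and the rotation example is only a simplified stand-in for the transformations used in the literature.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution layer (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """MobileNets-style factorization: one k x k depthwise filter per input
    channel, then a 1 x 1 pointwise convolution that mixes channels."""
    return k * k * c_in + c_in * c_out


def rotate90(f):
    """Rotate a square filter (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*f[::-1])]


def rotated_filter_bank(f):
    """Toy transferred-filter bank: four filters derived from one stored filter
    by rotation, so only the k*k values of f are free parameters."""
    bank = [f]
    for _ in range(3):
        bank.append(rotate90(bank[-1]))
    return bank


k, c_in, c_out = 3, 32, 64
print(conv_params(k, c_in, c_out))                        # 18432
print(depthwise_separable_params(k, c_in, c_out))         # 2336
print(conv_params(3, 64, 64) // conv_params(1, 64, 64))   # 9
print(rotated_filter_bank([[1, 2], [3, 4]])[1])           # [[3, 1], [4, 2]]
```

For a 3x3 layer with 32 input and 64 output channels, the depthwise separable version needs 2,336 weights instead of 18,432, a ratio of exactly 1/c_out + 1/k^2. Replacing a 3x3 filter with a 1x1 filter cuts its weights by a factor of 9.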

Discussion

Although these methods work well for wide architectures such as VGG and AlexNet, they are less effective on narrower or more specialized networks such as ResNet. Moreover, the transfer assumptions can be too strong to guide learning, which makes results unstable on some datasets.

Knowledge Distillation

Knowledge distillation transfers knowledge from a large teacher model to a smaller student model, typically by training the student to mimic the teacher's softened output distribution. Approaches such as FitNets, which also match the teacher's intermediate representations, and attention transfer, which matches attention maps, extend this paradigm to compress models without significantly compromising performance.
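A common formulation of the softened-output objective, following Hinton et al., mixes a KL-divergence term between temperature-softened teacher and student distributions with the usual cross-entropy on hard labels. The sketch below is a minimal pure-Python version for a single example; the temperature T = 4 and weight alpha = 0.5 are assumed defaults, not values from the survey, and the function names are hypothetical. The soft term is multiplied by T^2, as Hinton et al. suggest, so its gradient magnitude stays comparable as T changes.

```python
import math


def softmax(logits, T=1.0):
    """Temperature-scaled softmax; a larger T gives a softer distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    soft = kl_divergence(softmax(teacher_logits, T), softmax(student_logits, T))
    hard = -math.log(softmax(student_logits)[label])  # ordinary cross-entropy at T = 1
    return alpha * (T * T) * soft + (1 - alpha) * hard


teacher = [6.0, 2.0, -1.0]
student = [3.0, 1.5, 0.5]
print(softmax(teacher, T=1.0))
print(softmax(teacher, T=4.0))  # softer: more mass on the non-target classes
print(distillation_loss(student, teacher, label=0))
```

Raising T exposes how the teacher ranks the incorrect classes, which is the extra signal the student learns from beyond the hard label.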

Discussion

While knowledge distillation is versatile and straightforward to implement, it can only be applied to classification tasks that use a softmax loss, which limits its general applicability.

Emerging Techniques and Challenges

Recent contributions use attention mechanisms, dynamic network architectures, and conditional computation to make DNNs more efficient. Dynamic capacity networks and sparsely-gated mixture-of-experts layers, for example, concentrate expensive computation on selected inputs, regions, or subnetworks instead of applying the full model uniformly.
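Conditional computation can be illustrated with top-k gating in a mixture-of-experts layer: a gate scores every expert, but only the k highest-scoring experts are evaluated, and their outputs are combined with renormalized weights. This pure-Python sketch uses scalar inputs, a hypothetical linear gate, and toy experts, and it omits the noise and load-balancing terms that sparsely-gated mixture-of-experts layers add in practice.

```python
import math


def top_k_gating(gate_logits, k):
    """Keep the k largest gate logits, renormalize them with a softmax,
    and return {expert_index: weight}; all other experts get weight 0."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)  # subtract max for numerical stability
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x, experts, gate_weights, k=2):
    """Conditional computation: a hypothetical linear gate scores every expert,
    but only the k selected experts are actually evaluated."""
    gate_logits = [g * x for g in gate_weights]
    weights = top_k_gating(gate_logits, k)
    output = sum(w * experts[i](x) for i, w in weights.items())
    return output, len(weights)  # len(weights) = number of experts evaluated


# Eight toy "experts"; expert a simply scales its input by a.
experts = [lambda x, a=a: a * x for a in range(8)]
gate_weights = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, 1.0, -2.0]
y, n_evaluated = moe_forward(1.5, experts, gate_weights, k=2)
print(y, n_evaluated)  # only 2 of 8 experts run
```

The cost per input scales with k rather than with the total number of experts, which is what lets such layers grow capacity without a matching growth in computation.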

Challenges and Future Work

Key challenges include fine-tuning compressed models, integrating domain-specific knowledge to guide compression, and the lack of transparency in compressed models. Suggested future directions include neural architecture search, hardware-aware approaches, channel pruning, and more advanced knowledge transfer techniques.

Conclusion

Cheng et al.'s survey is a thorough examination of the current landscape in DNN compression and acceleration. It delineates the strengths and weaknesses of various methodologies while providing a roadmap for both theoretical advancements and practical implementations. As the field advances, integrating interdisciplinary solutions will be crucial for developing more efficient and deployable DNN architectures.