End-to-End Learning for Negotiation Dialogues

The research paper "Deal or No Deal? End-to-End Learning for Negotiation Dialogues" explores negotiation as a problem in natural language processing, focusing on AI models that can negotiate with human-like reasoning and linguistic skill. The authors emphasize that negotiation is semi-cooperative: agents must cooperate to reach an agreement while also competing strategically over its terms.

Contribution and Methodology

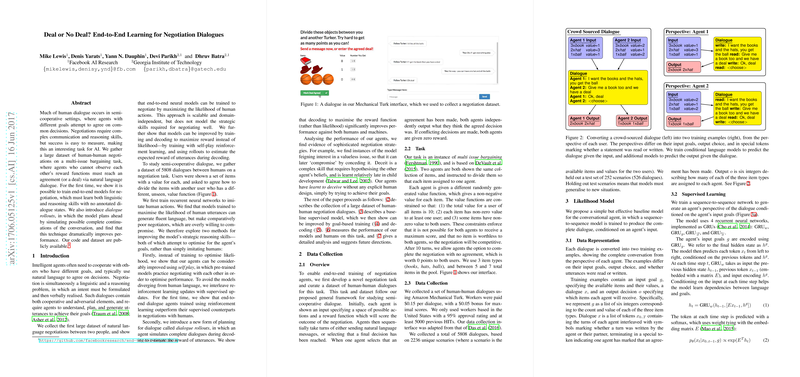

This paper presents a new dataset of 5808 human-human negotiation dialogues, which serves as training data for end-to-end neural models. The negotiations follow a multi-issue bargaining task: two agents are shown the same collection of items (books, hats, and balls), each agent has its own private value for every item, and they must agree on how to divide the items without knowing each other's value functions. Because the dialogues are in unrestricted natural language with no annotated dialogue states, the task demands both linguistic fluency and strategic reasoning.
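To make the bargaining task concrete, here is a minimal sketch of how a proposed division could be scored from each agent's private values. The item counts and values below are hypothetical (chosen so that each agent's items total 10), and the rule that a failed negotiation scores zero for both sides is an assumption of this sketch; the names `settle` and `score` are illustrative, not taken from the paper's code.

```python
from typing import Dict

# Hypothetical example: both agents see the same item counts,
# but each knows only its own values.
COUNTS = {"book": 3, "hat": 2, "ball": 1}
VALUES_A = {"book": 1, "hat": 2, "ball": 3}  # 3*1 + 2*2 + 1*3 = 10
VALUES_B = {"book": 2, "hat": 2, "ball": 0}  # 3*2 + 2*2 + 1*0 = 10


def score(share: Dict[str, int], values: Dict[str, int]) -> int:
    """Total private value an agent assigns to the items it receives."""
    return sum(values[item] * n for item, n in share.items())


def settle(share_a: Dict[str, int], counts: Dict[str, int],
           values_a: Dict[str, int], values_b: Dict[str, int],
           agreed: bool = True) -> tuple:
    """Return (score_a, score_b); agent B receives whatever A does not take.

    Assumption for this sketch: without an agreement, both agents score 0.
    """
    if not agreed:
        return 0, 0
    for item, n in share_a.items():
        if not 0 <= n <= counts[item]:
            raise ValueError(f"invalid count for {item}: {n}")
    share_b = {item: counts[item] - share_a.get(item, 0) for item in counts}
    return score(share_a, values_a), score(share_b, values_b)
```

For example, if agent A takes one hat and the ball, `settle({"book": 0, "hat": 1, "ball": 1}, COUNTS, VALUES_A, VALUES_B)` gives A a score of 5 and B a score of 8. Because the agents value items differently, some divisions are good for both sides, which is what makes the task semi-cooperative.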

The paper first trains models with supervised learning: recurrent neural networks (RNNs) imitate human negotiators by maximizing the likelihood of the observed dialogues. However, the authors find that such models negotiate poorly; they tend to be overly accommodating and too willing to accept weak deals.
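"Maximizing the likelihood of the observed dialogues" amounts to minimizing the negative log-likelihood of each human dialogue's tokens under the model. The sketch below assumes the model's next-token distributions are already available as dictionaries (a hypothetical stand-in for the RNN's softmax outputs) and only shows the quantity being minimized, not the network itself.

```python
import math
from typing import Dict, Sequence


def dialogue_nll(step_probs: Sequence[Dict[str, float]],
                 tokens: Sequence[str]) -> float:
    """Negative log-likelihood of an observed token sequence.

    step_probs[t] is the model's (hypothetical) distribution over the next
    token given the agent's goals and tokens[:t]. Supervised training drives
    this value down, i.e. it makes the human dialogue more probable.
    """
    if len(step_probs) != len(tokens):
        raise ValueError("need exactly one distribution per token")
    return -sum(math.log(dist[tok]) for dist, tok in zip(step_probs, tokens))


# Toy example: the model assigns 0.5 to "i" and then 0.25 to "hats".
probs = [{"i": 0.5, "want": 0.5}, {"hats": 0.25, "balls": 0.75}]
loss = dialogue_nll(probs, ["i", "hats"])  # equals -(ln 0.5 + ln 0.25) = 3 ln 2
```

Nothing in this objective refers to the final deal's value, which helps explain why purely imitative models need not learn to bargain well.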

To address these shortcomings, the authors turn to goal-oriented methods. In reinforcement learning through self-play, a pre-trained supervised model negotiates against another model and is updated to increase the score it obtains from the final deal. Separately, the authors propose "dialogue rollouts," a decoding method in which the agent samples candidate utterances, simulates possible continuations of the dialogue for each, and chooses the candidate with the highest expected outcome.
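Both goal-oriented ideas can be sketched in a few lines. The sketch below is illustrative, not the authors' implementation: `simulate` is a hypothetical callback that completes the dialogue after a candidate utterance and returns the resulting score, and the per-token credit assignment assumes a REINFORCE-style update in which the end-of-dialogue reward, minus a baseline, is discounted back over the agent's tokens. The discount `gamma` and the baseline are assumed parameters.

```python
from statistics import mean
from typing import Callable, List, Sequence


def choose_by_rollouts(candidates: Sequence[str],
                       simulate: Callable[[str], float],
                       n_rollouts: int = 10) -> str:
    """Dialogue-rollout decoding (sketch).

    For each candidate utterance, run `simulate` (hypothetical: finish the
    dialogue with the model and score the deal) several times, then return
    the candidate with the highest average simulated score.
    """
    def expected_score(utterance: str) -> float:
        return mean(simulate(utterance) for _ in range(n_rollouts))

    return max(candidates, key=expected_score)


def discounted_returns(num_tokens: int, reward: float, baseline: float,
                       gamma: float = 0.95) -> List[float]:
    """Per-token weights for a REINFORCE-style update (assumed form).

    The reward arrives only when the dialogue ends, so token t (0-indexed)
    of T tokens is weighted by gamma**(T - 1 - t) * (reward - baseline):
    later tokens, closer to the outcome, receive more credit.
    """
    advantage = reward - baseline
    return [gamma ** (num_tokens - 1 - t) * advantage for t in range(num_tokens)]
```

In a training loop, each weight from `discounted_returns` would multiply the gradient of the log-probability of the corresponding token the agent generated. At decoding time, `choose_by_rollouts` trades extra computation for foresight: an utterance that looks plausible but tends to lead to poor deals is rejected because its simulated continuations score lower.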

Results and Analysis

The experiments show that combining self-play reinforcement learning with dialogue rollouts produces the strongest negotiators. Models optimized with these techniques not only achieve higher negotiation scores than the supervised baseline but also exhibit sophisticated strategic behavior such as feigned interest: pretending to value an item that is worthless to them, so that they can later "concede" it in exchange for something they want. This is notable because deception is a complex skill that humans acquire relatively late in child development.

When evaluated against human negotiators, models trained with reinforcement learning and rollouts achieve competitive results, combining fluent language with effective negotiation strategies. The paper notes that in some cases these models outperform humans by taking a tougher negotiating stance, although this sometimes causes negotiations to end without agreement.

Implications and Future Work

The practical implications of this research extend to AI applications requiring negotiation capabilities, such as automated customer service and autonomous agents in collaborative settings. Theoretically, the paper contributes insights into the learning dynamics of negotiation strategies within neural models.

Suggested directions for future research include increasing the diversity of generated utterances and exploring other negotiation domains to test whether learned strategies transfer between tasks. More advanced planning algorithms, such as Monte Carlo Tree Search, could also improve the decision-making of negotiation agents.

This paper offers a substantive step forward in developing AI systems with an enhanced ability to engage in complex dialogue tasks that require both reasoning and linguistic proficiency.