Learning Two-Branch Neural Networks for Image-Text Matching Tasks: An Expert Overview

The paper presents an in-depth investigation of two-branch neural networks for image-text matching, an area of growing interest in computer vision. It covers two such tasks, image-sentence matching and region-phrase matching, both of which require measuring how well a piece of text describes an image or an image region.

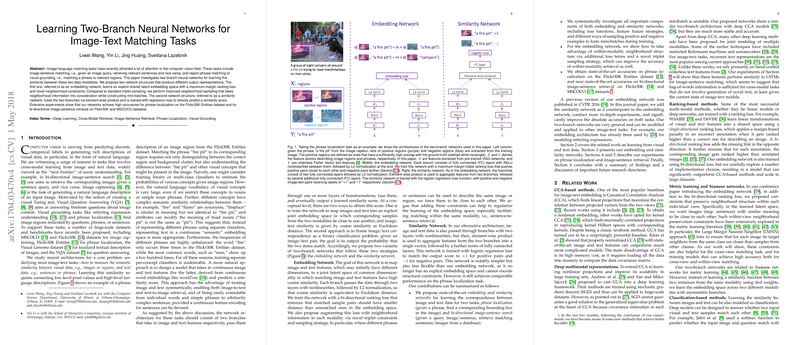

Network Architectures: Embedding vs. Similarity

Two distinct architectures are proposed: embedding networks and similarity networks. The embedding network learns an explicit shared latent embedding space using a bidirectional maximum-margin ranking loss. To improve retrieval accuracy, it adds novel neighborhood constraints and improves how training triplets are sampled, including positive region augmentation. The similarity network, by contrast, fuses the two branches with an element-wise product and is trained with a logistic regression loss to predict a similarity score for each image-text pair directly.
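The two training objectives can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names are hypothetical, the ranking loss uses Euclidean distance between L2-normalized embeddings and treats every non-matching pair in a mini-batch as a negative, the margin and direction weight are illustrative values, and the similarity network uses one ReLU layer per branch plus a single linear output layer (the layer count is an assumption chosen for brevity).

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row of x to unit L2 norm."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def pairwise_dist(a, b):
    """Euclidean distance matrix with d[i, j] = ||a_i - b_j||."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


# ---- Embedding network objective (hypothetical helper names) ----
def bidirectional_ranking_loss(img_emb, txt_emb, margin=0.1, lam=1.5):
    """Max-margin ranking loss over all in-batch triplets.

    Row i of img_emb matches row i of txt_emb; every other row is a negative.
    margin and lam (weight of the text-to-image direction) are illustrative.
    """
    d = pairwise_dist(l2_normalize(img_emb), l2_normalize(txt_emb))
    pos = np.diag(d)                          # d(x_i, y_i) for matching pairs
    neg_mask = ~np.eye(len(d), dtype=bool)    # True for non-matching pairs
    # image -> text: for image i, compare d(x_i, y_i) with d(x_i, y_k)
    i2t = np.maximum(0.0, margin + pos[:, None] - d)[neg_mask].sum()
    # text -> image: for sentence j, compare d(x_j, y_j) with d(x_k, y_j)
    t2i = np.maximum(0.0, margin + pos[None, :] - d)[neg_mask].sum()
    return i2t + lam * t2i


# ---- Similarity network (one ReLU layer per branch, an assumption) ----
def similarity_scores(img_feat, txt_feat, w_img, w_txt, w_out):
    """Project each branch, normalize, fuse by element-wise product, score."""
    u = l2_normalize(np.maximum(0.0, img_feat @ w_img))  # image branch
    v = l2_normalize(np.maximum(0.0, txt_feat @ w_txt))  # text branch
    return (u * v) @ w_out                               # one score per pair


def logistic_loss(scores, labels):
    """Mean of log(1 + exp(-y * s)) for labels y in {+1, -1}."""
    return np.logaddexp(0.0, -labels * scores).mean()
```

With the embedding network, smaller distances mean better matches, so candidates are ranked by ascending distance; the similarity network's scores are ranked in descending order instead.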

Results and Comparison

The networks were evaluated on Flickr30K Entities for phrase localization and on Flickr30K and MSCOCO for bidirectional image-sentence retrieval. In phrase localization, the embedding network reached up to 51.03% Recall@1 and the similarity network 51.05%, so the two architectures perform essentially on par for this task. Neighborhood sampling gave the embedding network a further improvement.
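Phrase-localization Recall@K can be computed as in the sketch below, assuming the commonly used Flickr30K Entities criterion: a phrase counts as correctly localized when one of its top-K scored region proposals has an intersection-over-union (IoU) of at least 0.5 with the ground-truth box. The function names are hypothetical, and using a single ground-truth box per phrase is a simplification.

```python
import numpy as np


def _area(b):
    """Area of box(es) given as (x1, y1, x2, y2) along the last axis."""
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])


def iou(box, boxes):
    """Intersection-over-union of one box against an (R, 4) array of boxes."""
    box, boxes = np.asarray(box, float), np.asarray(boxes, float)
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    return inter / (_area(box) + _area(boxes) - inter)


def phrase_recall_at_k(scores, proposals, gt_boxes, k=1, thresh=0.5):
    """Percentage of phrases whose top-k proposals contain a correct box.

    scores[p]    : (R,) score of each proposal for phrase p (higher = better;
                   for an embedding network, pass negated distances)
    proposals[p] : (R, 4) candidate boxes for phrase p
    gt_boxes[p]  : (4,) ground-truth box for phrase p
    """
    hits = 0
    for s, props, gt in zip(scores, proposals, gt_boxes):
        top = np.argsort(-np.asarray(s))[:k]   # indices of the k best proposals
        hits += bool((iou(gt, np.asarray(props)[top]) >= thresh).any())
    return 100.0 * hits / len(scores)
```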

For image-sentence retrieval, the embedding network performed better with HGLMM Fisher vector sentence features than with LSTM-based features. On MSCOCO it achieved Recall@1 of 54.9% for image-to-sentence and 43.3% for sentence-to-image retrieval, showing that the shared embedding space supports retrieval in both directions.
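Both retrieval directions can be scored from one image-by-sentence similarity matrix, as in the following sketch. It assumes a fixed number of captions per image (five by default), stored consecutively so that sentence j belongs to image j // 5; the function name and this layout are hypothetical. An image counts as a hit if any of its captions ranks in the top K, while each sentence has exactly one correct image.

```python
import numpy as np


def retrieval_recall(sim, caps_per_image=5, ks=(1, 5, 10)):
    """Bidirectional Recall@K (in percent) from an image-by-sentence matrix.

    sim[i, j] scores image i against sentence j (higher = more similar);
    sentence j is assumed to describe image j // caps_per_image.
    """
    n_img, n_sent = sim.shape
    owner = np.arange(n_sent) // caps_per_image  # ground-truth image per sentence
    # image -> sentence: 0-based rank of the best-ranked correct caption
    i2s_rank = np.empty(n_img, dtype=int)
    for i in range(n_img):
        order = np.argsort(-sim[i])              # sentences, best first
        i2s_rank[i] = np.where(owner[order] == i)[0].min()
    # sentence -> image: 0-based rank of the single correct image
    s2i_rank = np.empty(n_sent, dtype=int)
    for j in range(n_sent):
        order = np.argsort(-sim[:, j])           # images, best first
        s2i_rank[j] = np.where(order == owner[j])[0][0]
    i2s = {k: 100.0 * np.mean(i2s_rank < k) for k in ks}
    s2i = {k: 100.0 * np.mean(s2i_rank < k) for k in ks}
    return i2s, s2i
```

For an embedding network, negated distances can be passed as `sim` so that higher still means more similar.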

Neighborhood Constraints

The investigation highlights the importance of the neighborhood-preserving constraints, which substantially improve within-view retrieval, as measured by sentence-to-sentence retrieval. The constraints require sentences that describe the same image to lie closer to one another in the embedding space than to sentences describing other images, so that semantically similar items within one modality stay close after embedding, which also benefits retrieval accuracy.
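A within-view neighborhood term can be sketched as a hinge loss over (anchor, neighbor, non-neighbor) sentence triples. This is a minimal sketch under assumptions: a sentence's neighbors are taken to be the other sentences of the same image, and the function name, the Euclidean distance on normalized embeddings, and the margin are illustrative. In training, such a term would be added with some weight to a cross-view ranking loss rather than used on its own.

```python
import numpy as np


def neighborhood_constraint_loss(sent_emb, image_ids, margin=0.1):
    """Hinge loss asking each sentence to be closer (by `margin`) to sentences
    of the same image than to sentences of any other image."""
    y = sent_emb / np.linalg.norm(sent_emb, axis=1, keepdims=True)
    d = np.sqrt(((y[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1))
    ids = np.asarray(image_ids)
    same = ids[:, None] == ids[None, :]
    np.fill_diagonal(same, False)          # a sentence is not its own neighbor
    other = ids[:, None] != ids[None, :]   # sentences of different images
    loss = 0.0
    for i in range(len(y)):
        pos, neg = d[i, same[i]], d[i, other[i]]
        if pos.size and neg.size:
            # every (neighbor j, non-neighbor k) pair for anchor sentence i
            loss += np.maximum(0.0, margin + pos[:, None] - neg[None, :]).sum()
    return loss
```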

Practical Implications and Future Work

This research is relevant to applications that depend on accurate image-text matching, such as visual search engines and content-based image and text generation. One notable challenge identified is the integration of local (region-phrase) and global (image-sentence) models, which remains open for future exploration. Training these tasks jointly and improving the models' ability to detect fine-grained distinctions could further advance the field.

The paper serves as a comprehensive study of two-branch networks for image-text matching, providing a foundation for further developments in AI-driven visual understanding tasks.