A Knowledge-Grounded Neural Conversation Model

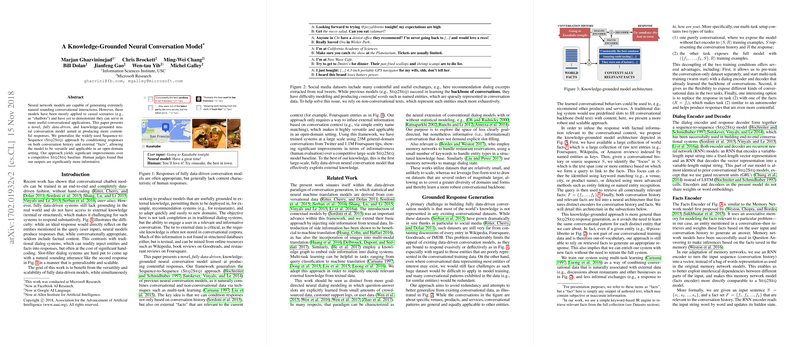

The paper "A Knowledge-Grounded Neural Conversation Model" addresses a significant gap in neural conversation systems: their tendency to produce responses that are fluent but lack substantive content. Traditional Sequence-to-Sequence (Seq2Seq) models can generate contextually appropriate replies, but they have no mechanism for drawing on factual knowledge about the entities under discussion. This research introduces a fully data-driven neural conversation model that conditions its outputs on both the conversational context and external knowledge sources, making its responses more informative.

Methodology Overview

The authors extend the Seq2Seq approach by feeding external "facts" to the model as additional inputs. A recurrent encoder encodes the conversation history, and a memory-network-style module encodes the facts relevant to that history. The combined representation then conditions the decoder that generates the response. Through multi-task learning, the authors combine conversational and non-conversational data. This lets the model train on both generic and entity-grounded datasets without relying on the explicit slot filling common in traditional dialog systems.
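To make the facts-encoding step concrete, here is a minimal sketch of a single memory-network hop in plain Python. It makes three simplifying assumptions:

- The recurrent encoder has already produced a summary vector `u` for the conversation history. The encoder itself is not shown.
- Each fact is represented as a bag of words.
- Two small hypothetical embedding tables stand in for the learned key and value projections.

The sketch computes attention weights over the facts as a softmax of dot products with `u`. It then adds the weighted sum of fact values back to `u`, giving the vector that would condition the decoder. The names `facts_memory`, `key_emb`, and `value_emb` are illustrative and do not come from the paper's code.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def bag_of_words(words: List[str], emb: Dict[str, Vector], dim: int) -> Vector:
    """Sum the embeddings of the words in a fact; unknown words contribute zeros."""
    vec = [0.0] * dim
    for w in words:
        for k, x in enumerate(emb.get(w, [0.0] * dim)):
            vec[k] += x
    return vec


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def facts_memory(
    u: Vector,
    facts: List[List[str]],
    key_emb: Dict[str, Vector],    # hypothetical stand-in for a learned key projection
    value_emb: Dict[str, Vector],  # hypothetical stand-in for a learned value projection
    dim: int,
) -> Tuple[Vector, List[float]]:
    """One memory hop: attend over facts with query u and return (o + u, attention)."""
    keys = [bag_of_words(f, key_emb, dim) for f in facts]      # memory keys m_i
    values = [bag_of_words(f, value_emb, dim) for f in facts]  # memory values c_i
    p = softmax([dot(u, m) for m in keys])                     # attention p_i
    o = [sum(p[i] * values[i][k] for i in range(len(facts))) for k in range(dim)]
    u_hat = [ok + uk for ok, uk in zip(o, u)]                  # residual: o + u
    return u_hat, p


if __name__ == "__main__":
    # Toy 2-d embeddings; the query u leans toward the "pizza" direction.
    emb = {"pizza": [1.0, 0.0], "sushi": [0.0, 1.0], "great": [0.5, 0.5]}
    u_hat, attn = facts_memory([2.0, 0.0], [["great", "pizza"], ["great", "sushi"]], emb, emb, 2)
    print(attn, u_hat)
```

In the toy run, the fact mentioning pizza receives most of the attention weight because its key aligns with the query. A trained model would learn the projections jointly with the encoder and decoder rather than use fixed tables.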

The training data comprises 23 million general Twitter conversations and 1.1 million Foursquare tips. Combining the two lets the model learn general conversational structure and apply it across diverse domains. Grounding relies on simple entity name matching: entity names mentioned in the conversation are used to retrieve related knowledge snippets. Because this requires no hand-built schema, the method stays straightforward to apply in open-domain settings.
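The entity-matching step can be illustrated with a small retrieval function. The sketch assumes a hypothetical `fact_index` that maps lowercased entity names (for example, venue names) to their tips. It scans the conversation history for any entity name that appears as a contiguous word n-gram. The tokenizer, the `max_ngram` limit, and the example entries are assumptions for illustration, and the paper's exact matching procedure may differ.

```python
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase and split into simple word tokens (an assumed tokenizer)."""
    return re.findall(r"[a-z0-9@#']+", text.lower())


def retrieve_facts(
    history: str,
    fact_index: Dict[str, List[str]],  # hypothetical: "entity name" -> list of tips
    max_ngram: int = 4,
) -> List[str]:
    """Return the facts for every indexed entity name found as an n-gram in the history.

    Index keys must be lowercased, space-joined tokens, matching tokenize().
    """
    tokens = tokenize(history)
    found: List[str] = []
    seen = set()
    # Check longer n-grams first so multi-word names come before single-word ones.
    for n in range(max_ngram, 0, -1):
        for i in range(len(tokens) - n + 1):
            name = " ".join(tokens[i:i + n])
            if name in fact_index and name not in seen:
                seen.add(name)
                found.extend(fact_index[name])
    return found


if __name__ == "__main__":
    # Hypothetical index entries, for illustration only.
    index = {
        "blue cafe": ["The cold brew is excellent."],
        "harbor market": ["Go early on Saturdays."],
    }
    print(retrieve_facts("Heading to Blue Cafe later, any tips?", index))
```

Checking longer n-grams first only affects the order of the returned facts. A fuller implementation might also suppress shorter names nested inside a longer match.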

Numerical and Evaluation Insights

Human evaluations show a clear improvement. The grounded model outperformed a competitive Seq2Seq baseline in informativeness, and its appropriateness was comparable to the baseline's. On perplexity, the multi-task grounded models score comparably to models trained separately on the general and grounded data. This indicates that multi-task learning bridges the two datasets without sacrificing fit to either. BLEU scores and lexical diversity metrics further corroborate the gains in response quality and diversity.
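Two of the automatic metrics mentioned above take only a few lines to compute:

- **Perplexity:** the exponential of the average per-token negative log-likelihood.
- **Distinct-n:** a widely used lexical diversity measure. It divides the number of unique n-grams by the total number of n-grams across all generated responses.

The sketch below assumes the model's natural-log token probabilities are already available. Whether the paper normalizes diversity exactly this way is an assumption. BLEU is omitted because standard implementations already exist.

```python
import math
from typing import List, Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood per token (natural-log probabilities)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def distinct_n(responses: List[List[str]], n: int) -> float:
    """Unique n-grams divided by total n-grams over all tokenized responses."""
    total = 0
    unique = set()
    for tokens in responses:
        for i in range(len(tokens) - n + 1):
            unique.add(tuple(tokens[i:i + n]))
            total += 1
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    # A model that assigns probability 0.25 to every token has perplexity 4.
    print(perplexity([math.log(0.25)] * 4))
    responses = [["i", "like", "it"], ["i", "love", "it"]]
    print(distinct_n(responses, 1), distinct_n(responses, 2))
```

Lower perplexity means the model assigns higher probability to held-out responses. Higher distinct-n means fewer repeated n-grams, which is the property that counters the generic, repetitive replies typical of plain Seq2Seq models.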

Implications and Future Work

Practically, this research suggests a pathway for deploying more informative conversational agents that could be integrated into applications such as recommendation systems and open-domain conversational AI. Theoretically, it showcases the feasibility of combining conversational data with rich external knowledge sources, without necessitating the complexity of goal-directed dialog state management.

The research opens several directions for future work. Grounding could be refined beyond simple entity name matching, for example by incorporating knowledge graph embeddings. Extending the model to multimodal data could also enrich the dialog system's context-awareness and response capability.

In conclusion, this work is a thoughtful step forward for neural conversation systems. It integrates external factual knowledge into the generative process and lays groundwork for more capable open-domain conversational AI.