Overview of "Towards End-to-End Reinforcement Learning of Dialogue Agents for Information Access"

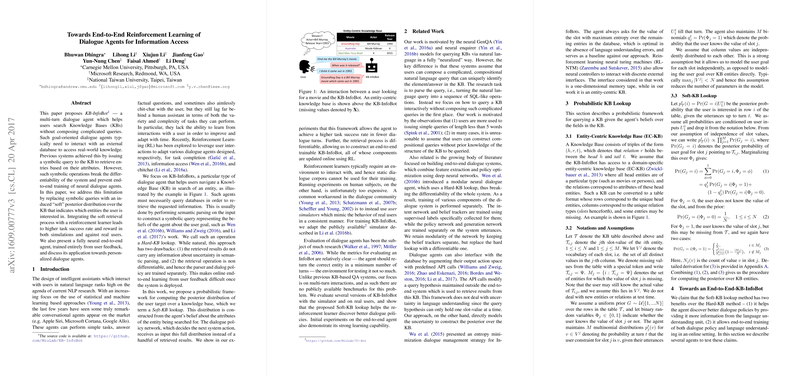

This paper presents KB-InfoBot, a multi-turn dialogue agent that helps users search a Knowledge Base (KB) without requiring them to compose complex queries. It tackles a common obstacle in building such systems: interacting with an external database through symbolic queries is a non-differentiable step, which blocks gradient-based training across it. The authors replace these symbolic queries with an induced "soft" posterior distribution over the KB entities, indicating which entities the user is likely interested in. This makes end-to-end training of the agent with reinforcement learning (RL) possible. The approach yields substantial improvements in task success rate and dialogue efficiency, both in simulated environments and in interactions with real users.

Contributions and Methodology

The primary contribution of this work is a soft-KB lookup that supports end-to-end training of dialogue agents through RL. Instead of issuing a query, the agent computes a posterior distribution over the entities in the KB from its belief about the user's request, so uncertainty in semantic parsing is carried forward rather than discarded. This differentiable operation contrasts sharply with a traditional hard lookup, which filters the KB with a symbolic query. A hard lookup therefore splits the system into separately trained modules that cannot easily learn from continuous user feedback.
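To make the hard/soft contrast concrete, here is a minimal sketch in plain Python. The toy movie KB, the belief values, and the names `hard_lookup`, `soft_lookup`, `beliefs` and `knows` are all hypothetical. The scoring rule is an assumption for illustration, not a transcription of the paper's equations. For each slot it mixes a uniform term (the user does not know that slot) with the belief in the row's value, spread evenly over every row sharing that value. It also assumes no missing values and uses plain floats instead of a neural network.

```python
from math import prod

# Hypothetical toy movie KB: one dict per entity (row), one key per slot.
KB = [
    {"actor": "Bill Murray", "year": "1993"},
    {"actor": "Bill Murray", "year": "2003"},
    {"actor": "Tom Hanks", "year": "1993"},
]


def hard_lookup(kb, beliefs):
    """Build a symbolic query from each slot's most likely value and return the
    indices of exactly matching rows. argmax and equality are discrete steps."""
    query = {slot: max(dist, key=dist.get) for slot, dist in beliefs.items()}
    return [i for i, row in enumerate(kb)
            if all(row[slot] == value for slot, value in query.items())]


def soft_lookup(kb, beliefs, knows):
    """Return a posterior over rows (assumed scoring rule, no missing values).

    Per slot: (1 - q) / N for "user does not know this slot", plus
    q * belief(row's value) / N_j(value), where N_j(value) counts rows sharing it.
    """
    n = len(kb)
    scores = []
    for row in kb:
        per_slot = []
        for slot, dist in beliefs.items():
            n_j = sum(1 for r in kb if r[slot] == row[slot])  # N_j(v)
            q = knows[slot]  # probability that the user knows this slot
            per_slot.append((1 - q) / n + q * dist.get(row[slot], 0.0) / n_j)
        scores.append(prod(per_slot))  # slots treated as independent
    total = sum(scores)
    return [s / total for s in scores]


# Hypothetical belief-tracker output after a couple of user turns.
beliefs = {
    "actor": {"Bill Murray": 0.6, "Tom Hanks": 0.4},
    "year": {"1993": 0.9, "2003": 0.1},
}
knows = {"actor": 1.0, "year": 1.0}

print(hard_lookup(KB, beliefs))  # [0]
print([round(p, 2) for p in soft_lookup(KB, beliefs, knows)])  # [0.39, 0.09, 0.52]
```

In this toy case the hard lookup commits to the most likely value of each slot and returns only row 0. The soft posterior ranks row 2 highest because the belief mass on "Bill Murray" is shared between two rows. Every step of the soft score is a product, sum, or division of belief values. In a neural implementation, gradients could therefore flow back into whatever produced `beliefs` and `knows`.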

The authors also build a fully end-to-end trainable agent that uses user feedback to improve its internal model, which opens the way to more personalized interactions. Because the lookup is differentiable, the agent's key components, the belief tracker and the policy network, can be trained together from the same feedback rather than in isolation.
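Learning from a scalar success signal calls for a policy-gradient method such as REINFORCE. The sketch below rests on heavy simplifying assumptions. Everything upstream of the KB posterior is collapsed into one hypothetical trainable score per entity. The single returned result is sampled from a softmax over those scores. The reward is 1 when that result matches a hypothetical target entity. This is not the paper's architecture. It only illustrates why sampling the answer from a differentiable distribution lets a reward be turned into a gradient.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def reinforce(num_entities, target, episodes=500, lr=0.5, seed=0):
    """REINFORCE on a toy one-step 'return an entity' task (hypothetical setup)."""
    rng = random.Random(seed)
    logits = [0.0] * num_entities  # hypothetical stand-in for all trainable parameters
    baseline = 0.0                 # running average reward, reduces gradient variance
    for _ in range(episodes):
        probs = softmax(logits)
        # Sample the returned entity from the current posterior.
        choice = rng.choices(range(num_entities), weights=probs)[0]
        reward = 1.0 if choice == target else 0.0  # hypothetical success signal
        advantage = reward - baseline
        # d/d logits[k] of log probs[choice] is 1[k == choice] - probs[k].
        for k in range(num_entities):
            indicator = 1.0 if k == choice else 0.0
            logits[k] += lr * advantage * (indicator - probs[k])
        baseline = 0.9 * baseline + 0.1 * reward
    return softmax(logits)


final = reinforce(num_entities=5, target=2)
print([round(p, 3) for p in final])
```

The gradient of the sampled entity's log-probability is just the one-hot vector of the choice minus the current probabilities. No gradient has to pass through the sampling step itself. In a full agent, that same signal could be backpropagated further into the components that produce the posterior.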

Results and Implications

Agents using the soft-KB lookup consistently outperformed their hard-KB counterparts in task success rate and dialogue efficiency across several KB sizes. The paper reports that exploiting the soft posterior distribution yields a higher average reward and shorter dialogues, indicating more effective and efficient interaction.

The approach has broad implications for the design and training of conversational AI systems, particularly for tasks that require retrieving knowledge during an interaction. Because retrieval is differentiable, it can be embedded in a neural pipeline that keeps learning from feedback, paving the way for more adaptive and robust dialogue systems.

Future Directions

The findings point to a promising future for fully neural dialogue agents that can be personalized through reinforcement learning. The methods introduced here could be extended and refined for other domains where adaptability and efficiency matter. Further research could explore architectures that preserve these gains on larger, more complex KBs. It could also pursue richer, more natural human-AI interaction beyond templated language output and narrow user models.

In sum, the soft-KB lookup is a significant step towards more intelligent and personalized dialogue systems. It points machine learning and NLP research towards models that are more tightly integrated with real-world feedback, an exciting avenue for the continued advancement of AI-driven communication tools.