- The paper provides a comprehensive survey of SLAM, detailing its evolution from classical probabilistic approaches to robust factor graph optimization.

- It emphasizes the integration of sensor data through front-end feature extraction and back-end MAP estimation for enhanced mapping accuracy.

- Challenges such as scalability, robustness in dynamic settings, and integration of semantic information are explored to advance next-generation SLAM systems.

Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age

Introduction and Historical Background

The paper "Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age" (arXiv:1606.05830) surveys the Simultaneous Localization and Mapping (SLAM) problem and its evolution over the past three decades. The authors divide this history into the classical age (1986-2004), which introduced the main probabilistic formulations, including approaches based on Extended Kalman Filters (EKF-SLAM) and Rao-Blackwellised particle filters (RBPF-SLAM), and the algorithmic-analysis age (2004-2015), which studied fundamental properties of SLAM such as observability, convergence, and consistency. Together, these periods trace a progression from probabilistic foundations toward practical, robust systems.

SLAM System Architecture

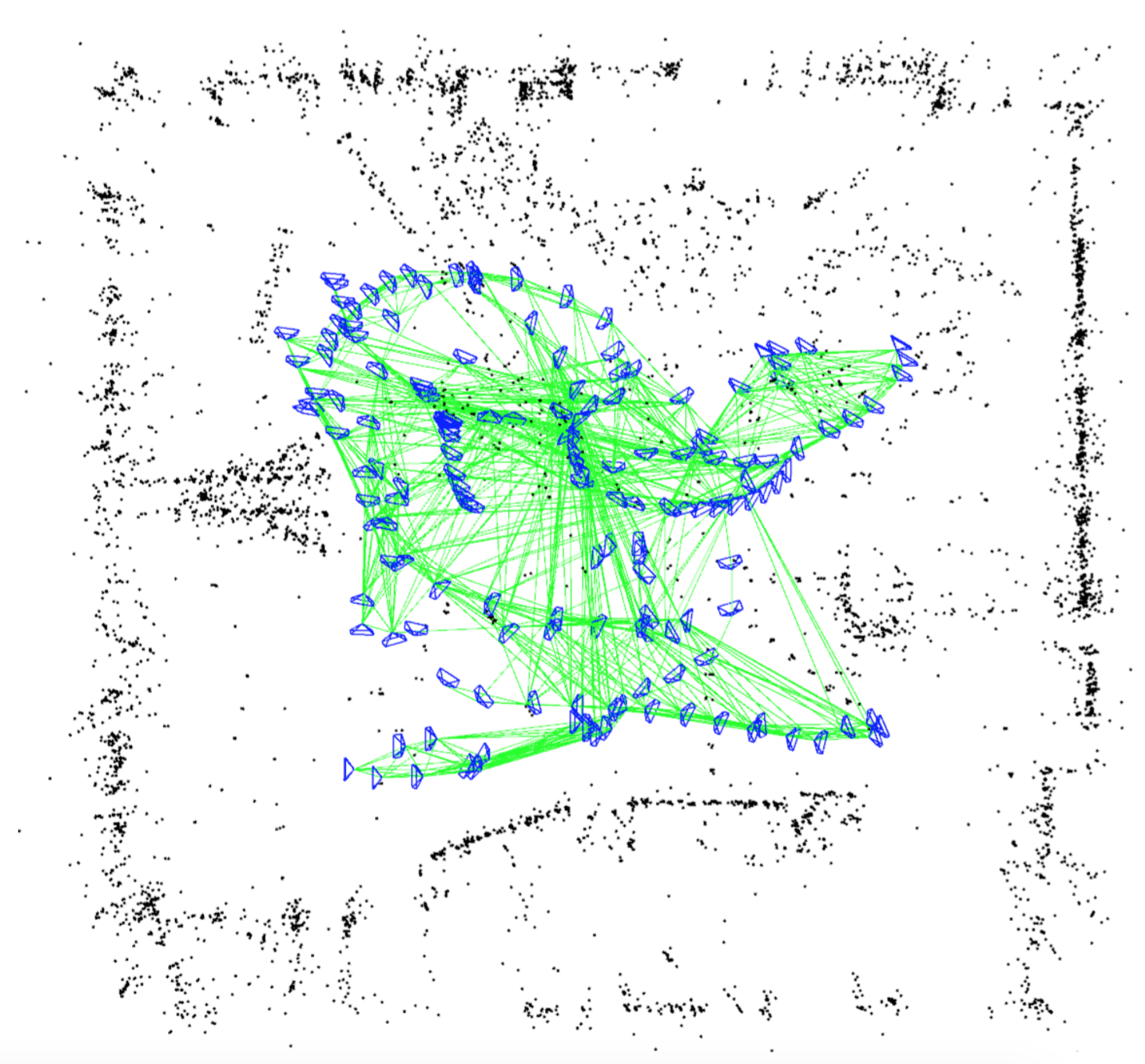

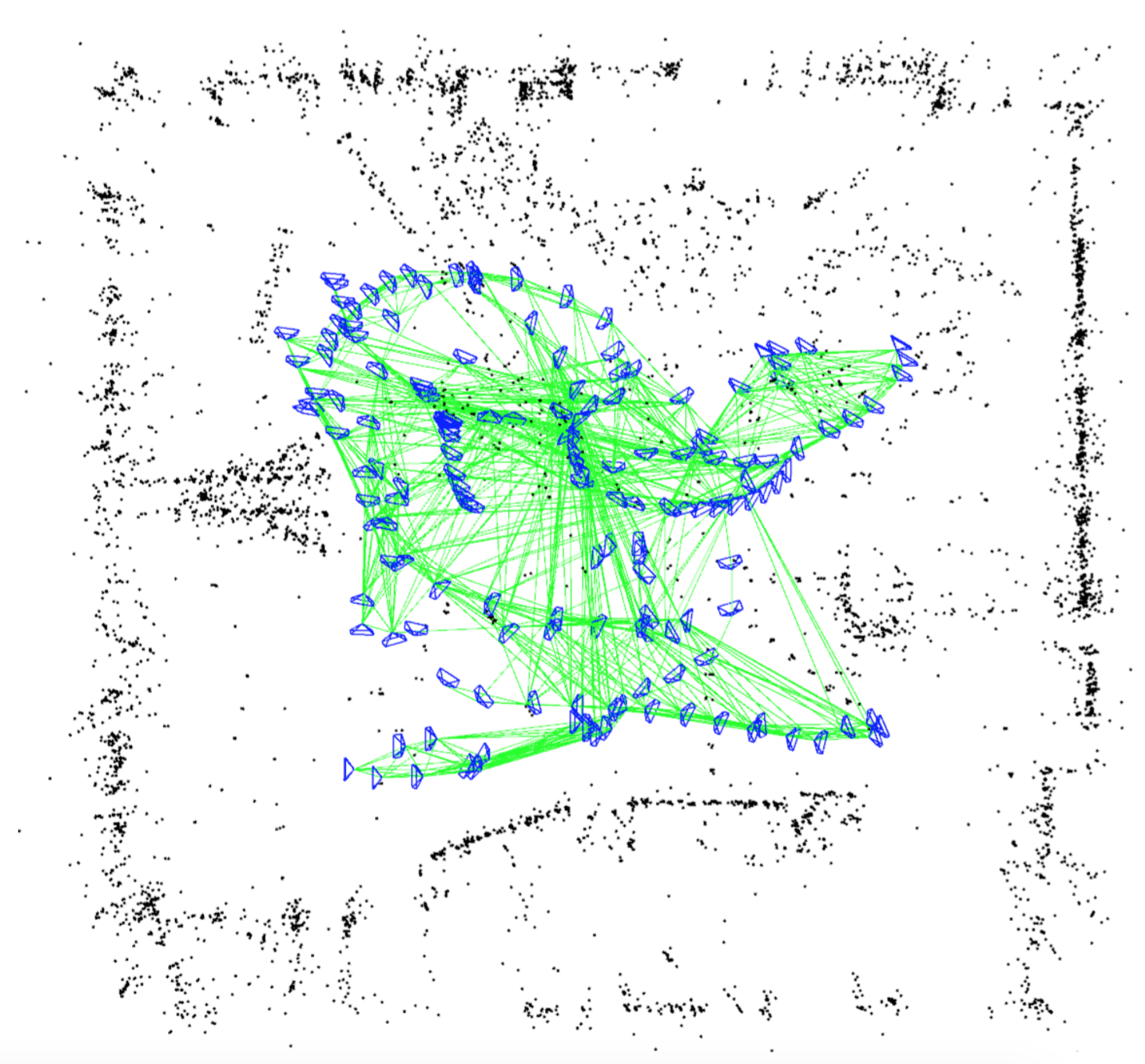

The paper divides a modern SLAM system into two main components: the front-end and the back-end. The front-end abstracts raw sensor data into models amenable to estimation; it handles feature extraction, data association, and the computation of initial guesses for the variables being estimated. The back-end performs inference on the abstracted data, typically by formulating SLAM as a Maximum a Posteriori (MAP) estimation problem over a factor graph and solving it by optimization. Factor graphs expose the sparsity of the problem, which is what allows large-scale instances to be solved accurately and efficiently.
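The back-end idea can be illustrated with a toy problem. The following is a minimal sketch, not the paper's implementation: it assumes 1D poses, linear relative measurements, and Gaussian noise, in which case MAP estimation reduces to weighted linear least squares. The function name `solve_pose_graph_1d`, the factor tuple layout `(i, j, z, sigma)`, and all measurement values are hypothetical choices made for illustration.

```python
import numpy as np

def solve_pose_graph_1d(num_poses, factors, prior_sigma=1e-3):
    """MAP estimate of 1D poses under Gaussian noise (linear least squares).

    factors: list of (i, j, z, sigma), meaning x_j - x_i = z + N(0, sigma^2).
    A tight prior pins x_0 at 0 so the solution is unique.
    """
    rows, rhs = [], []
    # Prior factor on the first pose.
    row = np.zeros(num_poses)
    row[0] = 1.0 / prior_sigma
    rows.append(row)
    rhs.append(0.0)
    # Each relative measurement contributes one whitened residual row.
    for i, j, z, sigma in factors:
        row = np.zeros(num_poses)
        row[i] = -1.0 / sigma
        row[j] = 1.0 / sigma
        rows.append(row)
        rhs.append(z / sigma)
    A = np.vstack(rows)
    b = np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

# Hypothetical data: the robot drives 0 -> 1 -> 2 -> 1 -> 0, but its
# odometry is biased, so dead reckoning does not return to the start.
odometry = [(0, 1, 1.1, 0.1), (1, 2, 1.1, 0.1),
            (2, 3, -0.9, 0.1), (3, 4, -0.9, 0.1)]
# An accurate loop closure states that pose 4 coincides with pose 0.
loop_closure = [(0, 4, 0.0, 0.01)]

dead_reckoning = np.concatenate([[0.0], np.cumsum([z for _, _, z, _ in odometry])])
x_odometry_only = solve_pose_graph_1d(5, odometry)
x_slam = solve_pose_graph_1d(5, odometry + loop_closure)

print("odometry only:", np.round(x_odometry_only, 3))
print("with loop closure:", np.round(x_slam, 3))
```

With odometry alone, the estimate simply accumulates the biased increments. Adding the loop closure factor pulls the final pose back toward the start and spreads the correction over the whole trajectory. Real back-ends work with nonlinear measurement models on 2D or 3D poses and exploit sparse solvers; the dense `np.linalg.lstsq` call here is only suitable for a toy example.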

Figure 1: Left: map built from odometry. Right: map built from SLAM, illustrating the discovery of shortcuts through loop closures.

Challenges and Innovations in SLAM

Robustness and Scalability

Two critical challenges in SLAM are robustness, especially in dynamic environments, and scalability for long-term autonomy. Robustness failures often stem from incorrect data association caused by perceptual aliasing, in which different places produce similar sensor signatures. The paper surveys research on both sides of the pipeline: the front-end, for reliable feature tracking and loop closure detection, and the back-end, for estimators that remain resilient to outliers.
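To make the back-end side concrete, here is a hedged sketch of one standard outlier-resilient technique: iteratively reweighted least squares (IRLS) with a Huber loss. It is a generic robust-estimation example, not a method attributed to the paper. The 1D pose graph, the helper names (`build_system`, `huber_weights`, `solve_irls`), the threshold `k=1.345`, and the measurement values are all assumptions made for illustration.

```python
import numpy as np

def build_system(num_poses, factors, prior_sigma=1e-3):
    """Stack whitened residual rows for factors (i, j, z, sigma): x_j - x_i = z."""
    rows, rhs = [], []
    row = np.zeros(num_poses)
    row[0] = 1.0 / prior_sigma          # prior pinning x_0 at 0
    rows.append(row)
    rhs.append(0.0)
    for i, j, z, sigma in factors:
        row = np.zeros(num_poses)
        row[i], row[j] = -1.0 / sigma, 1.0 / sigma
        rows.append(row)
        rhs.append(z / sigma)
    return np.vstack(rows), np.array(rhs)

def huber_weights(residuals, k=1.345):
    """IRLS weights for the Huber loss: 1 inside the threshold, k/|r| outside."""
    a = np.abs(residuals)
    return np.where(a <= k, 1.0, k / np.maximum(a, 1e-12))

def solve_irls(A, b, iters=20, k=1.345):
    """Repeatedly solve a weighted least-squares problem, re-deriving weights."""
    w = np.ones(len(b))
    for _ in range(iters):
        s = np.sqrt(w)
        x, *_ = np.linalg.lstsq(A * s[:, None], b * s, rcond=None)
        w = huber_weights(A @ x - b, k)
    return x, w

# Hypothetical trajectory 0 -> 1 -> 2 -> 1 -> 0 with accurate odometry,
# one correct loop closure, and one false loop closure (perceptual aliasing:
# pose 2 is wrongly matched to pose 0, although they are 2 units apart).
factors = [
    (0, 1, 1.0, 0.1), (1, 2, 1.0, 0.1), (2, 3, -1.0, 0.1), (3, 4, -1.0, 0.1),
    (0, 4, 0.0, 0.1),   # correct loop closure
    (0, 2, 0.0, 0.1),   # false loop closure (outlier), listed last
]
A, b = build_system(5, factors)

x_ls, *_ = np.linalg.lstsq(A, b, rcond=None)   # plain least squares
x_robust, weights = solve_irls(A, b)           # Huber IRLS

print("least squares x_2:", round(float(x_ls[2]), 3))
print("Huber IRLS    x_2:", round(float(x_robust[2]), 3))
print("outlier weight   :", round(float(weights[-1]), 3))
```

In this setup the plain least-squares estimate of pose 2 is dragged far from its true value of 2, while the Huber estimate stays much closer because the false loop closure receives a small weight. The Huber loss bounds the influence of an outlier rather than removing it, so a residual bias remains; approaches that reject or switch off suspicious constraints entirely behave differently.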

Scalability remains a pivotal challenge as robots operate for extended periods in large environments, because the factor graph keeps growing. The paper discusses node and edge sparsification, out-of-core processing, and distributed multi-robot SLAM, which aim to bound computational cost by discarding redundant information or by spreading the work across processors and robots.
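One building block commonly used when removing nodes from a graph is marginalization via the Schur complement. The sketch below is an illustrative assumption, not a specific sparsification method from the paper: it uses a linear 1D pose graph in information form, and the helper names `information_form` and `marginalize` as well as the measurement values are hypothetical. It shows that removing a node preserves the estimate of the remaining nodes while creating a new link (fill-in) between the removed node's neighbors.

```python
import numpy as np

def information_form(num_poses, factors, prior_sigma=0.01):
    """Accumulate the information matrix and vector of a linear 1D pose graph.

    factors: list of (i, j, z, sigma), meaning x_j - x_i = z + N(0, sigma^2).
    """
    info = np.zeros((num_poses, num_poses))
    eta = np.zeros(num_poses)
    info[0, 0] += 1.0 / prior_sigma**2       # prior anchoring x_0 at 0
    for i, j, z, sigma in factors:
        w = 1.0 / sigma**2
        # The residual (x_j - x_i - z) has Jacobian [-1, +1] on (x_i, x_j).
        info[i, i] += w
        info[j, j] += w
        info[i, j] -= w
        info[j, i] -= w
        eta[i] -= w * z
        eta[j] += w * z
    return info, eta

def marginalize(info, eta, remove):
    """Remove the nodes in `remove` with a Schur complement."""
    keep = [n for n in range(len(eta)) if n not in remove]
    L_kk = info[np.ix_(keep, keep)]
    L_km = info[np.ix_(keep, remove)]
    L_mm = info[np.ix_(remove, remove)]
    gain = L_km @ np.linalg.inv(L_mm)
    return L_kk - gain @ L_km.T, eta[keep] - gain @ eta[remove], keep

# Hypothetical chain of five poses plus one long-range relative measurement.
factors = [(0, 1, 1.0, 0.1), (1, 2, 1.1, 0.1), (2, 3, 0.9, 0.1),
           (3, 4, 1.0, 0.1), (0, 4, 4.2, 0.2)]
info, eta = information_form(5, factors)
x_full = np.linalg.solve(info, eta)

# Marginalize pose 2; poses 0, 1, 3, 4 remain.
info_r, eta_r, keep = marginalize(info, eta, remove=[2])
x_reduced = np.linalg.solve(info_r, eta_r)

print("kept nodes:", keep)
print("same estimate:", np.allclose(x_reduced, x_full[keep]))
print("fill-in between poses 1 and 3:", info_r[1, 2] != 0.0)
```

The fill-in is the reason node removal alone is not enough at scale: marginalizing a well-connected node densifies the graph among its neighbors, which is what motivates following it with edge sparsification.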

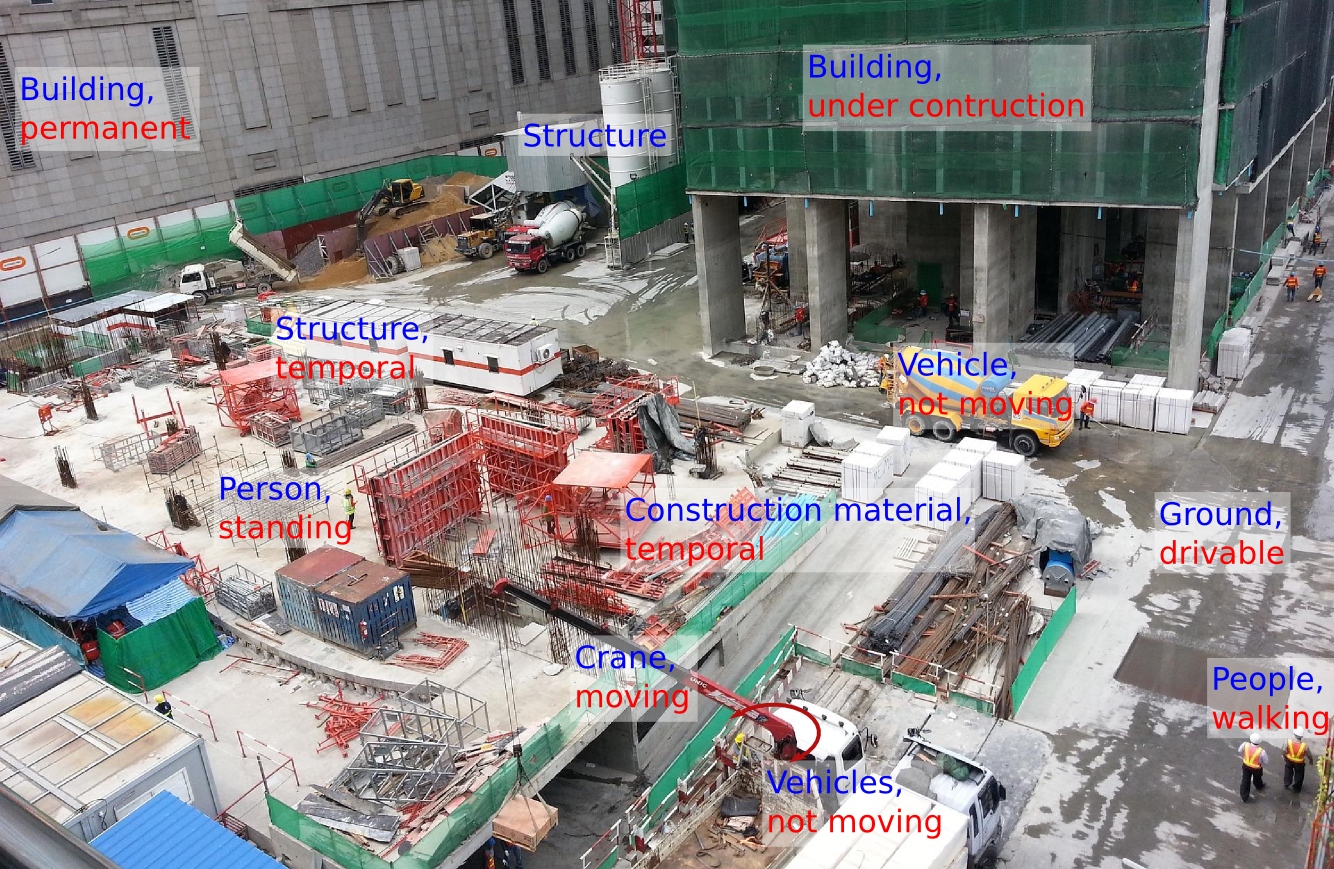

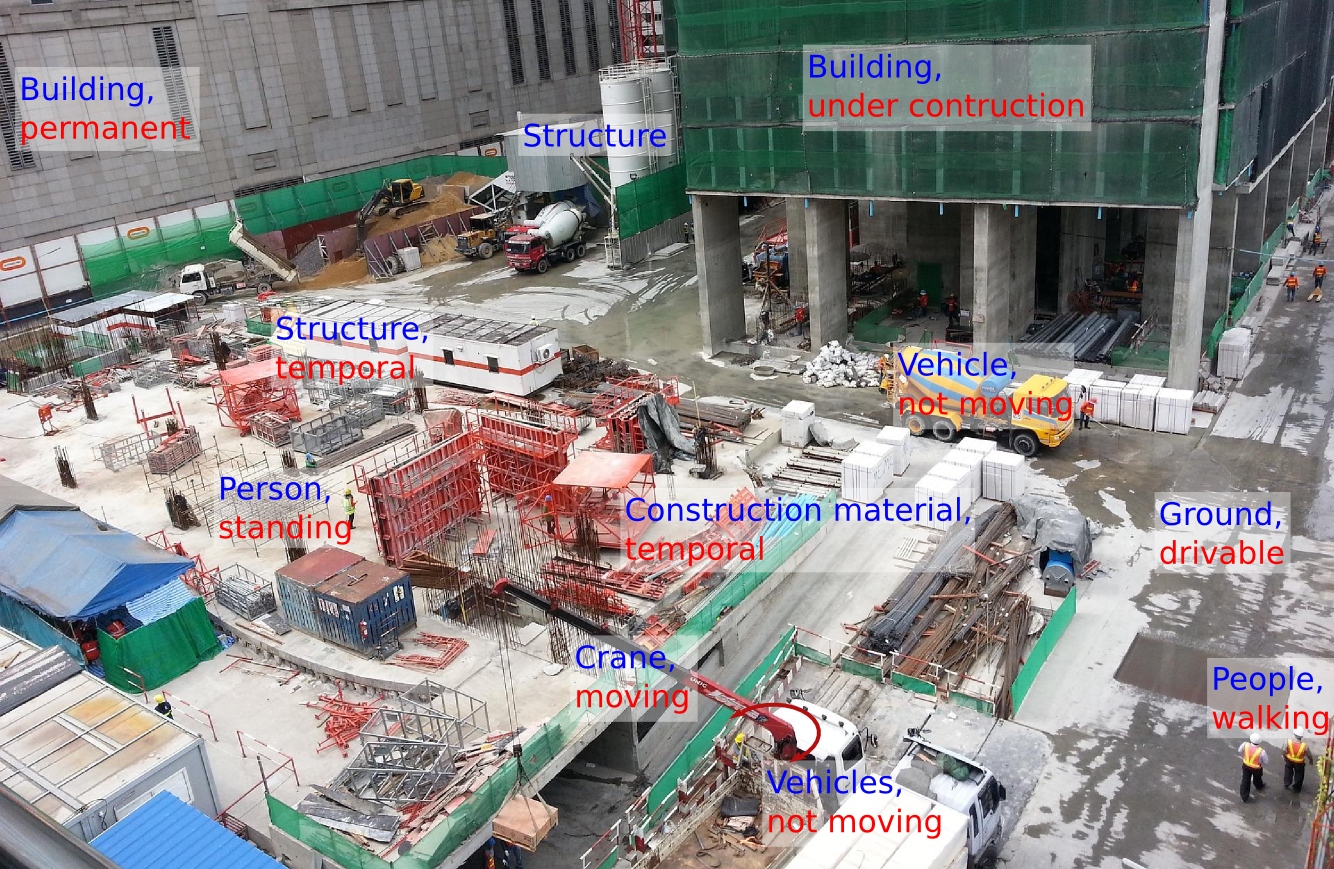

The representation of metric information has evolved from sparse feature-based maps to dense direct methods that utilize all available pixel information, enhancing robustness in texture-poor environments. The paper argues for the integration of semantic information into SLAM to provide higher-level environmental understanding, which remains an open research problem.

Figure 2: Feature-based map of a room produced by ORB-SLAM.

Theoretical Advances and New Frontiers

Theoretical analysis of SLAM covers the stability and convergence of estimators and the impact of measurement noise, with an emerging focus on global optimization methods such as convex relaxation. These methods are promising for fail-safe systems because they may offer guarantees even in the presence of spurious measurements.

The paper also explores new sensing technologies and computational tools that could reshape SLAM. Novel sensors such as event-based and light-field cameras may perform better under extreme conditions. Deep learning is emerging as a tool for semantic understanding and other perceptual tasks within SLAM, though real-time deployment and online learning remain challenging.

Figure 3: Example of semantic reasoning challenges in dynamic environments.

Conclusion

SLAM research is heading toward the robust-perception age, characterized by systems that operate reliably in dynamic and unstructured environments, build a higher-level understanding of their surroundings, and remain aware of their resources. Such intelligent, adaptable SLAM systems are crucial for future autonomous robotics applications, and the paper's account of open problems and emerging technologies outlines where further work is needed.