An Analysis of Human Attention in Visual Question Answering: Alignment with Deep Learning Models

The paper "Human Attention in Visual Question Answering: Do Humans and Deep Networks Look at the Same Regions?" investigates whether the attention mechanisms of deep neural networks attend to the same image regions as humans in Visual Question Answering (VQA). The authors introduce the VQA-HAT dataset, a collection of human attention maps that mark the image regions people focus on to answer a specific question. The central measurement is the correlation between human and model-generated attention maps, which the authors use to assess whether current attention-based VQA models look where humans look.

Methodology and Dataset

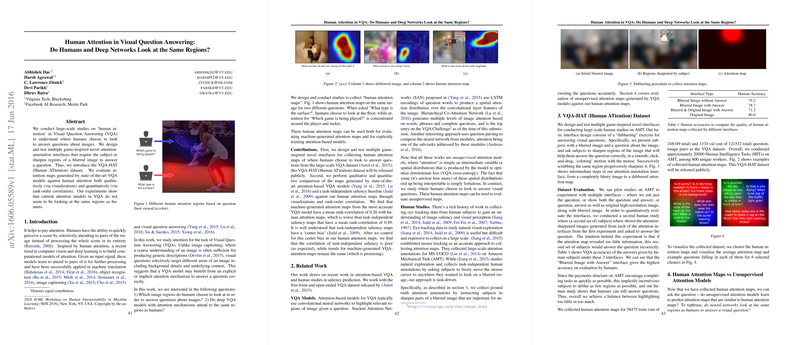

The researchers built new interfaces for gathering attention data based on deblurring: annotators are shown a blurred image and must selectively sharpen the parts they need in order to answer the question. The sharpened regions serve as "human attention maps," and the approach scales to a large number of image-question pairs. The result is the VQA-HAT dataset, which provides human attention maps for 58,475 training and 1,374 validation pairs drawn from the larger VQA dataset.

Results and Observations

The evaluation of existing attention-based models, such as the Stacked Attention Network (SAN) and the Hierarchical Co-Attention Network (HieCoAtt), shows that these models align only weakly with human attention patterns. In the rank-order correlation analyses, machine-generated attention maps correlate less with human attention maps than task-independent saliency maps do, that is, maps of where people look when exploring an image naturally without a question in mind. The mean rank-correlation of machine-generated maps was around 0.26, whereas task-independent saliency maps achieved 0.49.

Perhaps more significantly, even the most accurate VQA models evaluated do not appear to focus on the image regions that humans rely on to answer visual questions. There is, however, a mild positive trend: as VQA models improve in accuracy, their alignment with human attention increases slightly, with mean rank-correlation rising from 0.249 to 0.264.
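The rank-order correlation used in these comparisons can be illustrated with a short sketch. It assumes both attention maps have already been resized to the same grid (the resolution is not stated here and is left to the caller) and computes a Spearman correlation over the flattened cells. The function names and the toy maps are hypothetical and are not taken from the paper.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("rank correlation is undefined for a constant map")
    return cov / sqrt(var_x * var_y)


def rank_correlation(map_a, map_b):
    """Spearman rank correlation between two equally sized 2D attention maps."""
    flat_a = [v for row in map_a for v in row]
    flat_b = [v for row in map_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("attention maps must have the same number of cells")
    # Spearman correlation is the Pearson correlation of the ranks.
    return pearson(average_ranks(flat_a), average_ranks(flat_b))


# Toy 3x3 maps (illustrative values only, not data from VQA-HAT).
human_map = [[0.0, 0.1, 0.0],
             [0.2, 0.9, 0.3],
             [0.0, 0.1, 0.0]]
model_map = [[0.3, 0.1, 0.0],
             [0.1, 0.4, 0.1],
             [0.0, 0.0, 0.0]]

rho = rank_correlation(human_map, model_map)
```

A dataset-level score such as the mean rank-correlation reported above would then be the average of `rank_correlation` over all image-question pairs that have both a human map and a model map.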

Practical and Theoretical Implications

From a practical standpoint, the VQA-HAT dataset provides a benchmark for evaluating how closely VQA models align with human attention, and a source of supervision for training future models to align more closely. Models that attend in a more human-like way could potentially achieve higher accuracy and interpretability. Theoretically, the research raises questions about what an attention map should capture, specifically the difference between the visual information that is necessary and the information that is sufficient to answer a question. The authors propose investigating semantic spaces in which these concepts can be meaningfully explored.

Future Developments

Looking ahead, further research could refine attention mechanisms within neural networks so that they mirror human behavior more accurately, which may in turn improve model performance. Identifying minimal semantic units of attention, and their relevance to answering visual questions, is another open direction. The VQA-HAT dataset also makes supervised attention training possible, in which human attention maps are used as an explicit training signal for a model's attention.
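One way supervised attention training could be set up is to penalize the divergence between a model's attention distribution and the human attention map for the same image-question pair. The sketch below uses a KL divergence for that penalty. This choice is an assumption made for illustration, not a method proposed in the paper, and the function names are hypothetical.

```python
from math import log


def normalize(weights, eps=1e-8):
    """Turn non-negative weights into a probability distribution.

    A small eps is added to every cell so that no probability is exactly
    zero, which keeps the logarithm below well defined.
    """
    shifted = [w + eps for w in weights]
    total = sum(shifted)
    return [w / total for w in shifted]


def attention_kl(human_map, model_map):
    """KL(human || model) over two equally sized 2D attention maps."""
    flat_h = [v for row in human_map for v in row]
    flat_m = [v for row in model_map for v in row]
    if len(flat_h) != len(flat_m):
        raise ValueError("attention maps must have the same number of cells")
    p = normalize(flat_h)
    q = normalize(flat_m)
    return sum(pi * log(pi / qi) for pi, qi in zip(p, q))


# Toy 2x2 maps (illustrative values only).
human = [[0.0, 1.0],
         [0.0, 0.0]]
close_model = [[0.1, 0.8],
               [0.05, 0.05]]
far_model = [[0.8, 0.05],
             [0.1, 0.05]]

loss_close = attention_kl(human, close_model)
loss_far = attention_kl(human, far_model)
```

In an actual training setup this term would be computed in a differentiable framework and added, with some weight, to the usual answer-prediction loss. The plain-Python version here only shows the quantity being minimized.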

In conclusion, the state-of-the-art VQA models assessed in this paper align only weakly with human attention, and this gap offers fertile ground for advances in model design and application. The work is a foundational step toward VQA models whose attention is more closely grounded in how humans look at images.