Learning Deep Structure-Preserving Image-Text Embeddings

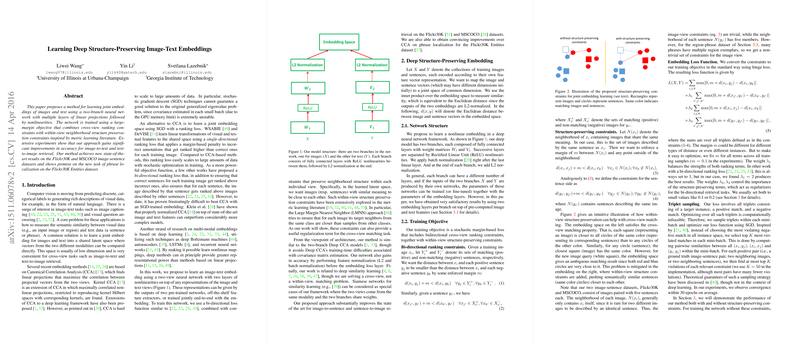

The paper "Learning Deep Structure-Preserving Image-Text Embeddings" presents a method for learning joint embeddings of images and text. The task belongs to multimodal learning, where the goal is to map data from different domains (e.g., images and text) into a common latent space in which semantic similarity can be measured directly. The authors introduce a two-branch neural network and a training objective that combines bi-directional ranking constraints with within-view structure-preservation constraints drawn from the metric learning literature.

Network Structure and Training Objective

Each branch of the two-branch network consists of several layers of linear projections with ReLU nonlinearities and ends with L2 normalization. One branch processes images and the other processes text, and both map their inputs into a shared latent space. The input representations can either be pre-computed by off-the-shelf feature extractors or learned jointly, end-to-end, with the embeddings.
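The forward pass of such a network can be sketched in a few lines. This is a minimal, dependency-free illustration rather than the authors' implementation: the layer sizes, the random initialization, the choice to apply ReLU between layers but not after the last one, and the helper names (`init_branch`, `embed`) are all assumptions made for the example.

```python
import math
import random

def linear(x, weights, bias):
    """Affine map; `weights` holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def l2_normalize(x, eps=1e-12):
    norm = math.sqrt(sum(v * v for v in x))
    return [v / (norm + eps) for v in x]

def init_layer(rng, n_in, n_out):
    # Small random weights and zero biases (illustrative initialization).
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def init_branch(rng, sizes):
    """sizes = [input_dim, hidden_dim, ..., embed_dim]."""
    return [init_layer(rng, a, b) for a, b in zip(sizes, sizes[1:])]

def embed(branch, x):
    """Linear layers with ReLU in between, then L2 normalization."""
    for i, (weights, bias) in enumerate(branch):
        x = linear(x, weights, bias)
        if i < len(branch) - 1:  # assumption: no ReLU after the last layer
            x = relu(x)
    return l2_normalize(x)

rng = random.Random(0)
# Hypothetical sizes: 8-d image features and 5-d text features, 4-d shared space.
image_branch = init_branch(rng, [8, 16, 4])
text_branch = init_branch(rng, [5, 16, 4])

image_feature = [rng.random() for _ in range(8)]
text_feature = [rng.random() for _ in range(5)]
u = embed(image_branch, image_feature)
v = embed(text_branch, text_feature)

# Both outputs live in the same 4-d space, so they can be compared directly.
similarity = sum(a * b for a, b in zip(u, v))
```

Because both outputs are L2-normalized, the dot product equals the cosine similarity, and Euclidean distance between embeddings is a monotone function of it.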

The training objective employs:

- Bi-directional ranking constraints: These ensure that correct image-text pairs are ranked higher than incorrect ones for both directions (image-to-text and text-to-image).

- Within-view structure-preserving constraints: Inspired by methods such as Large Margin Nearest Neighbor (LMNN), these constraints aim to preserve the neighborhood structure within each modality. The central idea is to ensure that similar instances (e.g., images visually close to each other or text sentences with similar semantics) remain close in the latent space.
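The two kinds of constraints above can be written as sums of triplet hinge terms. The sketch below is one plausible reading, not the paper's exact formulation: it assumes one matching text per image (paired by index), Euclidean distance in the shared space, and a fixed margin. The weights `w_t2i`, `w_img`, and `w_txt`, the neighbor sets, and all function names are hypothetical.

```python
import math

def hinge(value):
    return max(0.0, value)

def ranking_loss(anchors, candidates, margin):
    """Cross-view term: candidates[i] is the correct match for anchors[i].

    Every non-matching candidate should be farther from the anchor than
    the match, by at least `margin`.
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        pos = math.dist(anchor, candidates[i])
        for k, cand in enumerate(candidates):
            if k != i:
                total += hinge(margin + pos - math.dist(anchor, cand))
    return total

def structure_loss(points, neighbors, margin):
    """Within-view term: neighbors[i] is the set of indices that should
    stay closer to points[i] than any non-neighbor, by at least `margin`."""
    total = 0.0
    for i, anchor in enumerate(points):
        for j in neighbors[i]:
            pos = math.dist(anchor, points[j])
            for k in range(len(points)):
                if k != i and k not in neighbors[i]:
                    total += hinge(margin + pos - math.dist(anchor, points[k]))
    return total

def total_loss(img, txt, img_nbrs, txt_nbrs,
               margin=0.1, w_t2i=1.0, w_img=0.1, w_txt=0.1):
    """Bi-directional ranking plus weighted structure terms (weights assumed)."""
    return (ranking_loss(img, txt, margin)              # image-to-text
            + w_t2i * ranking_loss(txt, img, margin)    # text-to-image
            + w_img * structure_loss(img, img_nbrs, margin)
            + w_txt * structure_loss(txt, txt_nbrs, margin))

# Toy embeddings: two image-text pairs that are already perfectly aligned.
img = [[1.0, 0.0], [0.0, 1.0]]
txt = [[1.0, 0.0], [0.0, 1.0]]
no_neighbors = [set(), set()]
aligned = total_loss(img, txt, no_neighbors, no_neighbors)

# Swapping the texts violates every ranking constraint, so the loss grows.
swapped = total_loss(img, [txt[1], txt[0]], no_neighbors, no_neighbors)
```

In this toy case `aligned` is zero because every match is closer than every non-match by more than the margin, while `swapped` is positive. In practice the triplets would be sampled within mini-batches rather than enumerated exhaustively.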

Experimental Results

The proposed method demonstrates strong performance in multiple experiments:

- Image-to-Sentence and Sentence-to-Image Retrieval: Evaluated on the Flickr30K and MSCOCO datasets, the method achieved state-of-the-art results at the time of publication, outperforming previous approaches, including deep models and traditional methods based on Canonical Correlation Analysis (CCA).

- Phrase Localization: The paper also tackles the task of phrase localization on the Flickr30K Entities dataset. When coupled with region proposals generated by EdgeBox, the method showed significant improvements, particularly when negative mining was used to refine the embeddings.
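Cross-modal retrieval of the kind described in the first item is commonly scored with Recall@K: the fraction of queries whose correct match appears among the K nearest items of the other modality. The sketch below assumes one correct match per query (paired by index) and Euclidean distance; the function name and the toy data are illustrative only.

```python
import math

def recall_at_k(queries, gallery, k):
    """Fraction of queries whose match (same index) is among the
    k nearest gallery items under Euclidean distance."""
    hits = 0
    for i, q in enumerate(queries):
        ranked = sorted(range(len(gallery)), key=lambda j: math.dist(q, gallery[j]))
        if i in ranked[:k]:
            hits += 1
    return hits / len(queries)

# Toy embeddings: query 0 sits next to its match, while the matches
# for queries 1 and 2 have been swapped in the gallery.
queries = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
gallery = [[0.1, 0.0], [2.0, 2.1], [1.0, 1.1]]

r1 = recall_at_k(queries, gallery, 1)
r2 = recall_at_k(queries, gallery, 2)
r3 = recall_at_k(queries, gallery, 3)
```

Running the same function with the roles of `queries` and `gallery` exchanged gives the score for the opposite retrieval direction.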

Insights and Future Directions

The experiments support three main observations about the method's design:

- Bi-directional constraints consistently improved retrieval results, especially in sentence-to-image tasks.

- Nonlinear mappings showed clear advantages over linear ones, suggesting that complex relationships between modalities are better captured by deeper architectures.

- The incorporation of within-view structure preservation further boosted performance, underlining the importance of maintaining the intrinsic structure of each modality during the embedding process.

These results matter in practice because applications such as image captioning and visual question answering depend on effective multimodal representations. Because the structure-preserving constraints are grounded in metric learning, they also point to a direction for future work: deeper integration with other metric learning techniques.

Conclusion

This paper presents an approach to learning joint image-text embeddings that combines a bi-directional ranking loss with structure-preserving constraints. Its strong performance on retrieval and phrase localization supports its value for both practical applications and further theoretical work in multimodal learning. Future research could extend these ideas to other data modalities and learning paradigms.