Overview of "Reading Scene Text in Deep Convolutional Sequences"

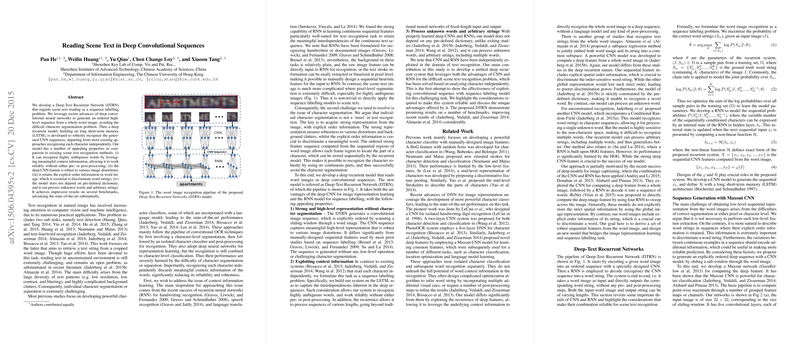

The paper "Reading Scene Text in Deep Convolutional Sequences" presents the Deep-Text Recurrent Network (DTRN), which treats scene text recognition as a sequence labelling problem. It combines a convolutional neural network (CNN), which turns a whole word image into an ordered sequence of features, with a recurrent neural network (RNN) that reads that sequence. As a result, the model never has to segment the word into individual characters.

The main contribution is a departure from existing methods, most of which treat text recognition as a series of independent character classifications. DTRN instead uses a deep CNN to generate an ordered, high-level feature sequence from the entire word image. It then processes that sequence with an RNN built on Long Short-Term Memory (LSTM) units, so each character is recognized in the context of its neighbours. Because the sequence follows the left-to-right layout of the word image, the model retains the explicit order information needed to tell one word string from another.
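The CNN-to-LSTM pipeline described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. The window width, stride, and feature size are arbitrary. `toy_cnn_features` is a hypothetical stand-in (a fixed random projection) for the trained CNN. The recurrent part is written as a single-layer bidirectional LSTM with random weights, chosen here only to illustrate reading the sequence with context from both sides. The sketch shows how a whole word image becomes an ordered sequence of feature vectors without any character boundaries being located.

```python
import numpy as np


def sliding_windows(image: np.ndarray, width: int = 32, stride: int = 4) -> np.ndarray:
    """Cut an (H, W) word image into an ordered stack of (H, width) windows, left to right."""
    _, w = image.shape
    starts = range(0, w - width + 1, stride)
    return np.stack([image[:, s:s + width] for s in starts])  # (T, H, width)


def toy_cnn_features(windows: np.ndarray, dim: int = 64, seed: int = 1) -> np.ndarray:
    """Hypothetical stand-in for the trained CNN: a fixed random projection followed by ReLU."""
    flat = windows.reshape(windows.shape[0], -1)
    proj = np.random.default_rng(seed).standard_normal((flat.shape[1], dim))
    return np.maximum(flat @ proj / np.sqrt(flat.shape[1]), 0.0)  # (T, dim)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def lstm_pass(seq, W, U, b):
    """Run one LSTM over seq (T, D); gate blocks are ordered [input, forget, output, candidate]."""
    n = U.shape[0]
    h, c = np.zeros(n), np.zeros(n)
    outputs = []
    for x in seq:
        z = x @ W + h @ U + b  # gate pre-activations, shape (4n,)
        i, f, o = sigmoid(z[:n]), sigmoid(z[n:2 * n]), sigmoid(z[2 * n:3 * n])
        g = np.tanh(z[3 * n:])
        c = f * c + i * g  # memory cell carries information along the sequence
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)  # (T, n)


def bidirectional_lstm(seq: np.ndarray, hidden: int = 32, seed: int = 2) -> np.ndarray:
    """Read the sequence left-to-right and right-to-left, then concatenate per step."""
    rng = np.random.default_rng(seed)
    d = seq.shape[1]
    fwd_params, bwd_params = [
        (0.1 * rng.standard_normal((d, 4 * hidden)),
         0.1 * rng.standard_normal((hidden, 4 * hidden)),
         np.zeros(4 * hidden))
        for _ in range(2)
    ]
    fwd = lstm_pass(seq, *fwd_params)
    bwd = lstm_pass(seq[::-1], *bwd_params)[::-1]  # flip back so step t aligns with fwd
    return np.concatenate([fwd, bwd], axis=1)  # (T, 2 * hidden)


word_image = np.random.default_rng(0).random((32, 100))  # hypothetical 32x100 grayscale crop
windows = sliding_windows(word_image)   # ordered windows, no character boundaries needed
feats = toy_cnn_features(windows)       # one feature vector per window
context = bidirectional_lstm(feats)     # each step now sees both left and right context
print(windows.shape, feats.shape, context.shape)  # (18, 32, 32) (18, 64) (18, 64)
```

The key design point is that the sequence length follows the image width rather than the number of characters. Deciding which steps belong to which character is therefore left to the sequence model and its output layer.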

Key Features and Findings

- Avoidance of Character Segmentation: Because DTRN reads the word image as an ordered sequence rather than as isolated characters, it skips the difficult character segmentation step. Together with deep CNN features, which are robust to many image distortions, this makes the model resilient to the distortions and background noise common in natural scenes.

- Contextual Recognition: Unlike systems that recognize each character independently, DTRN draws on context from the surrounding sequence. This lets it resolve highly ambiguous characters and words without pre- or post-processing.

- Flexibility for Unknown Words: The system does not rely on a predefined dictionary, providing the flexibility to recognize unknown words and arbitrary strings, which is crucial for handling real-world scenes with novel and varied text.

- Benchmark Performance: DTRN shows substantial improvements over prior methods and advances the state of the art on the SVT, ICDAR 2003, and IIIT 5K-word benchmarks. These gains are attributed to coupling CNN-generated feature sequences with LSTM-based sequence labelling.
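To see how a sequence model can emit a word without segmenting characters or consulting a lexicon, consider connectionist temporal classification (CTC) best-path decoding. This output step is commonly paired with LSTM sequence labelling. The sketch below assumes a CTC-style output layer that produces a per-frame probability matrix over an alphabet plus a blank symbol. The alphabet, the blank index, and the `one_hot_frames` helper are hypothetical, chosen only for illustration. Decoding takes the most likely label at each frame, merges consecutive repeats, and drops blanks. Any string over the alphabet can come out, including words that appear in no dictionary.

```python
import numpy as np

BLANK = 0
ALPHABET = "-abcdefghijklmnopqrstuvwxyz0123456789"  # hypothetical; index 0 ('-') is the blank


def ctc_best_path(probs: np.ndarray, alphabet: str = ALPHABET, blank: int = BLANK) -> str:
    """Greedy CTC decoding: argmax per frame, collapse repeats, then drop blanks."""
    best = probs.argmax(axis=1)  # most likely label index for each frame
    decoded = []
    prev = None
    for idx in best:
        # Emit a symbol only when it changes and is not the blank.
        if idx != prev and idx != blank:
            decoded.append(alphabet[idx])
        prev = idx
    return "".join(decoded)


def one_hot_frames(labels: str, alphabet: str = ALPHABET) -> np.ndarray:
    """Hypothetical helper: a toy (T, C) matrix putting 0.9 on each frame's label."""
    c = len(alphabet)
    probs = np.full((len(labels), c), 0.1 / (c - 1))
    for t, ch in enumerate(labels):
        probs[t, alphabet.index(ch)] = 0.9
    return probs


print(ctc_best_path(one_hot_frames("hh-e-ll-lloo")))  # hello
print(ctc_best_path(one_hot_frames("xx77-qq")))       # x7q
```

The blank between the two `ll` runs is what lets a genuine double letter survive the repeat-merging step. The second call shows that a non-dictionary string such as `x7q` decodes just as easily as an English word.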

Implications

DTRN has significant implications for both practical applications and research. Practically, the model offers a robust solution for the diverse and challenging text encountered in applications such as augmented reality, autonomous driving, and mobile document scanning. For research, the work shows how sequence labelling can be combined with deep learning to tackle complex recognition tasks, which may inspire further work on multi-modal sequence learning.

Future Directions

The research opens several avenues for future exploration:

- Enhanced Sequential Architectures: Exploring deeper or differently configured sequential architectures could improve performance, particularly on multi-scale or multilingual text.

- Integration with Other Modalities: Future work could also integrate text sequence models with additional modalities such as speech or scene context information to create more comprehensive and versatile recognition systems.

- Scalability to Larger Datasets: Training the model on much larger datasets, potentially covering a broader range of real-world applications, remains an area for future development.

In conclusion, the DTRN framework presents a significant step forward in the field of scene text recognition, leveraging deep learning's strengths to overcome traditional challenges and set a foundation for continued advancements in this domain.