Overview of Visual Question Answering (VQA) Research

The paper "VQA: Visual Question Answering" introduces the task of Visual Question Answering (VQA) and presents a comprehensive study of it. This research, authored by Aishwarya Agrawal, Jiasen Lu, Stanislaw Antol, Margaret Mitchell, C. Lawrence Zitnick, Dhruv Batra, and Devi Parikh, aims to bridge the gap between Computer Vision (CV) and Natural Language Processing (NLP) by exploring a challenging yet quantifiable AI problem. Given an image and an open-ended natural language question about it, a system must produce an accurate natural language answer, which pushes the boundaries of multi-modal AI systems.

Dataset Composition and Collection

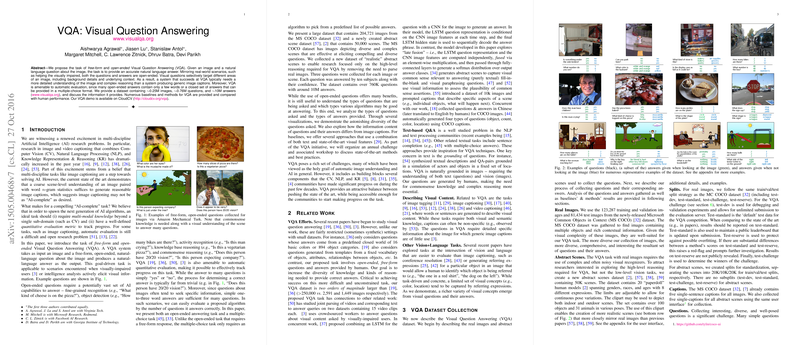

The researchers constructed a large dataset to support the VQA task, containing approximately 0.25 million images, 0.76 million questions, and 10 million answers. The images come from two sources: the MS COCO dataset and a specially created abstract scene dataset. MS COCO offers real-world complexity with multiple objects and rich context, while the abstract scenes allow the study of high-level reasoning without the noise of real-image recognition.

The dataset is meticulously curated to ensure a wide variety of questions that require diverse reasoning capabilities, such as object detection, fine-grained recognition, and commonsense knowledge. Each question is answered by ten unique workers, enhancing the validity of the dataset and providing a solid basis for evaluating VQA models.
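Having ten human answers per question makes a consensus-style score possible. The sketch below illustrates the accuracy measure commonly associated with the VQA benchmark, in which a predicted answer counts as fully correct when at least three annotators gave it. The function name `vqa_accuracy`, the toy answers, and the simplified normalization (lowercasing and trimming only) are assumptions made for illustration, not the official evaluation code.

```python
from collections import Counter
from typing import Sequence


def vqa_accuracy(predicted: str, human_answers: Sequence[str]) -> float:
    """Consensus accuracy: min(number of humans who gave this answer / 3, 1)."""

    def normalize(text: str) -> str:
        # Simplified normalization (assumption): lowercase and trim whitespace.
        return text.strip().lower()

    counts = Counter(normalize(answer) for answer in human_answers)
    return min(counts[normalize(predicted)] / 3.0, 1.0)


# Hypothetical answers from ten workers for a single question.
answers = ["yes"] * 6 + ["no"] * 2 + ["maybe", "Yes"]

print(vqa_accuracy("yes", answers))  # seven matching answers, so the score is capped at 1.0
print(vqa_accuracy("no", answers))   # two matching answers, so partial credit of 2/3
print(vqa_accuracy("dog", answers))  # no matching answers, so 0.0
```

Dividing by three rather than requiring a unanimous answer tolerates the natural disagreement among annotators while still rewarding answers that several people independently gave.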

Analysis and Baselines

The authors analyze the dataset in detail, breaking down the types of questions asked and the distribution of answers. They show that the image is needed to answer these questions accurately by comparing against human subjects who answered using only commonsense knowledge, without viewing the image.

Various baselines are established, including a random guess baseline, a prior-based baseline, and a nearest-neighbor approach. These baselines serve as starting points to measure the performance of more complex VQA models.
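A prior-based baseline can be sketched in a few lines: it ignores the image entirely and always predicts the most frequent training answer, either globally or per question type. The helper names and the toy training pairs below are hypothetical, and grouping by a question-type string is an assumption about how such a prior might be keyed.

```python
from collections import Counter, defaultdict


def fit_global_prior(train_pairs):
    """Return the single most frequent answer in the training set."""
    counts = Counter(answer for _, answer in train_pairs)
    return counts.most_common(1)[0][0]


def fit_per_type_prior(train_pairs):
    """Return the most frequent answer for each question type."""
    by_type = defaultdict(Counter)
    for question_type, answer in train_pairs:
        by_type[question_type][answer] += 1
    return {qtype: counts.most_common(1)[0][0] for qtype, counts in by_type.items()}


# Hypothetical (question type, answer) training pairs.
train = [
    ("is there", "yes"), ("is there", "yes"), ("is there", "yes"), ("is there", "no"),
    ("how many", "2"), ("how many", "2"), ("how many", "3"),
    ("what color", "white"),
]

global_prior = fit_global_prior(train)
per_type_prior = fit_per_type_prior(train)

print(global_prior)                # the answer predicted for every question
print(per_type_prior["how many"])  # the answer predicted for counting questions
```

Because neither predictor looks at the image, the accuracy of such baselines indicates how much of the task can be solved from answer statistics alone.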

Deep Learning Approaches

The paper evaluates several VQA models that differ in how they embed the question and in how they combine the question and image embeddings. Key approaches include:

- Bag-of-Words Question (BoW Q) + Image (I): Combining a simple bag-of-words representation of questions with image features.

- LSTM for Questions (LSTM Q) + Image (I): Using a single-layer Long Short-Term Memory (LSTM) network to obtain question embeddings, fused with image features.

- Deeper LSTM Q + Normalized Image Features (norm I): Employing a two-layer LSTM for questions with normalized image features from VGGNet. This configuration showed superior performance among the tested models.
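The general pipeline behind these configurations (embed the question, normalize the image features, fuse the two, score candidate answers) can be sketched in plain Python. This is a toy illustration under several assumptions: a bag-of-words vector stands in for the LSTM, a two-dimensional vector stands in for VGGNet features, all weights are made-up constants rather than learned parameters, and element-wise multiplication is used as one possible fusion operator. It is not the authors' implementation.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length (left unchanged if all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def bag_of_words(question, vocab):
    """Count how often each vocabulary word appears in the question."""
    tokens = question.lower().rstrip("?").split()
    return [float(tokens.count(word)) for word in vocab]


def dense_tanh(inputs, weights):
    """Fully connected layer followed by tanh; one weight row per output unit."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def fuse(image_emb, question_emb):
    """Element-wise product of two embeddings of equal length."""
    return [i * q for i, q in zip(image_emb, question_emb)]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical vocabulary, candidate answers, and fixed (untrained) weights.
VOCAB = ["what", "color", "is", "the", "dog"]
ANSWERS = ["white", "brown", "yes"]
W_IMAGE = [[0.5, -0.2], [0.1, 0.3]]
W_QUESTION = [[0.2, 0.1, 0.0, 0.0, 0.3], [-0.1, 0.4, 0.0, 0.0, 0.2]]
W_OUTPUT = [[1.0, 0.5], [-0.5, 1.0], [0.2, -0.3]]

image_features = l2_normalize([3.0, 4.0])  # stand-in for CNN features
question_vector = bag_of_words("What color is the dog?", VOCAB)

# Project both modalities into a shared 2-dimensional space, then fuse.
fused = fuse(dense_tanh(image_features, W_IMAGE),
             dense_tanh(question_vector, W_QUESTION))

# Linear scoring over the candidate answers, followed by softmax.
scores = [sum(w * f for w, f in zip(row, fused)) for row in W_OUTPUT]
probabilities = softmax(scores)

print(dict(zip(ANSWERS, probabilities)))
```

Treating VQA as classification over a fixed set of frequent answers keeps the output layer simple. Normalizing the image features keeps their scale comparable to the question embedding before the two are combined.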

Numerical Results and Comparative Analysis

The strongest model, "Deeper LSTM Q + norm I," improved clearly over the baselines, achieving 57.75% accuracy on the open-ended task. This is still notably behind human performance, which underlines the difficulty of VQA. The detailed analysis in the paper identifies particular strengths and weaknesses of the model: it performs better on visually straightforward questions and struggles with more nuanced queries that require detailed reasoning or whose answers are larger counts.

Implications and Future Directions

This research contributes substantially to the AI community by showing how a single multi-modal task can encapsulate several complex AI challenges. The carefully collected dataset and the baselines give future VQA research a common reference point. The findings encourage further work on model architectures, stronger image features, and a better understanding of language-vision interactions.

With an annual challenge and workshop set to promote advancements, future research could potentially explore tighter integrations of CV and NLP techniques, improved reasoning capabilities, and more sophisticated models that better emulate human visual understanding and question answering.

The implications of VQA extend to practical applications such as aiding the visually impaired, advanced human-computer interaction systems, and automated visual content analysis. As AI systems continue to evolve, the robustness and thoroughness of research like this will be pivotal in driving forward the state-of-the-art and achieving practical, deployable solutions.

Conclusion

The "VQA: Visual Question Answering" paper represents a significant milestone in AI research, laying the foundation for a challenging yet evaluable task that combines vision and natural language understanding. Through extensive dataset creation and thorough analysis, it provides the tools and benchmarks needed for further progress in multi-modal AI. Its insights both document what current models can do and point to clear directions for future work.