- The paper introduces GSFix3D, a novel approach that integrates diffusion-based repair with dual conditioning on mesh and 3DGS renderings to remove artifacts and inpaint missing regions.

- It employs a fine-tuned latent diffusion model with customized dual-input conditioning and random mask augmentation, achieving PSNR gains of up to 5 dB over DIFIX variants in challenging scenarios.

- The approach is efficient and robust, requiring minimal scene-specific fine-tuning while effectively handling real-world challenges such as pose errors and sensor noise.

Diffusion-Guided Repair of Novel Views in Gaussian Splatting: GSFix3D

Introduction and Motivation

GSFix3D addresses a persistent challenge in 3D Gaussian Splatting (3DGS): producing artifact-free, photorealistic renderings from novel viewpoints, especially in regions with sparse observations or incomplete geometry. 3DGS offers an explicit, differentiable scene representation and fast rendering. However, because it relies on dense input views, it produces visible artifacts (holes, floaters, and unnatural surfaces) when extrapolating to under-constrained regions. Diffusion models, notably latent-space denoising frameworks such as Stable Diffusion, have strong generative capabilities in 2D image synthesis. They lack, however, the spatial consistency and scene awareness that 3D reconstruction requires.

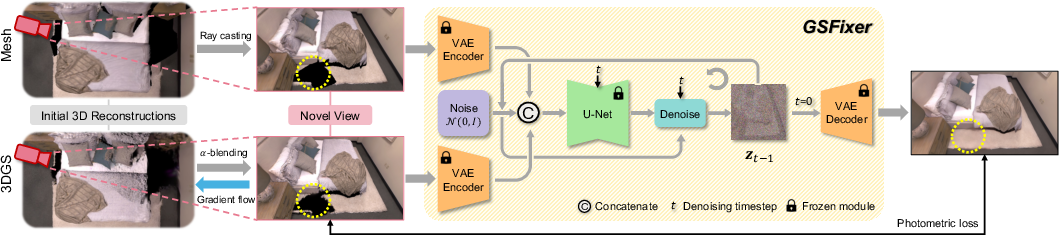

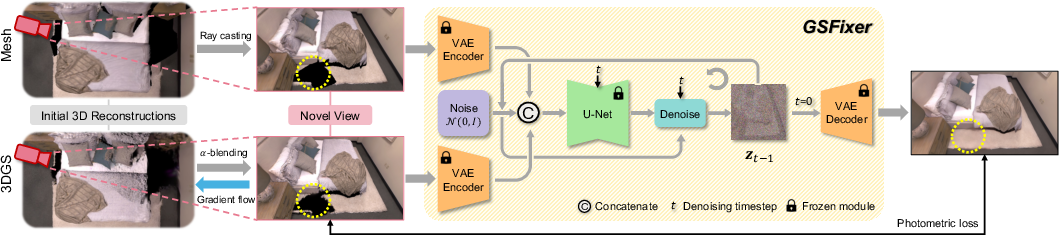

GSFix3D proposes a hybrid pipeline that leverages the generative priors of diffusion models to repair and inpaint novel views rendered from 3DGS and mesh reconstructions. The core innovation is the GSFixer module—a fine-tuned latent diffusion model conditioned on both mesh and 3DGS renderings—enabling robust removal of artifacts and plausible completion of missing regions. The repaired images are then distilled back into the 3DGS representation via photometric optimization, improving the underlying 3D scene fidelity.

Figure 1: System overview of the proposed GSFix3D framework for novel view repair. Given initial 3D reconstructions in the form of mesh and 3DGS, we render novel views and use them as conditional inputs to GSFixer. Through a reverse diffusion process, GSFixer generates repaired images with artifacts removed and missing regions inpainted. These outputs are then distilled back into 3D by optimizing the 3DGS representation using photometric loss.
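The overview above can be read as a single repair-and-distill round. The sketch below captures only that data flow. Every callable (`render_mesh`, `render_gs`, `gsfixer`, `optimize_gs`) is a hypothetical hook standing in for a mesh renderer, a 3DGS rasterizer, the fine-tuned diffusion model and a 3DGS optimizer. None of them is an API from the paper.

```python
from typing import Any, Callable, List, Sequence, Tuple

Pose = Any   # e.g. a 4x4 camera-to-world matrix (assumed representation)
Image = Any  # e.g. an HxWx3 float array (assumed representation)


def repair_and_distill(
    novel_poses: Sequence[Pose],
    train_views: List[Tuple[Pose, Image]],
    render_mesh: Callable[[Pose], Image],
    render_gs: Callable[[Pose], Image],
    gsfixer: Callable[[Image, Image], Image],
    optimize_gs: Callable[[List[Tuple[Pose, Image]]], Any],
):
    """One GSFix3D-style round: render, repair, then distill back into 3DGS.

    All callables are hypothetical hooks, not functions from the paper.
    """
    augmented = list(train_views)  # keep the original captured views
    for pose in novel_poses:
        i_mesh = render_mesh(pose)  # mesh rendering: coherent geometry
        i_gs = render_gs(pose)      # 3DGS rendering: photorealistic appearance
        # GSFixer maps the (mesh, 3DGS) pair to one repaired RGB image.
        augmented.append((pose, gsfixer(i_mesh, i_gs)))
    # Distillation: photometric optimization over original + repaired views.
    return optimize_gs(augmented)


result = repair_and_distill(
    ["p1", "p2"],
    [("p0", "gt0")],
    render_mesh=lambda p: f"mesh@{p}",
    render_gs=lambda p: f"gs@{p}",
    gsfixer=lambda a, b: f"fix({a},{b})",
    optimize_gs=lambda views: views,  # identity stand-in for the optimizer
)
```

The string stand-ins make the ordering visible. Each novel pose yields exactly one repaired view, and that view is appended to the original training views before optimization.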

Methodology

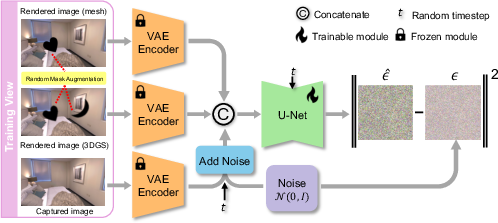

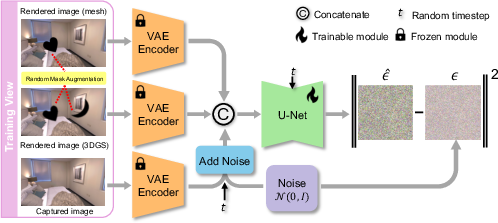

Customized Fine-Tuning Protocol

GSFixer is obtained by fine-tuning a pretrained latent diffusion model (Stable Diffusion v2) on scene-specific data. The conditional generation task is formulated as learning $p(I_{gt} \mid I_{mesh}, I_{gs})$. Here $I_{gt}$ is the ground-truth RGB image, $I_{mesh}$ is the mesh-rendered image, and $I_{gs}$ is the 3DGS-rendered image. The dual-conditioning strategy exploits the complementary strengths of mesh (coherent geometry) and 3DGS (photorealistic appearance), providing richer cues for artifact removal and inpainting.

The network architecture repurposes the U-Net backbone of the diffusion model, expanding the input layer to accommodate concatenated latent codes from both mesh and 3DGS renderings. Training follows the DDPM objective, minimizing the L2 loss between predicted and true noise in the latent space.
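A minimal NumPy sketch of one fine-tuning step under stated assumptions. The text says only that the input layer is expanded to accept concatenated latents and that training minimizes the noise-prediction L2 loss. Several details below are assumptions, not the paper's confirmed choices:

- the initialization, which tiles the pretrained first-layer weights and divides by the number of inputs, as in Marigold-style fine-tuning;
- the channel order [noisy target, mesh, 3DGS];
- Stable Diffusion's "scaled_linear" noise schedule.

`eps_model` is a hypothetical stand-in for the U-Net.

```python
import numpy as np

LATENT_CH = 4  # channels of a Stable Diffusion VAE latent


def expand_first_conv(w: np.ndarray, n_inputs: int = 3) -> np.ndarray:
    """Widen a pretrained conv weight (out, 4, kh, kw) to accept n_inputs
    concatenated latents. Assumed init: tile along the input-channel axis
    and divide by n_inputs."""
    return np.concatenate([w] * n_inputs, axis=1) / n_inputs


def alpha_bar_schedule(T: int = 1000) -> np.ndarray:
    """Cumulative product of (1 - beta_t) for 'scaled_linear' betas (assumed)."""
    betas = np.linspace(0.00085 ** 0.5, 0.012 ** 0.5, T) ** 2
    return np.cumprod(1.0 - betas)


def ddpm_loss(z_gt, z_mesh, z_gs, eps_model, t, alpha_bar, rng):
    """Noise-prediction (epsilon) L2 loss for one sample with dual conditioning."""
    eps = rng.standard_normal(z_gt.shape)
    a = alpha_bar[t]
    # Forward diffusion corrupts only the target latent.
    z_t = np.sqrt(a) * z_gt + np.sqrt(1.0 - a) * eps
    # Conditions are concatenated channel-wise: 4 + 4 + 4 = 12 input channels.
    x = np.concatenate([z_t, z_mesh, z_gs], axis=0)
    eps_pred = eps_model(x, t)
    return float(np.mean((eps_pred - eps) ** 2))


rng = np.random.default_rng(0)
w = rng.standard_normal((8, LATENT_CH, 1, 1))  # toy 1x1 pretrained conv
w12 = expand_first_conv(w)
z = rng.standard_normal((LATENT_CH, 16, 16))


def conv1x1(weight, x):
    return np.einsum("oc,chw->ohw", weight[:, :, 0, 0], x)


# Feeding the same latent to all three slots reproduces the pretrained output.
assert np.allclose(conv1x1(w12, np.concatenate([z, z, z])), conv1x1(w, z))

ab = alpha_bar_schedule()


def zero_model(x, t):
    # Untrained stand-in that always predicts zero noise.
    return np.zeros((LATENT_CH,) + x.shape[1:])


loss = ddpm_loss(z, z, z, zero_model, t=500, alpha_bar=ab, rng=rng)
```

The tiling-and-scaling initialization is one common way to keep the pretrained network's behavior at the start of fine-tuning. With the zero predictor, the loss is simply the mean squared noise, so it sits near 1.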

Figure 2: Illustration of the customized fine-tuning protocol for adapting a pretrained diffusion model into GSFixer, enabling it to handle diverse artifact types and missing regions.

Data Augmentation for Inpainting

The training renderings contain few large missing regions, so fine-tuning on them alone would not teach GSFixer to inpaint. To close this gap, a random mask augmentation strategy is introduced. Semantic masks derived from real-image annotations are randomly applied to the mesh and 3DGS renderings, simulating occlusions and under-constrained regions. Gaussian blur approximates the soft boundaries typical of 3DGS artifacts. This augmentation is critical for enabling GSFixer to generalize to large holes and occlusions in novel views.
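The augmentation can be sketched in NumPy as follows. The text does not specify several details, so the sketch assumes them:

- a single mask shared by both renderings;
- a blur sigma of 2 pixels;
- black fill for masked pixels;
- a square standing in for a semantic mask.

`apply_random_mask` and the mask bank are hypothetical names.

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(mask: np.ndarray, sigma: float) -> np.ndarray:
    """Separable 2D Gaussian blur with edge padding; output keeps the HxW shape."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(mask, r, mode="edge")

    def conv(v):
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # blur along width
    return np.apply_along_axis(conv, 0, rows)    # blur along height


def apply_random_mask(i_mesh, i_gs, mask_bank, rng, sigma=2.0, fill=0.0):
    """Punch a soft-edged hole into both conditioning renderings.

    mask_bank: list of binary HxW masks (1 = region to remove). The ground-truth
    target is left untouched, so the model must learn to inpaint the hole.
    """
    m = mask_bank[rng.integers(len(mask_bank))].astype(float)
    soft = np.clip(gaussian_blur(m, sigma), 0.0, 1.0)[..., None]  # HxWx1 weights
    keep = 1.0 - soft
    return i_mesh * keep + fill * soft, i_gs * keep + fill * soft


rng = np.random.default_rng(0)
mask = np.zeros((32, 32))
mask[8:24, 8:24] = 1.0  # square stand-in for a semantic mask
i_mesh = np.ones((32, 32, 3))
i_gs = np.ones((32, 32, 3))
m_mesh, m_gs = apply_random_mask(i_mesh, i_gs, [mask], rng)
```

After masking, pixels deep inside the mask are fully removed and pixels far from it are untouched. Pixels on the mask boundary are blended, which mimics the soft edges of 3DGS holes.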

Inference and 3D Distillation

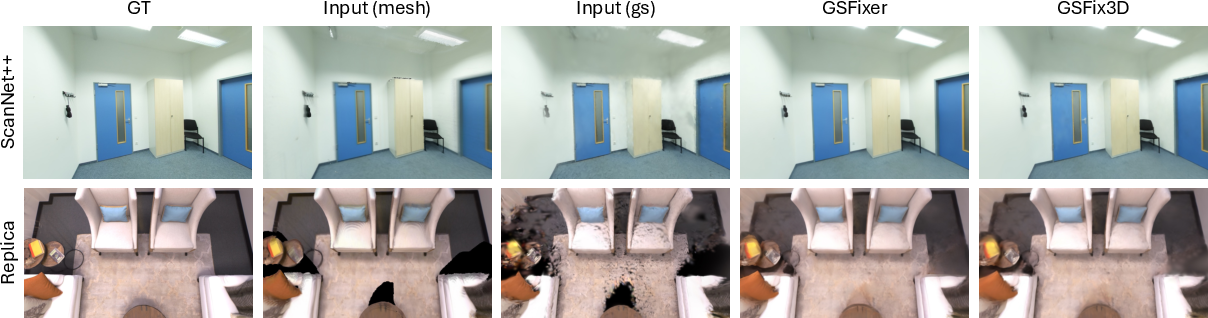

At inference, GSFixer receives mesh and 3DGS renderings from novel viewpoints, encodes them into the latent space, and iteratively denoises the target latent using a DDIM schedule. The output is a repaired image with artifacts removed and missing regions inpainted. These images are then used to optimize the 3DGS parameters via a photometric loss combining L1 and SSIM terms, with adaptive density control to fill previously empty regions. Multi-view constraints are enforced by augmenting the training set with repaired views and poses, further improving global coherence.
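The inference and distillation steps above can be sketched as two pieces.

1. A deterministic DDIM loop (eta = 0) denoises the target latent while the mesh and 3DGS latents stay fixed as conditions.
2. A photometric loss of the form (1 - λ)·L1 + λ·(1 - SSIM) drives the 3DGS optimization.

The assumptions are:

- the noise schedule, timestep spacing and channel order;
- λ = 0.2, the default in the original 3DGS code;
- a single global SSIM window, used here for brevity. The original 3DGS implementation uses an 11×11 Gaussian window.

The oracle model is a test device, not part of the method.

```python
import numpy as np


def alpha_bar_schedule(T: int = 1000) -> np.ndarray:
    """Cumulative alphas for Stable Diffusion's 'scaled_linear' betas (assumed)."""
    betas = np.linspace(0.00085 ** 0.5, 0.012 ** 0.5, T) ** 2
    return np.cumprod(1.0 - betas)


def ddim_sample(eps_model, z_mesh, z_gs, alpha_bar, n_steps=50, rng=None):
    """Deterministic DDIM (eta = 0) with fixed mesh/3DGS conditioning latents."""
    rng = rng if rng is not None else np.random.default_rng()
    T = len(alpha_bar)
    timesteps = np.linspace(T - 1, 0, n_steps).round().astype(int)
    z = rng.standard_normal(z_mesh.shape)  # start from pure Gaussian noise
    for i, t in enumerate(timesteps):
        a_t = alpha_bar[t]
        # The final step jumps to alpha_bar = 1, i.e. the clean latent.
        a_prev = alpha_bar[timesteps[i + 1]] if i + 1 < n_steps else 1.0
        eps = eps_model(np.concatenate([z, z_mesh, z_gs], axis=0), t)
        z0_hat = (z - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)
        z = np.sqrt(a_prev) * z0_hat + np.sqrt(1.0 - a_prev) * eps
    return z


def global_ssim(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """Simplified SSIM treating the whole image as one window (inputs in [0, 1])."""
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return num / den


def photometric_loss(render, target, lam=0.2):
    """(1 - lam) * L1 + lam * (1 - SSIM); lam = 0.2 is an assumed default."""
    l1 = np.abs(render - target).mean()
    return (1.0 - lam) * l1 + lam * (1.0 - global_ssim(render, target))


# Sanity check: with an oracle noise predictor that knows the clean latent,
# DDIM returns that latent (up to floating-point error).
rng = np.random.default_rng(0)
ab = alpha_bar_schedule()
z_clean = rng.standard_normal((4, 8, 8))
z_mesh = rng.standard_normal((4, 8, 8))
z_gs = rng.standard_normal((4, 8, 8))


def oracle(x, t):
    z_t = x[:4]  # first 4 channels hold the noisy target latent
    return (z_t - np.sqrt(ab[t]) * z_clean) / np.sqrt(1.0 - ab[t])


z_out = ddim_sample(oracle, z_mesh, z_gs, ab, n_steps=20, rng=rng)
```

In a full system the repaired latent would be decoded by the VAE. The decoded image would then serve as `target` in `photometric_loss` against the 3DGS rendering at the same pose.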

Experimental Results

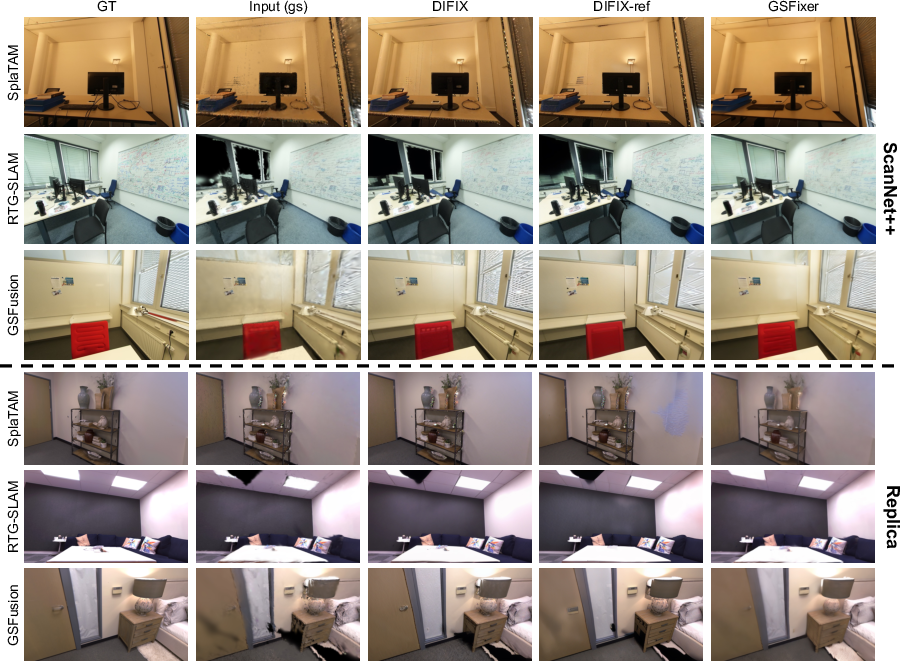

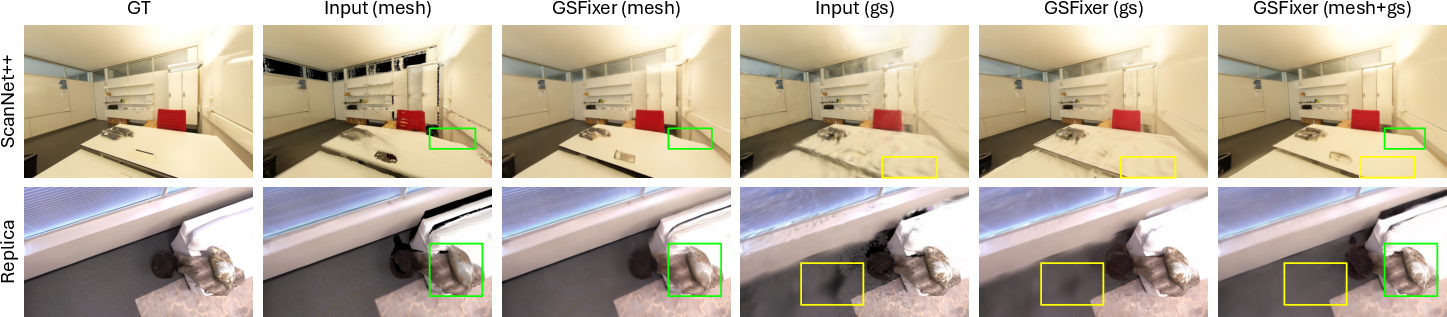

GSFix3D and GSFixer are evaluated on the ScanNet++ (real-world indoor) and Replica (synthetic indoor) datasets using PSNR, SSIM, and LPIPS. GSFixer is compared against the diffusion-based repair baselines DIFIX and DIFIX-ref on reconstructions produced by SplaTAM, RTG-SLAM, and GSFusion. Across these settings, GSFixer consistently achieves better artifact removal and inpainting, with PSNR gains of up to 5 dB over the DIFIX variants in challenging scenarios. The dual-input configuration (mesh + 3DGS) further boosts performance, especially in under-constrained regions.
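To put the reported gain in perspective, PSNR is 10·log10(MAX² / MSE). A fixed dB gain therefore corresponds to a fixed multiplicative reduction in MSE. The short calculation below assumes images normalized to [0, 1] (MAX = 1), and the MSE value is illustrative. It shows that a 5 dB gain means the mean squared error shrinks by a factor of 10^0.5, roughly 3.16.

```python
import math


def psnr(mse: float, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_for_gain(gain_db: float) -> float:
    """Factor by which MSE must shrink to raise PSNR by gain_db."""
    return 10.0 ** (gain_db / 10.0)


ratio = mse_ratio_for_gain(5.0)       # about 3.162
before = psnr(0.01)                   # 20 dB for MSE = 0.01
after = psnr(0.01 / ratio)            # 25 dB after the 5 dB gain
```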

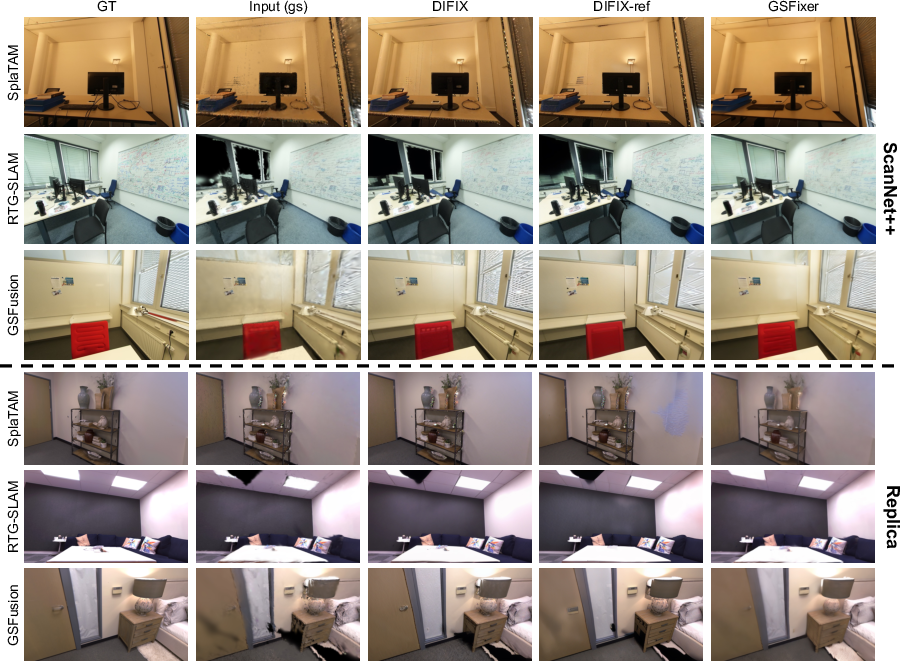

Figure 3: Qualitative comparisons of diffusion-based repair methods on the ScanNet++ and Replica datasets. All examples use only 3DGS reconstructions as the input source. Our GSFixer effectively removes artifacts and fills in large holes, where both DIFIX and DIFIX-ref fail to produce satisfactory results.

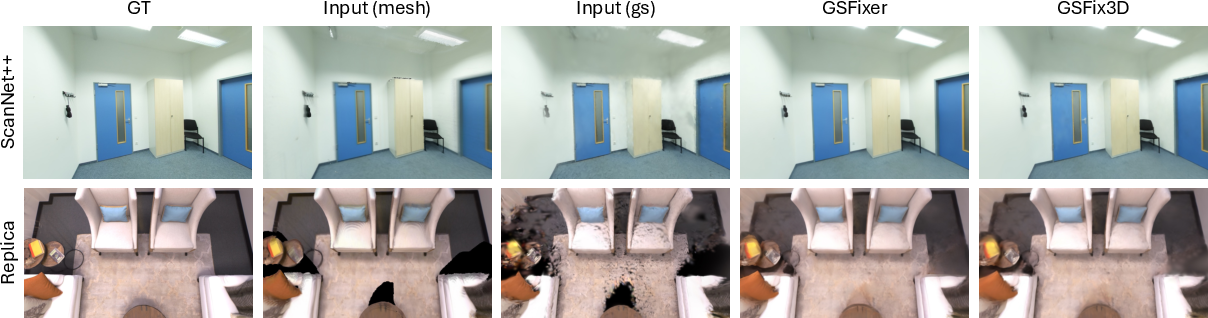

Figure 4: Qualitative comparison between GSFixer and GSFix3D on the ScanNet++ and Replica datasets. Both mesh and 3DGS reconstructions from GSFusion are used as input sources. The 2D visual improvements from GSFixer are effectively distilled into the 3D space by GSFix3D.

Real-World Robustness

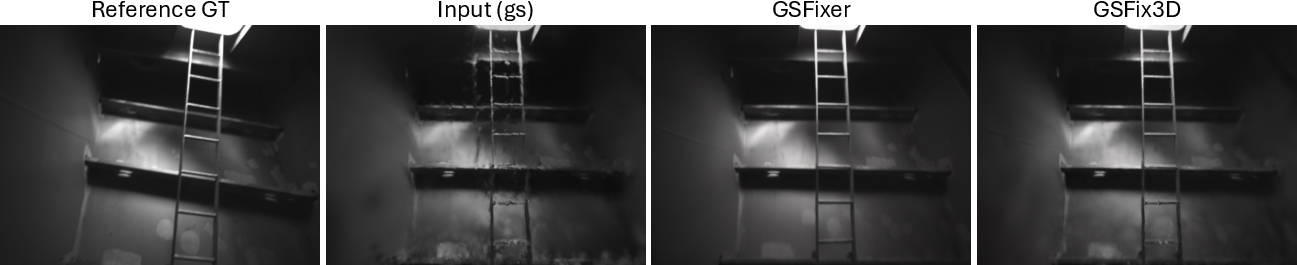

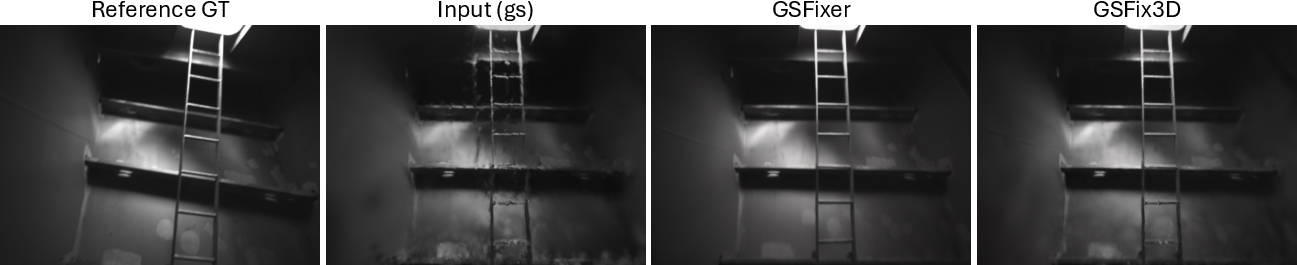

GSFix3D is resilient to pose errors and sensor noise in real-world data, as demonstrated on ship and outdoor scenes. It removes shadow-like floaters and fills missing regions, even when the initial reconstructions are degraded by inaccurate poses or incomplete measurements.

Figure 5: Novel view repair on self-collected ship data. Our method is robust to pose errors, effectively removing shadow-like floaters.

Ablation Studies

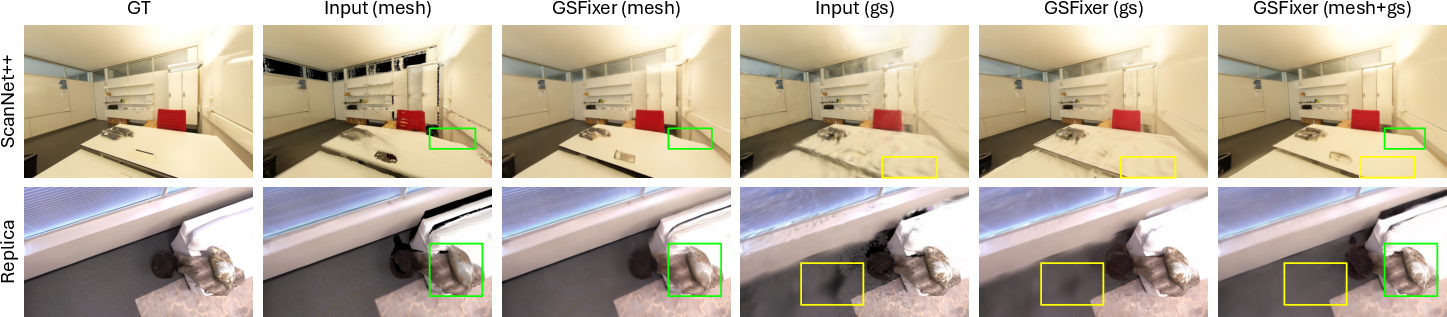

Ablations confirm the importance of dual-input conditioning and random mask augmentation. The dual-input setup consistently outperforms single-input variants, leveraging complementary information to resolve artifacts. Mask augmentation is essential for strong inpainting performance, enabling GSFixer to fill large missing regions with coherent textures.

Figure 6: Qualitative ablation of input image conditions on the ScanNet++ and Replica datasets. We compare GSFixer results using three types of inputs rendered from GSFusion: mesh-only, 3DGS-only, and dual-input. The artifacts (highlighted by green and yellow boxes) present in the single-input settings are effectively mitigated with the dual-input configuration.

Figure 7: Qualitative ablation of random mask augmentation on the Replica dataset. We compare GSFixer results fine-tuned with and without our proposed augmentation strategy. The differences in inpainting quality highlight the improved ability to fill large missing regions when augmentation is used.

Implementation Considerations

- Computational Requirements: GSFixer fine-tuning takes only a few hours on a single 24 GB GPU (RTX 4500 Ada). DIFIX-finetune, by contrast, requires substantially more memory (an A40 with 48 GB VRAM).

- Data Efficiency: Only minimal scene-specific fine-tuning is needed; no large-scale curated real image pairs are required.

- Scalability: The pipeline works with reconstructions from several 3DGS-based SLAM systems (SplaTAM, RTG-SLAM, GSFusion) and could in principle be extended to other explicit representations.

- Deployment: Because GSFixer is plug-and-play, it can be integrated into existing 3D reconstruction workflows. The improved renderings can benefit downstream applications such as AR/VR visualization and robot navigation.

Implications and Future Directions

GSFix3D demonstrates that diffusion models, when properly fine-tuned and conditioned, can be effectively harnessed for spatially consistent repair and inpainting in 3D reconstruction pipelines. The dual-input design and mask augmentation are critical for generalization and robustness. The approach is practical for real-world deployment, requiring only modest computational resources and minimal data curation.

Future work may explore:

- Extending the framework to unbounded outdoor scenes and dynamic environments.

- Incorporating temporal consistency for video-based novel view repair.

- Adapting the pipeline for multi-modal sensor fusion (e.g., LiDAR, thermal).

- Investigating end-to-end differentiable training for joint 3DGS and diffusion model optimization.

Conclusion

GSFix3D establishes a robust, efficient pipeline for repairing novel views in 3DGS reconstructions, leveraging diffusion priors via a customized fine-tuning protocol and dual-input conditioning. The method achieves state-of-the-art artifact removal and inpainting, adapts to diverse scenes and reconstruction pipelines, and is resilient to real-world data imperfections. Its practical efficiency and adaptability make it a valuable contribution to the field of 3D scene reconstruction and novel view synthesis.